Integration of Service Systems for Homeless Persons With Serious Mental Illness Through the ACCESS Program

Abstract

OBJECTIVE: The aim of this study was to evaluate the first of the two core questions around which the ACCESS (Access to Community Care and Effective Services and Supports) evaluation was designed: Does implementation of system-change strategies lead to better integration of service systems? METHODS: The study was part of the five-year federal ACCESS service demonstration program, which sought to enhance integration of service delivery systems for homeless persons with serious mental illness. Data were gathered from nine randomly selected experimental sites and nine comparison sites in 15 of the nation's largest cities on the degree to which each site implemented a set of systems integration strategies and the degree of systems integration that ensued among community agencies across five service sectors: mental health, substance abuse, primary care, housing, and social welfare and entitlement services. Integration was measured across all interorganizational relationships in the local service networks (overall systems integration) and across relationships involving only the primary ACCESS grantee organization (project-centered integration). RESULTS: Contrary to expectations, the nine experimental sites did not demonstrate significantly greater overall systems integration than the nine comparison sites. However, the experimental sites demonstrated better project-centered integration than the comparison sites. Moreover, more extensive implementation of strategies for system change was associated with higher levels of overall systems integration as well as project-centered integration at both the experimental sites and the comparison sites. CONCLUSIONS: The ACCESS demonstration was successful in terms of project-centered integration but not overall systems integration.

This article, the second of four in this issue of Psychiatric Services (1,2,3), provides details about research on the first of the two policy questions underlying the ACCESS (Access to Community Care and Effective Services and Supports) demonstration program: Does the implementation of system-change strategies result in improved service systems integration? To investigate this question, three working hypotheses were tested.

The first hypothesis was that providing earmarked funds and technical assistance to the nine ACCESS experimental sites to implement systems integration strategies would result in greater improvements in systems integration at those sites than at nine comparison sites. The second hypothesis was that providing such funding would also result in greater improvements in project-centered integration at the nine ACCESS experimental sites than at the nine comparison sites. The third hypothesis was that regardless of whether a site was an experimental site or a comparison site, sites that implemented the integration strategies more fully would demonstrate greater improvements in both systems integration and project-centered integration. The first two hypotheses are related to the experimental logic underlying the ACCESS demonstration, and the third hypothesis concerns the extent to which the use of these strategies, whether by experimental sites or by comparison sites, improves integration at the system level and at the project level.

An interorganizational network perspective (4,5) was used to test these three hypotheses. None of the grantee agencies provided the entire range of services needed by homeless persons with serious mental illness in any of the participating communities. Rather, service delivery was an interorganizational process in which needed services were secured through a network of local organizations that offered other clinical and supportive services (6,7).

Social network methods have been increasingly applied to the evaluation of mental health services (5,8,9,10) and other human services (7,11,12); such evaluations have shown that between-site differences as well as changes in service systems over time can be detected by these methods. The premise underlying this work is that the integration and coordination of service systems can be inferred from the patterns of interagency resource exchanges—for example, client referrals, information exchanges, and funding flows.

Planned interventions involving interagency networks may reverberate throughout a service system or may be limited to individual organizations (8). Accordingly, to assess the impact of the ACCESS demonstration, we analyzed two levels of integration: overall systems integration and project-centered integration. Overall systems integration refers to the presence of multiple client, information, and funding linkages among all organizations in a community's service network for homeless persons with mental illnesses. Project-centered integration refers to a subset of these linkages: only those involving the primary ACCESS grantee agency and its relationships with other organizations in the same service network.

The two types of integration may vary independently—for example, in a situation where the overall system is poorly linked but the ACCESS grantee agency has formed linkages with many of the other organizations in the network. The central question addressed in this article is whether the ACCESS demonstration improved one, both, or neither of these types of integration.

Methods

Data collection

The data for this study were collected over a five-year period (1994 to 1998) from agencies that provided mental health, substance abuse, housing, primary care, and social welfare and entitlement services to homeless persons with serious mental illness at each of 18 sites participating in the ACCESS demonstration. These sites are located in 15 of the largest cities in the United States: Bridgeport and New Haven, Connecticut; Chicago, Illinois; Topeka and Wichita, Kansas; Kansas City and St. Louis, Missouri; Charlotte and Raleigh, North Carolina; Philadelphia, Pennsylvania; Austin and Fort Worth, Texas; Richmond and Hampton-Newport News, Virginia; and Seattle, Washington.

Site visits. Data on the extent of implementation of 12 system-change strategies were obtained from structured observations made by three-person teams during two of the annual visits to each site, which usually lasted for three days (13). These data are measures of the extent to which systems integration activities, rated independently by each member of the site visit team, were implemented at each site, as discussed below. These ratings were made at the midpoint of the ACCESS demonstration (1996) and at the later stage (1998).

Network interviews. Three waves of interorganizational network data were collected independently of site visits at each of the 18 sites by different interviewers, usually two to four months after the annual site visit. The interorganizational network data collection methods used in this study are described in detail elsewhere (14,15). Overall, 15 data collection teams, each composed of two to six interviewers and a site coordinator, were hired and trained to conduct agency interviews at their respective ACCESS sites; three cities each had two sites, which could both be covered by one team. Agencies selected for inclusion in the network came from one of the five service sectors, provided direct client services, and served homeless persons. At least one respondent per agency was interviewed, but in many cases multiple respondents provided data. The respondents selected were "boundary spanners" (16,17)—people who were particularly knowledgeable about their agency's or program's connections with other service providers.

During each wave of data collection, an average of 60 interviews, each of about 60 to 90 minutes' duration, were conducted at each site over a period of 12 to 20 weeks. The refusal rate was approximately 3 percent for all three data collection efforts. Of the 947 agency respondents for whom data were available at wave 3, a total of 220 (23 percent) were agency directors or chief executive officers, 459 (48 percent) were program directors, 163 (17 percent) were case managers or outreach workers, 58 (6 percent) were therapists or other mental health professionals, and 47 (5 percent) were administrators or other types of workers. This distribution of respondents' job titles was similar for each wave of data collection.
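As a simple arithmetic check, the wave 3 counts reported above sum to 947 respondents and reproduce the rounded percentages. The dictionary labels below are shortened versions of the job titles in the text; the function name is illustrative only.

```python
# Wave 3 respondent counts by job title, as reported in the text
wave3_roles = {
    "agency directors or CEOs": 220,
    "program directors": 459,
    "case managers or outreach workers": 163,
    "therapists or other mental health professionals": 58,
    "administrators or other workers": 47,
}

def percent_shares(counts):
    """Return the total and each category's share, rounded to whole percents."""
    total = sum(counts.values())
    return total, {role: round(100 * n / total) for role, n in counts.items()}

total, shares = percent_shares(wave3_roles)
print(total)  # 947
for role, pct in shares.items():
    print(f"{role}: {pct}%")
```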

Measures

Dependent variables. Two dependent variables were analyzed: overall systems integration and project-centered integration. Measures for both variables were based on counts of client referrals, information exchanges, and funding flows between agencies in the ACCESS networks, as discussed below. Both measures were computed separately for each site at multiple time points.

Client referrals were measured by asking the question, "To what extent does your organization send clients to or receive clients from this other agency specifically related to homeless persons with a severe mental illness?" Information exchanges were measured by asking, "To what extent does your organization send information to or receive information from this other agency for coordination, control, planning, or evaluation purposes concerning individuals who are homeless with a severe mental illness?" Funding flows were measured with the question, "To what extent does your organization send funds (direct funding exchanges only, including grants and contracts) to or receive funds from this other agency specifically related to persons who are homeless with a severe mental illness?" Response categories for each question ranged from 0, none, to 4, a lot; sending relationships and receiving relationships were scored separately.

Site-specific measures were derived in three steps. First, because we were concerned primarily with the presence of a relationship, each reported service link score of 0 to 4 was dichotomized by recoding all responses greater than 0 as 1. Second, the dichotomized scores (0 or 1) were summed across six networks, which were defined by two relations (sending or receiving) and three contents (clients, information, and funds). Use of this approach produced a sum score for each interorganizational relationship ranging from 0, no links present, to 6, all links present; multiple links were represented by scores of 2 or more.
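The first two scoring steps can be sketched as follows. The data layout (a dictionary keyed by relation and content) and the names `link_score` and `is_multiplex` are hypothetical, and treating a missing rating as 0 is an assumption of this sketch rather than something the text states.

```python
# Two relations x three contents = six networks per relationship
RELATIONS = ("send", "receive")
CONTENTS = ("clients", "information", "funds")

def link_score(ratings):
    """Dichotomize each 0-4 rating (any value > 0 becomes 1) and sum over
    the six relation-by-content networks, giving a score from 0 to 6.
    Missing ratings are assumed to be 0 (hypothetical convention)."""
    score = 0
    for relation in RELATIONS:
        for content in CONTENTS:
            rating = ratings.get((relation, content), 0)
            if not 0 <= rating <= 4:
                raise ValueError(f"rating out of range: {rating}")
            score += 1 if rating > 0 else 0
    return score

def is_multiplex(ratings):
    """A relationship has multiple links when its score is 2 or more."""
    return link_score(ratings) >= 2

# Hypothetical ratings for one interorganizational relationship
example = {("send", "clients"): 3, ("receive", "clients"): 1,
           ("send", "information"): 4, ("receive", "funds"): 0}
print(link_score(example))    # 3
print(is_multiplex(example))  # True
```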

Third, overall systems integration was measured on a site-specific basis as the density or proportion of relationships that had multiple links out of the total number of possible relationships among all the agencies at a given site. Project-centered integration is also a density measure that involves multiple linked relationships, but the count is based only on relationships that involve the primary ACCESS grantee agency. The two measures reflect the extent to which the overall system and the ACCESS grantee agency, respectively, at each site were interconnected across multiple service-based relationships.
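A minimal sketch of the two density measures follows, assuming each relationship is an unordered pair of agencies, so that a site with n agencies has n(n - 1)/2 possible relationships and the grantee has n - 1. The text does not say how a pair's score is formed when both agencies report on it, so the sketch simply takes one 0 to 6 link score per pair; the agency names and scores are invented.

```python
from itertools import combinations

def overall_density(agencies, scores):
    """Share of all possible agency pairs with a link score of 2 or more.
    `scores` maps frozenset({a, b}) to a 0-6 score; absent pairs count as 0."""
    pairs = list(combinations(agencies, 2))
    multiplex = sum(1 for a, b in pairs if scores.get(frozenset((a, b)), 0) >= 2)
    return multiplex / len(pairs)

def project_centered_density(agencies, scores, grantee):
    """The same density, restricted to relationships involving the grantee."""
    others = [a for a in agencies if a != grantee]
    multiplex = sum(1 for a in others
                    if scores.get(frozenset((grantee, a)), 0) >= 2)
    return multiplex / len(others)

# Hypothetical five-agency network
agencies = ["grantee", "cmhc", "shelter", "clinic", "welfare"]
scores = {
    frozenset(("grantee", "cmhc")): 4,
    frozenset(("grantee", "shelter")): 2,
    frozenset(("grantee", "clinic")): 1,
    frozenset(("cmhc", "shelter")): 3,
}
print(overall_density(agencies, scores))                      # 0.3
print(project_centered_density(agencies, scores, "grantee"))  # 0.5
```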

Both integration measures were computed for three observation points: 1994, 1996, and 1998. These time points represent key developmental stages of the ACCESS demonstration: early (within six months of program start-up), midpoint (around 24 months after start-up), and late (around 44 months after start-up).

Independent variables. The main independent variable was study condition, a binary variable designating whether a site was an experimental site (coded as 1) or a comparison site (coded as 0). The two sites in each state were randomly assigned to a condition before funding was provided. This variable was the main focus of the experimental, or intent-to-implement, analyses of the effects of the demonstration, which are discussed below.

A second independent variable, strategy implementation, was used as a measure of the extent to which each site carried out 12 strategies aimed at integrating local service systems for homeless persons with serious mental illness. Examples of these strategies are a systems integration coordinator position, an interagency coordinating body, co-location of services, cross-training, and client tracking systems (1,13). Site visitors rated each strategy for each site on a scale ranging from 0, none (no steps taken to implement), to 5, high (strategy fully implemented).

Interrater reliability was good. For the initial ratings of strategy implementation, the site visitors agreed in 81 percent of cases (13). Moreover, in these instances raters assigned identical scores 88 percent of the time. When discrepancies occurred, the raters met and came to a consensus on the final score. These ratings were averaged across the 12 strategies to produce a summary score for each experimental site and each comparison site. For these calculations, each individual strategy was assigned equal weight.
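The summary score is an equal-weight mean of a site's 12 consensus ratings. The sketch below assumes ratings on the 0 (none) to 5 (high) range cited in the Discussion; the function name and the example ratings are hypothetical.

```python
N_STRATEGIES = 12

def implementation_score(ratings):
    """Equal-weight mean of a site's consensus ratings on the 12 strategies,
    each assumed to lie between 0 (none) and 5 (high)."""
    if len(ratings) != N_STRATEGIES:
        raise ValueError(f"expected {N_STRATEGIES} ratings, got {len(ratings)}")
    if any(not 0 <= r <= 5 for r in ratings):
        raise ValueError("ratings must lie between 0 and 5")
    return sum(ratings) / len(ratings)

# Hypothetical consensus ratings for one site
site_ratings = [5, 4, 3, 3, 2, 2, 2, 1, 1, 1, 0, 0]
print(implementation_score(site_ratings))  # 2.0
```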

Wave, scored 0, 1, and 2 for the three data collection points, was included in the analyses to measure trends in the integration scores over time. Interaction terms for study condition times wave and strategy implementation times wave were also included to determine whether integration scores changed at different rates over the course of the project depending on a site's study condition or its level of strategy implementation.
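To make this coding concrete, the sketch below expands 18 hypothetical site labels into the 54 site-by-wave observations analyzed in the models and builds the fixed-effect predictors for the intent-to-implement analysis: intercept, condition, wave, and condition times wave. In the as-implemented analysis, the strategy implementation score would take the place of condition. All names are illustrative.

```python
from dataclasses import dataclass

@dataclass
class Observation:
    site: str
    condition: int  # 1 = experimental, 0 = comparison
    wave: int       # 0, 1, 2 for the 1994, 1996, and 1998 data collections

def long_format(sites):
    """Expand {site: condition} into one observation per site per wave."""
    return [Observation(site, cond, wave)
            for site, cond in sites.items()
            for wave in (0, 1, 2)]

def design_row(obs):
    """Fixed-effect predictors: intercept, condition, wave, condition x wave."""
    return [1, obs.condition, obs.wave, obs.condition * obs.wave]

# 18 hypothetical site labels: 9 experimental (E) and 9 comparison (C)
sites = {**{f"E{i}": 1 for i in range(1, 10)},
         **{f"C{i}": 0 for i in range(1, 10)}}
rows = long_format(sites)
print(len(rows))            # 54
print(design_row(rows[2]))  # [1, 1, 2, 2]  (site E1 at wave 2)
```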

Analyses

Given the randomized experimental design of the ACCESS evaluation, testing hypotheses 1 and 2 involved an intent-to-treat (or, in this instance, an intent-to-implement) analysis. The question of interest was whether the nine experimental sites attained greater systems integration and project-centered integration over time than the nine comparison sites. Intent-to-implement analysis rests on the clinical trials principle that, in all analyses, subjects (or, in this study, sites) remain in the experimental condition to which they have been randomly assigned, regardless of subsequent adherence to the study protocol (18).

Testing hypothesis 3 focused on an "as-implemented" approach in which randomization was broken and sites were examined on the basis of their actual implementation behavior. The rationale for as-implemented analysis is based on evidence of nonadherence to study protocols (18). As reported below, some experimental sites did not carry out the implementation strategies as fully as expected, and a few comparison sites implemented some strategies by using non-ACCESS resources. The as-implemented approach is equivalent to a dose-response analysis, in which the question is whether implementation of a strategy—a dose—leads to higher integration scores. For the purpose of testing hypothesis 3 in this study, as-implemented analysis measured the extent to which changes in strategy implementation led to changes in systems integration or project-centered integration.

Random regression (19) was used to model the trends in systems integration and project-centered integration for the 18 sites across the three waves of data. The statistical package SAS PROC MIXED was used. The assumption that the performance of all sites for a given study condition was homogeneous was relaxed such that sites were also allowed to have random intercepts. These models adjusted the standard errors to account for the fact that there were 54 observations (18 sites times three waves) but only 18 independent cases.
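A compact way to write the intent-to-implement model described above is the following sketch. It assumes a single random intercept per site and the wave coding 0, 1, 2; the symbols are ours rather than the authors', and the comments define them.

```latex
% Y_{st}: integration score (overall or project-centered) of site s = 1,...,18 at wave t
% C_s: study condition (1 = experimental, 0 = comparison); W_t in {0, 1, 2}
% u_s: site-level random intercept; epsilon_{st}: residual
\operatorname{logit}(Y_{st}) = \beta_0 + \beta_1 C_s + \beta_2 W_t + \beta_3 \, C_s W_t + u_s + \varepsilon_{st},
\qquad u_s \sim \mathcal{N}(0, \sigma_u^2), \quad \varepsilon_{st} \sim \mathcal{N}(0, \sigma_\varepsilon^2)

% Intraclass correlation: share of variance lying between sites
\rho = \frac{\sigma_u^2}{\sigma_u^2 + \sigma_\varepsilon^2}
```

Under this sketch, the condition-by-wave interaction tests whether the coefficient on C_s W_t differs from zero; in the as-implemented analysis, the site's strategy implementation score would replace C_s.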

The intraclass correlation coefficient provided an estimate of this dependency among the observations. Study condition variables (intent-to-implement analysis) and strategy implementation variables (as-implemented analysis) were treated as fixed effects. An additional measure was included in preliminary models to control for the amount of time the respondents had occupied their current positions (a mean±SD duration of 4.4±4.3 years at wave 1). The effect of this variable was not significant in any of the models, so the measure was not included in the final analyses.
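A mixed model estimates the intraclass correlation from its variance components. As a simpler, swapped-in illustration, the sketch below uses the one-way ANOVA estimator ICC(1) on a sites-by-waves table, together with the Kish design effect, to show why 54 correlated observations carry the information of somewhere between 18 and 54 independent ones. The toy scores are invented, and neither function reproduces the authors' SAS output.

```python
def icc_oneway(groups):
    """ICC(1) from a one-way random-effects ANOVA.

    `groups` holds one equal-length list per site (its scores across waves).
    Returns (MSB - MSW) / (MSB + (k - 1) * MSW).
    """
    n = len(groups)     # number of sites
    k = len(groups[0])  # observations per site
    if any(len(g) != k for g in groups):
        raise ValueError("each site needs the same number of waves")
    grand = sum(sum(g) for g in groups) / (n * k)
    means = [sum(g) / k for g in groups]
    ssb = k * sum((m - grand) ** 2 for m in means)
    ssw = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    msb = ssb / (n - 1)
    msw = ssw / (n * (k - 1))
    return (msb - msw) / (msb + (k - 1) * msw)

def effective_n(n_obs, k, icc):
    """Kish design effect: n_obs / (1 + (k - 1) * icc)."""
    return n_obs / (1 + (k - 1) * icc)

toy = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]  # hypothetical scores, 3 sites x 3 waves
print(round(icc_oneway(toy), 3))  # 0.897
print(effective_n(54, 3, 1.0))    # 18.0
print(effective_n(54, 3, 0.0))    # 54.0
```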

Preliminary analyses showed that the integration variables were positively skewed. A logit transformation of the scores was performed. (A log transformation did little to change the skewness.) The transformed scores approximated a normal distribution. Accordingly, all the analyses used the logit-transformed scores, and the findings were interpreted in terms of the odds of a change in either systems integration or project-centered integration.
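Because the integration scores are proportions between 0 and 1, the logit ln(p / (1 - p)) maps them onto an unbounded scale, and a difference in logits exponentiates to an odds ratio. The sketch assumes no site scored exactly 0 or 1, since the text does not say how such values would be handled. Applying it to the wave 3 mean project-centered scores is only a rough illustration: the logit of a mean is not the mean of the site-level logits that the models actually used.

```python
import math

def logit(p):
    """Log-odds of a proportion; undefined at exactly 0 or 1."""
    if not 0 < p < 1:
        raise ValueError("logit is undefined at 0 and 1")
    return math.log(p / (1 - p))

def odds_ratio(p1, p0):
    """exp of a difference in logits equals the ratio of the two odds."""
    return math.exp(logit(p1) - logit(p0))

# Illustration only: wave 3 mean project-centered scores, experimental (.65)
# versus comparison (.57), treated as if they were single proportions
print(round(odds_ratio(0.65, 0.57), 2))  # 1.4
```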

Results

Strategy implementation

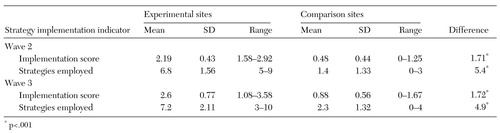

The mean±SD strategy implementation scores of the experimental sites and the comparison sites at waves 2 and 3 are listed in Table 1. (Wave 1 implementation data were not collected.) The scores for the experimental sites were significantly higher than those of the comparison sites: almost five times as high at wave 2 (2.19 compared with .48) and almost three times as high at wave 3 (2.60 compared with .88). Planned activities that were aimed at overcoming system-level fragmentation were undertaken and completed at a much higher level at the experimental sites than at the comparison sites.

In other words, the ACCESS experiment was carried out as intended. Nonetheless, the mean implementation score for the comparison sites was greater than zero at wave 2 and increased at wave 3 by an even greater proportion than it did at the experimental sites (more than 86 percent compared with more than 19 percent). The overlapping ranges at wave 3 indicate that some comparison sites actually "out-implemented" some of the experimental sites. This result strongly suggests that, over a nearly four-year period between wave 1 and wave 3, diffusion of the intervention from experimental sites to comparison sites occurred.

Overall systems integration

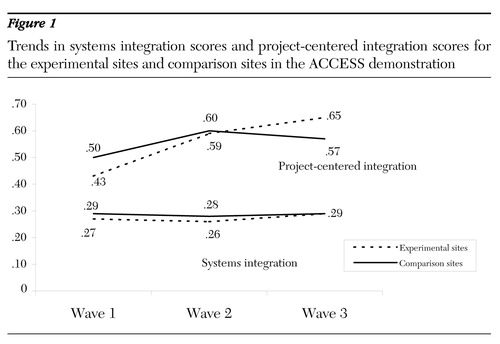

Figure 1 depicts trends in the mean scores for systems integration and project-centered integration for the experimental sites and the comparison sites over the study period. On systems integration, the experimental sites lagged behind the comparison sites at wave 1 (.27 compared with .29) and wave 2 (.26 compared with .28). Scores for both types of sites declined slightly at wave 2 and then rebounded at wave 3, when the level of integration was the same (.29). Although these differences in scores are small in absolute terms, the systems integration scores are based on interagency relationships aggregated to the site level, so each percentage-point difference between the experimental sites and the comparison sites reflects many hundreds of service relationships.

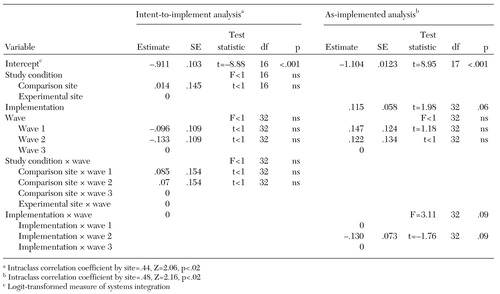

The results of the random regression analyses for predicting systems integration are summarized in Table 2. The intent-to-implement analysis showed a significant and moderately strong intraclass correlation between the repeated measures of each site's systems integration scores across the three waves. After adjustment for this correlation, no significant main effects were detected for study condition or for wave. Although the trend line for the experimental sites was somewhat steeper than the line for the comparison sites between wave 2 and wave 3, the interaction of study condition and wave was not statistically significant. The lack of a significant difference between the two types of sites is evidence that the experiment did not produce its desired effect at the overall systems level. Thus hypothesis 1, that funding would result in greater improvements in systems integration at the experimental sites than at the comparison sites, was not supported by our findings.

In the as-implemented analysis, strategy implementation rather than study condition was the major predictor variable. The main effect for strategy implementation approached statistical significance (p=.06), and there was a slight but nonsignificant association for implementation times wave. These results suggest that, over time, sites with high strategy implementation scores were more likely to have a high systems integration score than were sites with low strategy implementation scores. These findings are consistent with hypothesis 3—that regardless of the study condition, sites that implemented the integration strategies more fully would demonstrate greater improvements in both systems integration and project-centered integration.

Project-centered integration

From the trends in project-centered integration scores depicted in Figure 1, it is immediately clear that all the sites started out with a much higher level of project-centered integration than systems integration. However, at wave 1 the experimental sites lagged even further behind the comparison sites (.43 compared with .50) on project-centered integration than they did on systems integration. By wave 2, the experimental sites had almost caught up with the comparison sites (.59 compared with .60), and by wave 3 they had surpassed them (.65 compared with .57).

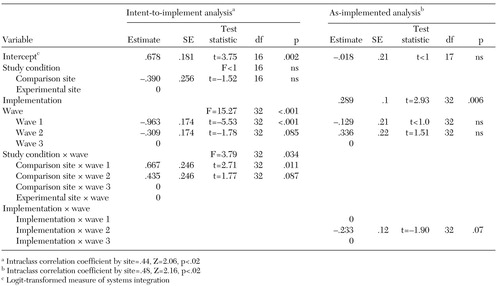

The results of the random regression analyses for predicting project-centered integration are summarized in Table 3. The intent-to-implement analysis, which used the adjusted values for project-centered integration, indicated that the main effect associated with study condition was not statistically significant but that the main effect of wave and the interaction of study condition by wave were significant. These results are consistent with the trends shown in Figure 1. On average, project-centered integration scores increased significantly across waves. However, the scores of the experimental sites changed more rapidly than those of the comparison sites between wave 1 and wave 2 and, whereas the scores of the comparison sites leveled off and decreased slightly between waves 2 and 3, those of the experimental sites continued their upward trend. Thus, over time, the experimental sites made up for an initial deficit and eventually attained a higher level of project-centered integration than did the comparison sites. These results are consistent with hypothesis 2—that there would be greater improvements in project-centered integration at the experimental sites than at the comparison sites.

The as-implemented analysis of the effects of strategy implementation on project-centered integration also yielded significant results (Table 3). There was a highly significant main effect for strategy implementation (p=.006). The association for the implementation by wave interaction approached significance (p=.07). These findings indicate that the sites that had high strategy implementation scores had a greater likelihood of having high project-centered integration scores at each wave. These results are consistent with hypothesis 3.

Discussion

Summary of findings

This study had two experimental findings, one negative and one positive. On the negative side, the ACCESS intervention did not produce its desired overall systems integration effect. On the positive side, the intervention did produce significantly greater project-centered integration.

At the systems level, the experimental findings show that conducting a well-specified and well-funded intervention did not have a significant effect on mean systems integration scores of the experimental sites beyond what was attained by the comparison sites. The absence of an experimental effect at the system level implies that additional funding and technical assistance for systems integration were neither necessary nor sufficient for changing the overall integration of these service systems. Thus our first hypothesis was not confirmed.

However, a much different picture emerged for project-centered integration. On this outcome, the intervention did have the expected effect. Over the course of the demonstration, the ACCESS grantee agencies at the experimental sites "outperformed" their counterparts at the comparison sites. This finding implies that the implementation strategies brokered by ACCESS grantee agencies had a positive impact on the agencies' integration with the other agencies in the service system. It is remarkable that, to accomplish this integration, the experimental sites had to overcome a sizeable lag relative to the comparison sites at the outset of the demonstration. The trajectory of the mean scores on project-centered integration for the experimental sites—a constantly increasing trend over time—was markedly different from the trend for the comparison sites, which trailed off after wave 2.

In addition, the findings suggest that when study condition is ignored and sites are analyzed by their level of strategy implementation, there is a positive association between implementation and integration at both the system level and the project-centered level; this association was statistically significant for project-centered integration and approached significance for systems integration. The implication is that these strategies can make a difference in the degree of integration both on a systems basis and on a project-centered basis. These findings are consistent with hypothesis 3: implementation of integration strategies helps to overcome fragmentation of services. Nevertheless, the positive association between strategy implementation and change in systems integration is suggestive rather than definitive. These results are not based on random assignment, and thus the association could be due to factors other than strategy implementation.

It is clear that it was easier for the experimental sites to improve project-centered integration than to integrate the overall system of mental health, substance abuse, primary care, housing, and social welfare and entitlement services. The policy implication is that a bottom-up approach, as opposed to a top-down approach, may be both less costly and more effective in changing a system of services for homeless persons with mental illness, at least in the short term. The integration trends depicted in Figure 1 clearly demonstrate that both the experimental sites and the comparison sites increased their project-centered integration scores between wave 1 and wave 2, a period during which the overall systems integration scores remained relatively constant. The growth of project-centered integration at the comparison sites during this period suggests that clinical service interventions, such as assertive community treatment teams, can have integrating effects of their own without system-level interventions. Similar relationships between service expansion and interorganizational interaction have been observed in homeless programs operated by the Department of Veterans Affairs (20).

Lack of overall system effect

There are several possible explanations for the lack of an overall system effect: diffusion of the interventions, inadequate "dosage" of the interventions, other secular trends, delayed effects, and restricted scope.

Diffusion. As the demonstration progressed, a few of the comparison sites began to mimic the experimental sites by adopting some of the same implementation strategies. As a result, three comparison sites scored as high on strategy implementation as some of the experimental sites, and two experimental sites scored as low on strategy implementation as some of the comparison sites.

For example, most of the comparison sites participated in the Continuum of Care planning process of the Department of Housing and Urban Development (HUD) and thus were obliged to become members of interagency coalitions to receive HUD funding. Similarly, at one comparison site the county mental health agency was reorganized and merged with the county social services department. The combined agency fostered interagency coordination and pooling of resources, thus elevating that site's wave 3 integration score. As long as ACCESS grant funding was not being used to subsidize these efforts, federal officials were unable to prevent comparison sites from undertaking activities that amounted to adoption of some of the same interventions as those adopted by the experimental sites.

These examples illustrate the difficulties of implementing long-term randomized designs in field situations. The subjects in this study were large cities or counties, and the incentives associated with the ACCESS grants were not substantial enough to blanket the sites or to insulate them from other opportunities and environmental influences. It is possible that diffusion of the intervention inflated the average systems integration scores at the comparison sites, thereby attenuating the overall system effect of the experiment. However, when we repeated the systems integration intent-to-implement analysis without these five sites, the results were essentially the same, which suggests that diffusion alone is not a complete explanation for the absence of an overall systems integration effect.

Dosage. A second explanation for the absence of a system-level effect has to do with dosage: the level of strategy implementation at the experimental sites may not have been strong enough to produce an improvement in systems integration. Although possible implementation scores range from 0 to 5, the average for experimental sites was only 2.19 at wave 2 and 2.60 at wave 3; these averages correspond to roughly 44 percent and 52 percent, respectively, of the maximum possible score. Thus, as a group, the experimental sites did not attain an especially high level of strategy implementation. It remains unclear whether more extensive implementation of integration strategies would have produced the differences in systems integration that were anticipated by the architects of the ACCESS demonstration.

Because of the small number of sites and a lack of variability in strategy implementation—most of the experimental sites implemented the same set of strategies—this study was unable to determine whether any one strategy is more effective in producing improved systems or project integration. Site visitors were impressed that the addition of a full-time service integration coordinator spurred other strategies (13,21). Knowing whether this position is the key to improving systems integration would be helpful for communities that have limited resources but a desire to improve the integration of services for targeted populations.

An idea related to the low-dosage argument is a recognition that the integration strategies used by the ACCESS experimental sites relied primarily on voluntary cooperation among participating agencies. Voluntary cooperation is a relatively weak mechanism for changing organizational behavior (7). Structural reorganization, program consolidation, and other forms of vertical integration (22,23,24) may have a much greater overall effect on systems integration. Clearly, the relative effectiveness of individual strategies for systems integration is an important avenue for further exploration.

Secular trends. A third explanation for the absence of an overall systems effect is related to secular trends that hindered systems integration, such as welfare reform and the spread of managed behavioral health care in the mid-1990s. Welfare reform during that period led to the tightening of eligibility requirements and the denial of income support and Medicaid benefits to beneficiaries with substance abuse problems. In addition, many cities experienced reductions in funding for mental health and substance abuse services during those years. The treatment strategy underlying ACCESS was that, after a year of intensive services, clients would be transferred to other agencies for ongoing services and support. However, with funding shortfalls, many community agencies were reluctant to take on these responsibilities. As a result, the interagency linkages envisioned by ACCESS were more difficult to establish and sustain.

The growth of managed behavioral health care at several sites also served to limit interagency ties as restrictive provider networks and bottom-line thinking forced many agencies to reevaluate their external relationships (25). Although these developments affected both the experimental sites and the comparison sites, they may partially explain the moderate levels of strategy implementation attained by the experimental sites.

Delayed effects. A fourth possible explanation for the lack of systems integration effects in this study is that the follow-up period was too short. This argument rests on the idea that service systems change very slowly, so that it might take three or four years for the effects to become fully apparent. Although this study did have a four-year follow-up period (1994 to 1998), in a number of ways the experiment was not fully implemented until mid-1996. In fact, site visits during the first 18 months revealed that the experimental sites were rarely implementing system-change strategies.

Faced with the prospect of no experiment, federal project officers organized an intensive three-day technical assistance retreat on strategic planning and strategies for achieving systems integration for representatives of the experimental sites (six- to eight-person teams representing key agencies). The retreat was followed by on-site technical assistance for the experimental sites. This intensive training coincided with a reversal in the trends in systems integration scores between wave 2 and wave 3. The growth in systems integration at wave 3 is consistent with positive effects of these efforts.

Viewed in this context, if the experiment did not really begin until mid-1996, then wave 3 data provide only an 18-month follow-up window. If the effects of strategy implementation were delayed, they may have been missed. Fortunately, a wave 4 follow-up was planned and conducted in early 2000 to assess the durability of the systems integration changes at the experimental sites after federal funding ended. These data, which will be reported in a future article, provide a full four-year follow-up to the 1996 retreat. Thus, the delayed-effects hypothesis can be evaluated empirically within the ACCESS evaluation.

Restricted range of impact. Finally, the lack of overall systems integration effects may reflect interventions that were localized and rarely encompassed the entire system of interest: mental health, substance abuse, primary care, housing, services for homeless persons, and social welfare and entitlement services. Rather, integration occurred primarily within the mental health sector, and the interventions had little effect outside it. Unpublished analyses at the level of the individual organization suggest that this is what happened. The odds of an agency having multiple relations with other agencies were much greater in the mental health sector than in primary care, substance abuse, social welfare and entitlement services, or housing and services for homeless persons. However, within the mental health sector, the odds were essentially the same at the experimental sites as at the comparison sites, which suggests that the experiment did not differentially affect particular service sectors, just as it did not affect the overall system, as reported above.
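The comparison in the preceding paragraph is stated in terms of odds. As a rough illustration of what such a comparison means, the sketch below computes odds and an odds ratio from a 2 × 2 table of agency counts. The counts are invented for illustration and are not ACCESS data, the function names are hypothetical, and the unpublished analyses cited above may well have used a multivariable model rather than a simple cross-tabulation.

```python
def odds(with_multiple: int, without_multiple: int) -> float:
    """Odds of an agency having multiple relations: p / (1 - p), i.e. a / b."""
    return with_multiple / without_multiple


def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Odds ratio for a 2 x 2 table of agency counts.

    a, b: agencies in group 1 with / without multiple relations
    c, d: agencies in group 2 with / without multiple relations
    """
    return odds(a, b) / odds(c, d)


# Hypothetical counts, invented for illustration only (not ACCESS data).
mental_health = (30, 10)     # 30 agencies with multiple relations, 10 without
housing = (12, 24)           # 12 with, 24 without
mh_experimental = (30, 10)   # mental health agencies at experimental sites
mh_comparison = (27, 9)      # mental health agencies at comparison sites

# Across sectors: odds of 3.0 versus 0.5 give an odds ratio of 6.0.
print(odds_ratio(*mental_health, *housing))
# Within the mental health sector: equal odds give an odds ratio of 1.0.
print(odds_ratio(*mh_experimental, *mh_comparison))
```

An odds ratio well above 1.0 corresponds to the cross-sector difference described above, while an odds ratio near 1.0 corresponds to the absence of an experimental-versus-comparison difference within the mental health sector.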

Together, these analyses suggest that the ACCESS demonstration was primarily a mental health sector intervention. Homeless persons with serious mental illness represent such a small fraction of the caseloads of the targeted agencies that these agencies had weak incentives to voluntarily accept very difficult clients from the mental health sector. On average, about a third of the interagency relations in each community were in the mental health sector. Because the remaining two-thirds lay in sectors where the odds of integration were lower, overall levels of systems integration changed only slightly over time. The similarity in integration scores between the experimental sites and the comparison sites speaks to the limited capacity of clinical service interventions to affect whole service systems.

Limitations

Because the unit of analysis in this study was the site, and because only 18 sites were available for study, there were limitations in establishing the internal validity of the ACCESS experiment. Moreover, there are both strengths and weaknesses associated with the social-networks method we used to measure systems integration. Social networks can be measured in many ways, and the application of these methods to the assessment of interorganizational service delivery is an evolving science.

Using self-reported data to measure actual communication links, client referrals, or funding exchanges introduces the potential for measurement error (26). We previously demonstrated the reliability of this method (27), but respondents may have reported interagency linkages inaccurately. One of the strengths of the network approach in this regard is that biases associated with one respondent's report of relationships are offset by the weighted responses of all other network members.
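One way to see how combining reports can dampen a single respondent's bias is to score each pair of agencies by averaging both agencies' reports of their tie. The sketch below assumes binary (0/1) tie reports and simple averaging; it is an illustration only, not the weighting actually used in the ACCESS network measures, and the function names and data are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def symmetrize(reports: Matrix) -> Matrix:
    """Combine both reports of each dyad: s_ij = (r_ij + r_ji) / 2.

    reports[i][j] is agency i's report of a tie to agency j (0 or 1).
    """
    n = len(reports)
    return [[(reports[i][j] + reports[j][i]) / 2 if i != j else 0.0
             for j in range(n)] for i in range(n)]


def degree(ties: Matrix, i: int) -> float:
    """Sum of agency i's tie values to all other agencies."""
    return sum(ties[i][j] for j in range(len(ties)) if j != i)


# Hypothetical four-agency network. In reality agency 0 is tied only to
# agency 1, but agency 0 over-reports ties to agencies 2 and 3; the other
# agencies report accurately.
biased_reports = [
    [0, 1, 1, 1],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]

print(degree(biased_reports, 0))              # agency 0's self-report alone
print(degree(symmetrize(biased_reports), 0))  # both parties' reports averaged
```

Agency 0's own report gives it three ties, whereas the averaged scores give 2.0 against a true value of 1, because each over-reported tie is counted at half weight once its partner's accurate report is included. Network-wide measures that pool every member's reports dilute one respondent's error further as the number of respondents grows.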

Conclusions

The findings reported here do not support the first part of the systems integration hypothesis that guided the ACCESS demonstration: implementation of system-change strategies did not result in greater overall systems integration at the nine experimental sites than at the nine comparison sites. However, there was evidence that, in the later stages of the demonstration, integration between ACCESS grantee agencies and other community service agencies (project-centered integration) was significantly greater at the experimental sites than at the comparison sites. Finally, regardless of whether a site was an experimental site or a comparison site, more extensive implementation of systems integration strategies was associated with both better systems integration and better project-centered integration.

What remains to be determined is whether the second part of the systems integration hypothesis holds: Does better integration of service systems lead to clinical and functional improvement for persons enrolled in the local ACCESS projects? Answers to this important question are presented in a companion article in this issue of the journal (2). Taken together, findings about the system, project, and clinical effects of ACCESS can guide future efforts to improve the effectiveness of mental health services for persons who are both homeless and mentally ill. These implications are discussed in a fourth article in this issue (3).

Acknowledgments

This study was funded through a contract between the Center for Mental Health Services of the Substance Abuse and Mental Health Services Administration, Department of Health and Human Services, and ROW Sciences, Inc. (now part of Northrop Grumman Corp.); through subcontracts between ROW Sciences, Inc., and the Cecil G. Sheps Center for Health Services Research at the University of North Carolina at Chapel Hill, Policy Research Associates of Delmar, New York, and the University of Maryland at Baltimore; and through an interagency agreement between the Center for Mental Health Services and the Department of Veterans Affairs Northeast Program Evaluation Center.

Dr. Morrissey, Dr. Calloway, and Dr. Thakur are affiliated with the Cecil G. Sheps Center for Health Services Research at the University of North Carolina at Chapel Hill, 725 Airport Road, Chapel Hill, North Carolina 27599-7590. Dr. Cocozza, Dr. Steadman, and Ms. Dennis are with Policy Research Associates, Inc., in Delmar, New York. Coauthors of this paper on the ACCESS National Evaluation Team are Robert A. Rosenheck, M.D., Frances Randolph, Dr.P.H., Matthew Johnsen, Ph.D., Margaret Blasinsky, M.A., and Howard H. Goldman, M.D., Ph.D. This paper is one of four in this issue in which the final results of the ACCESS program are presented and discussed.

Figure 1. Trends in systems integration scores and project-centered integration scores for the experimental sites and comparison sites in the ACCESS demonstration

Table 1. Mean strategy implementation scores for nine experimental sites and nine comparison sites at data collection waves 2 and 3

Table 2. Random regression models predicting systems integration at experimental sites and comparison sites at data collection waves 1, 2, and 3 using intent-to-implement and as-implemented analyses (N=18 sites × 3 waves=54)

Table 3. Random regression models predicting project-centered integration at experimental sites and comparison sites at data collection waves 1, 2, and 3 using intent-to-implement and as-implemented analyses (N=18 sites × 3 waves=54)

1. Randolph F, Blasinsky M, Morrissey J, et al: Overview of the ACCESS program. Psychiatric Services 53:945-948, 2002

2. Rosenheck R, Lam J, Morrissey J, et al: Do efforts to improve service system integration enhance outcomes for homeless persons with serious mental illness? Evidence from the ACCESS program. Psychiatric Services 53:958-966, 2002

3. Goldman H, Rosenheck R, Morrissey J, et al: Lessons from the evaluation of the ACCESS program. Psychiatric Services 53:967-969, 2002

4. Galaskiewicz J: Interorganizational relations. Annual Review of Sociology 11:281-304, 1985

5. Morrissey J, Calloway M, Bartko W, et al: Local mental health authorities and service system change: evidence from the Robert Wood Johnson Foundation Program on Chronic Mental Illness. Milbank Quarterly 72(1):49-80, 1994

6. Provan K, Milward H: Institutional-level norms and organizational involvement in a service-implementation network. Journal of Public Administration Research and Theory 1:391-417, 1991

7. Alter C, Hage J: Organizations Working Together. Newbury Park, Calif, Sage, 1993

8. Morrissey J, Tausig M, Lindsey M: Interorganizational networks in mental health systems: assessing community support programs for the chronically mentally ill, in The Organization of Mental Health Services: Societal and Community Systems. Edited by Scott W, Black B. Beverly Hills, Calif, Sage, 1985

9. Provan K, Milward H: A preliminary theory of interorganizational network effectiveness: a comparative study of four community mental health systems. Administrative Science Quarterly 40(1):1-33, 1995

10. Morrissey J (ed): Research in Community and Mental Health: Social Networks and Mental Illness. Stamford, Conn, JAI, 1998

11. Van de Ven A, Ferry D: Measuring and Assessing Organizations. New York, Wiley, 1980

12. Bolland J, Wilson J: Three faces of integrative coordination: a model of interorganizational relations in community-based health and human services. Health Services Research 29:341-366, 1994

13. Cocozza J, Steadman H, Dennis D, et al: Successful systems integration strategies: the ACCESS program for persons who are homeless and mentally ill. Administration and Policy in Mental Health 27:395-407, 2000

14. Morrissey J, Calloway M, Johnsen M, et al: Service system performance and integration: a baseline profile of the ACCESS demonstration sites. Psychiatric Services 48:374-380, 1997

15. Calloway M, Morrissey J: Overcoming service barriers for homeless persons with serious psychiatric disorders. Psychiatric Services 49:1568-1572, 1998

16. Aldrich H, Herker D: Boundary spanning roles and organization structure. Academy of Management Review 2:217-230, 1977

17. Tushman M, Scanlan T: Boundary spanning individuals: their role in information transfer and their antecedents. Academy of Management Journal 24:289, 1981

18. Piantadosi S: Clinical Trials: A Methodologic Perspective. New York, Wiley-Interscience, 1997

19. Bryk AS, Raudenbush SW: Hierarchical linear models: applications and data analysis methods, in Advanced Quantitative Techniques in the Social Sciences. Edited by De Leeuw J. London, Sage, 1992

20. McGuire J, Burnette C, Rosenheck R: Does expanding service delivery also improve interorganizational relationship among agencies serving homeless people with mental illness? Community Mental Health Journal, in press

21. Dennis D, Cocozza J, Steadman H: What do we know about systems integration and homelessness? Arlington, Va, National Symposium on Homelessness Research, 1998

22. Clement J: Vertical integration and diversification of acute care hospitals: conceptual definitions. Hospital and Health Services Administration 33:99-110, 1988

23. Mick S: Explaining vertical integration in health care: an analysis and synthesis of transaction-cost economics and strategic management theory, in Innovations in Health Care Delivery: Insights from Organization Theory. Edited by Mick S. San Francisco, Jossey-Bass, 1990

24. Walston S, Kimberly J, Burns L: Owned vertical integration and health care: promise and performance. Health Care Management Review 21(1):83-92, 1996

25. Johnsen M, Morrissey J, Landow W, et al: The impact of managed care on service systems for persons who are homeless and mentally ill, in Research in Community and Mental Health: Social Networks and Mental Illness. Edited by Morrissey J. Stamford, Conn, JAI, 1998

26. Marsden P: Network data and measurement. Annual Review of Sociology 16:435-463, 1990

27. Calloway M, Morrissey J, Paulson R: Accuracy and reliability of self-reported data in interorganizational networks. Social Networks 15:377-398, 1993