The Decision to Adopt Evidence-Based and Other Innovative Mental Health Practices: Risky Business?

Research-guided mental health practices tend to move into widespread use slowly, often taking a decade or more, despite extensive expenditures of money and effort ( 1 , 2 ). Considerable efforts are under way to accelerate the diffusion of evidence-based mental health practices ( 3 ) and other research-guided, innovative mental health practices ( 4 , 5 ). Much of this work focuses on facilitating the successful implementation of evidence-based practices among adopter organizations ( 3 , 6 , 7 ) and elaborating upon what it means to be "ready" to implement them at both the organization ( 3 , 8 ) and system levels ( 9 ). It is clear that getting implementation right is a complex process that requires attention to a wide range of issues. Accelerating the rate at which this occurs with regard to innovative health care practices presents an added challenge.

Although it is critical to be cognizant of the core ingredients of implementation success, it is equally important to understand what drives organizations to adopt innovative health care practices in the first place. The decision to adopt is prerequisite to implementation. In addition, aspects of this decision are expected to have enduring effects on the course and success of subsequent implementation efforts within organizations ( 10 , 11 , 12 ) and also are likely to influence diffusion patterns within broader systems ( 13 ). Consequently, it is important to understand key factors associated with this decision. This study examined the extent to which a risk-based decision-making framework is useful for understanding the decision to adopt research-guided, innovative mental health practices.

Evidence-based practices ( 3 ) and other research-guided mental health practices can be classified as innovations because they are likely to be seen as new by organizations considering their adoption, regardless of the amount of time that they have been available in the marketplace ( 14 ). As with decisions concerning other innovations, the decision to adopt an innovative mental health practice is not likely to be routine for most organizations ( 15 , 16 ). Instead, it is likely to be complex ( 17 ) and political ( 18 ) and to entail costs and benefits that have implications for the organization as a whole and for many of its stakeholders. In other words, the decision to adopt an innovative practice is likely to be a strategic decision and a risky decision.

One might argue that risks to organizations are modest and invariant when it comes to adopting research-guided practices, especially evidence-based practices, because, unlike some innovations, their benefits have been established. However, research suggests that evidence may be neither a necessary nor a sufficient prerequisite to adoption ( 18 , 19 ). In addition, with benefits come costs, and the magnitude of those costs cannot be estimated in the abstract. This is because "innovations are neither introduced into a vacuum nor do they exist in isolation" ( 20 ). Instead, features of innovations, including evidence-based practices, are expected to interact with characteristics of organizations ( 16 , 21 ) and their contexts ( 16 , 18 , 22 ) to result in differences among organizations in the perceived costs and benefits of adoption. These perceptions, in turn, are expected to influence the decisions and actions of top managers ( 23 , 24 , 25 ).

For example, in one organization, the adoption of a particular evidence-based practice may entail offering an entirely new service to a new consumer market. That is, it would be classified as an independent innovation ( 19 ). In contrast, in a second organization, adopting that same practice may involve dismantling and replacing an existing service. That is, it would be classified as a substitute innovation ( 19 ). The perceived totality of benefits and costs (estimated risk) of adopting this practice is likely to be different for these two organizations, despite the fact that the benefits of the practice have been well documented in the literature. Consequently, the perceived risk of adopting a particular innovative mental health practice is likely to vary from one organization to the next.

The vast body of literature related to the diffusion of innovations, much of which focuses on the adoption decision itself, lends support to this logic ( 17 , 20 , 26 ). Although the decisions of individuals have been the major focus of this work, there is growing evidence that many findings also apply to decisions made by organizations ( 22 ). However, the research has been criticized for lacking a basis in theory.

We assert that a risky-decision-making paradigm can be applied to the decision to adopt innovative mental health practices. This argument is primarily based on the observation that numerous features of innovations consistently linked to the decision to adopt innovations also are related to two concepts commonly connected to risky-decision-making behavior: perceived risk and capacity to manage risk ( 27 , 28 , 29 , 30 , 31 ).

The concept of perceived risk is reflected in several key innovation attributes because they deal with the probability and magnitude of benefits associated with adopting innovations ( 17 , 32 ). Specifically, the attributes include perceived utility, scientific evidence, evidence from the field, and perceived relative advantage. Similarly, the concept of capacity to manage risk ( 33 ) is mirrored in an array of important features of innovations, such as the complexity of the innovation, cost, expected ease of use, reversibility (amenability to trial use), availability of in-house know-how (knowledge set), and compatibility with existing practices ( 17 , 32 ). Thus features of innovations that have been found to be central to their diffusion are conceptually connected to important concepts included within models of decision making under risk.

Organizational risk propensity is another variable commonly used to explain risky-decision-making behavior ( 29 ). This is not surprising given that risk propensity is defined as the general tendency of individuals or organizations to be innovative, to take risks, or both ( 15 , 30 , 34 ). Consequently, risk propensity is the third risk-related measure included in our framework.

Following this logic, our primary hypotheses predicted that the decision to adopt an innovative mental health practice is negatively related to the perceived risk of adopting the practice and positively related to the perceived capacity to manage risks that may arise during implementation. In addition, we expected to find a positive relationship between organizations' propensity to take risks and the decision to adopt innovative mental health practices.

In other words, our major hypotheses implied that organizations that are the first to adopt, referred to as "frontrunners" and "early adopters" in our study, are expected to see the risks of adopting as lower, view their capacity to manage risk as higher, and have a greater propensity to take risks compared with organizations that have recently decided to adopt ("recent adopters"), organizations still considering whether to adopt ("potential adopters"), and those with no intention to adopt ("nonadopters"). Consequently, our predictions paint a picture of what early adoption implies with regard to risk taking that contrasts with the views of some experts. For example, whereas Gladwell ( 35 ) described early adoption as demonstrating a "willingness to take enormous risks," we assert that early adopters take quick action because they see the level of risk as lower and more manageable than do organizations choosing not to adopt.

The study also examined relationships between the three core risk constructs in our framework (that is, perceived risk, risk management capacity, and risk propensity) and several innovation attributes (for example, ease of use) and organizational attributes (for example, management's attitude toward change). Specifically, we predicted perceived risk to be negatively related to views about the availability of both scientific evidence and field-based evidence (that is, experiential evidence) ( 36 ). We also expected risk management capacity to be positively related to perceptions about the availability of dedicated resources ( 37 , 38 , 39 ), the existence of in-house expertise (that is, knowledge set) ( 36 ), and the extent to which the practice is viewed as compatible with current practices ( 17 , 20 ) and easy to use ( 40 ). Finally, we predicted that organizations that fostered employee learning ( 41 ) and employed managers with favorable attitudes toward change ( 42 ) would report a greater propensity to take risks than organizations lacking those attributes ( 36 ).

Methods

The four research-guided, innovative mental health practices that were the focus of this investigation are cluster-based planning, a research-based consumer classification scheme ( 43 ); multisystemic therapy, an evidence-based practice involving intensive home-based treatment for youths ( 6 ); the Ohio medication algorithms related to schizophrenia and depression, which are based on the Texas Medication Algorithms Project ( 44 ); and integrated dual disorder treatment, an evidence-based practice tailored to individuals with mental illness and substance abuse issues ( 45 ). Each of these practices is supported by its own Coordinating Center of Excellence. The centers have statewide service areas and are charged with promoting the practice and providing information and technical assistance to interested organizations. Although Coordinating Centers of Excellence receive funding from the state mental health authority (SMHA), the decision to adopt practices supported by the centers is voluntary.

Although resource limitations dictated that we could study only four practices, all eight Coordinating Centers of Excellence that had been established concurrent with the start of this study indicated an interest in participating in the research. Consequently, a deliberative process was undertaken involving university-based experts on organizational behavior to select the subset of four practices that was likely to be seen as varying on attributes central to testing study hypotheses (for example, perceived risk and scientific evidence) and on attributes that have been empirically linked to the decision to adopt innovations (for example, complexity and cost) ( 17 ). In addition, although it was desirable to have variability in perceived extent of scientific evidence, we preferred to choose practices supported by a reasonable amount of research. Consequently, we limited our selection to practices seen by SMHA experts as being supported by moderate to extensive scientific evidence.

This longitudinal research began early in 2001. For most of that year, the research team and staff of the Coordinating Centers of Excellence were engaged in start-up activities. In 2002 the Coordinating Centers began forwarding to the research team the names of organizations that had made inquiries about the practice (which occurred on a rolling basis over about one year). Our research team agreed not to make contact until the Coordinating Center of Excellence indicated that the adoption decision either had been made or had stalled.

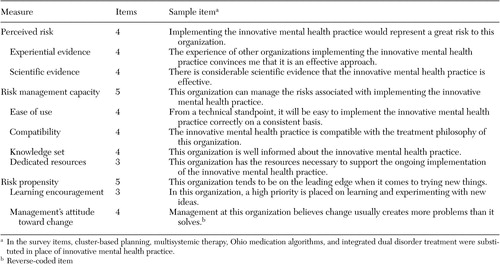

Data for testing our hypotheses related to the adoption decision were gathered only at the first point of contact with project informants to minimize the effects of retrospection. Consistent with accepted practice, data were gathered from key informants (for example, chief executive officers) directly involved in or privy to the decision ( 16 , 24 , 46 , 47 ). Confidential, one-hour, face-to-face interviews were conducted by a lead interviewer accompanied by a scribe. Questions in the interview protocol and also in a follow-up survey pertained to perceptions about the practice, the organization, and the decision, including the decision status (that is, adopt or not adopt). As explained in the results section, decision status was refined further into decision stage on the basis of information gleaned from initial interviews. Decision stage categories were consistent with those employed by Meyer and Goes ( 16 ) in their study of assimilation of health care innovations. Variables assessed by the survey were measured with multi-item, 7-point Likert scales. Item response options ranged from 1, strongly disagree, to 7, strongly agree. Scales either were adapted from existing measures ( 31 , 32 , 34 , 37 , 48 ) or were developed on the basis of theoretical definitions of constructs. ( Table 1 shows sample items associated with the 11 variables examined in our analyses.)

Most surveys were returned within ten business days. Confidentiality was guaranteed, but informants were asked to write their name on follow-up surveys to allow for matching with interview data. In addition to key informants involved in interviews, chief financial officers and employees of the Coordinating Centers of Excellence also were asked to complete related versions of the survey. Information provided by employees of the Coordinating Centers of Excellence allowed us to verify decision stage assignments.

The internal consistency reliability of scales was evaluated using Cronbach's alpha. In addition, two kinds of aggregation statistics were computed: reliability and agreement indices. The intraclass correlation coefficient (ICC) and the eta-squared values ( 49 ), both of which are calculated from one-way, between-project analyses of variance (ANOVAs), are reliability indices that reflect the extent to which knowing which project an informant is associated with helps us predict an informant's response. With regard to agreement, r_wg values for each measure and each organization ( 50 , 51 ) were examined as an index of agreement among responses within each project. These indices were used to determine if hypotheses could be tested at the project level. Bivariate correlations, one-way ANOVAs, and regression analyses were conducted to test study hypotheses.
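For readers less familiar with these indices, the following Python sketch illustrates how Cronbach's alpha and a within-group agreement index of the r_wg type can be computed. It is an illustration only and not the analysis code used in this study. The function names are hypothetical, and the single-item form of r_wg with a uniform null distribution over the seven response options is an assumption; the exact variant applied to the multi-item scales is not specified in the text.

```python
import numpy as np

def cronbach_alpha(items):
    """Cronbach's alpha for a respondents-by-items matrix of scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]                          # number of items in the scale
    item_vars = items.var(axis=0, ddof=1)       # sample variance of each item
    total_var = items.sum(axis=1).var(ddof=1)   # variance of the summed scale score
    return (k / (k - 1)) * (1 - item_vars.sum() / total_var)

def rwg(ratings, n_options=7):
    """Single-item agreement index: 1 - observed variance / null variance.

    Assumption: the null (no agreement) distribution is uniform over
    n_options response categories, whose variance is (A^2 - 1) / 12.
    """
    ratings = np.asarray(ratings, dtype=float)
    null_var = (n_options ** 2 - 1) / 12.0
    return 1 - ratings.var(ddof=1) / null_var

# Hypothetical example: three informants from one project rate one item.
print(rwg([5, 6, 5]))
```

Under these assumptions, three informants who answer 5, 6, and 5 on a 7-point item produce a value above the .70 cutoff commonly used to justify aggregating responses to the group level.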

Results

Information sufficient for hypothesis testing was received from 83 of 85 projects (98 percent) referred to the research team. Projects were distributed across practice as follows: 23 projects involved cluster-based planning, 12 projects involved the Ohio medication algorithms, 33 projects involved integrated dual disorder treatment, and 15 projects involved multisystemic therapy. Fifty of the 66 participating organizations inquired about one practice (50 of 83 projects), 15 inquired about two practices (30 of 83 projects), and one inquired about three practices (three of 83 projects). There were only five cases in which more than one practice was being implemented concurrently. Consequently, and consistent with similar research ( 52 ) and theory ( 21 ), project rather than organization was our unit of analysis.

The 66 participating organizations included comprehensive mental health agencies (58 of 66, or 88 percent, were mental health agencies) and inpatient behavioral health care organizations (eight of 66, or 12 percent, were inpatient facilities). The median number of full-time employees at agencies was 125, including six executives, whereas the median at inpatient facilities was 383, including nine executives. Annual budgets of a plurality of agencies (27 of 58, or 47 percent) ranged from three to eight million dollars, and all inpatient facilities reported annual budgets exceeding 20 million dollars. Whereas most inpatient facilities were located in urban settings (six of eight, or 75 percent), mental health agencies were equally distributed across rural, suburban, and urban areas.

Interviews were conducted with 177 informants, many of whom were chief executive officers, medical directors, and program managers. A total of 146 of these informants (82 percent) also returned follow-up surveys. In addition, related surveys were received from chief financial officers connected with 76 projects (92 percent) and informants from Coordinating Centers of Excellence connected with 75 projects (90 percent). Overall, 297 surveys were received and 177 interviews were conducted. The number of informants per project ranged from one (in the case of one project) to seven (in the case of two projects). However, it was more common to have multiple informants per project: three informants (31 projects, or 37 percent), four informants (19 projects, or 23 percent), and two informants (18 projects, or 22 percent).

Although projects could simply have been classified by decision status (that is, nonadopter versus adopter), information gathered during interviews allowed us to make finer distinctions, as reflected in our decision stage variable. Two categories of projects that did not adopt the innovation were apparent: nonadopters, which included the ten projects for which a firm decision had been made not to adopt the practice, and potential adopters, which included the 17 projects for which the decision was pending. In addition, and consistent with an implementation progression framework ( 16 ), three subgroups of adopters were distinguishable: eight projects that had just decided to adopt, although efforts were not yet under way to implement the practice (recent adopters); 27 projects that were among the first to decide to adopt the practice in response to efforts by the Coordinating Centers of Excellence and for which start-up implementation activities such as hiring or training were under way (early adopters); and 16 projects that decided to adopt before formal efforts by the Coordinating Centers of Excellence to promote the practice and for which actual implementation of the practice was under way (frontrunners). In addition, five of the 83 projects, which we refer to as de-adopters, had discontinued implementing by the time we arrived for interviews. Because we had no a priori hypotheses related to de-adoption, these five projects were excluded, leaving 78 projects for hypothesis testing.

Finally, it is worth noting that nine of the 16 frontrunner projects had received some start-up funding through the local mental health authority to help with initial implementation. Although no significant difference was found between early adopters (mean±SD score of 4.4±.56) and frontrunners (mean score of 4.8±.66) in terms of the extent to which ongoing implementation was seen as dependent on outside funding, we tested all major hypotheses in two ways to control not only for the potential effects of start-up funding but also for the potential impact of retrospection: with frontrunners excluded (62 projects) and with frontrunners included (78 projects).

Reliability of scales and ratings

The internal consistency of scales was acceptable, with Cronbach's alphas ranging from .63 to .92 and the majority exceeding .80 ( Table 2 ). Ten of 11 ICCs were significant at p<.05 or better, and one was significant at the p<.10 level. Eta-squared values indicated that "project" explained between 17 and 67 percent of the variance in dependent variables, suggesting adequate variability in perceptions across projects to allow us to go forward with examining within-project agreement. In fact, the magnitude of these eta-squared values compares favorably with other studies involving aggregation of data to the project level ( 52 ). Following accepted practice, r_wg was used as the indicator of within-group interrater agreement. Values of r_wg of .70 or higher are considered acceptable to support the use of group means for hypothesis testing ( 53 ). Average values for variables were well above that cutoff, so mean scale scores were used to test hypotheses at the project level.

Results of hypothesis testing

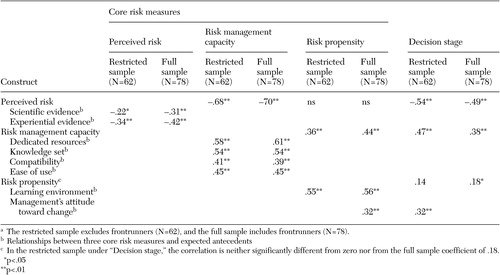

As noted above, all hypotheses were tested in two ways: by using a restricted sample (N=62), which excluded the 16 frontrunners, and by using the full sample (N=78). Results shown in tables are notated accordingly. Unless otherwise noted, findings are the same for the full and restricted samples.

Correlations were computed between the three risk measures and decision stage ( Table 3 ). As predicted, decision stage was negatively related to perceived risk (r=-.54, p<.01) and positively related to capacity to manage risk (r=.47, p<.01). The relationship between risk propensity and decision stage was in the expected direction for both samples, but it was significant only for the full sample (N=78; r=.18, p<.05).

a The restricted sample excludes frontrunners (N=62), and the full sample includes frontrunners (N=78).

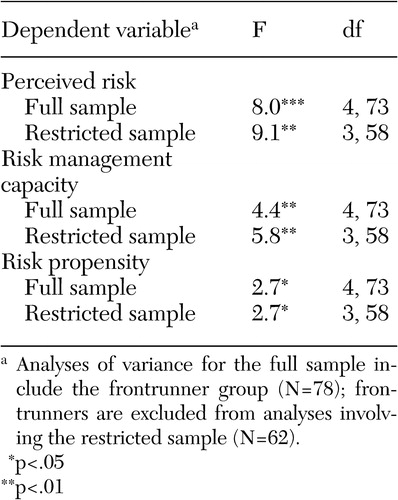

To further explore findings, three one-way ANOVAs were conducted with decision stage as the between-subject variable and the three risk measures as dependent variables. The ANOVA for perceived risk was significant (F=9.1, df=3 and 58, p<.01) and the rank ordering of perceived risk by stage was consistent with expectations ( Table 4 ). For example, perceived risk was significantly lower for frontrunners (mean score of 2.8±.8), early adopters (mean score of 2.7±.8), and recent adopters (mean score of 2.8±.8), compared with potential adopters (mean score of 3.5±1.2) and nonadopters (mean score of 4.6±1.2).

The ANOVA for risk management capacity also was significant ( Table 4 ). Consistent with expectations, frontrunners (mean score of 5.4±.9), early adopters (mean score of 5.6±.6), and recent adopters (mean score of 5.3±.4) reported having greater risk management capacity than nonadopters (mean score of 4.4±1.4). Potential adopters were about at the midpoint among the groups (mean score of 5.0±.8).

Similarly, the ANOVA for risk propensity was significant, but post-hoc comparisons revealed some unexpected findings ( Table 4 ). Although both frontrunners (mean score of 5.9±.8) and early adopters (mean score of 5.8±.6) reported the highest risk propensity in terms of absolute scores, nonadopters followed closely behind (mean score of 5.6±1.1). In addition, the risk propensity of recent adopters (mean score of 4.9±.9) was significantly lower than the scores for frontrunners and early adopters. Finally, the potential adopter group (mean score of 5.3±.9) was at about the midpoint of all groups.

The relative explanatory power of the three risk variables was then examined with stepwise regression analyses. Perceived risk explained 29 percent of the variance in decision stage (F=24.9, df=1 and 60, p<.001) for the restricted sample and 24 percent for the full sample (F=24.1, df=1 and 76, p<.001). Risk management capacity and risk propensity did not explain significant additional variance. Therefore, we examined whether perceived risk mediated the effects of risk management capacity and risk propensity on decision stage.
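As a rough consistency check on figures of this kind, the F statistic of a regression can be recovered from the proportion of variance explained and the degrees of freedom. The short Python sketch below applies the standard identity F = (R²/df_model) / ((1 - R²)/df_error) to the rounded percentages reported above; the function name is hypothetical, and small differences from the reported F values are to be expected because the percentages are rounded.

```python
def f_from_r_squared(r_squared, df_model, df_error):
    """F statistic implied by R-squared and the model/error degrees of freedom."""
    return (r_squared / df_model) / ((1 - r_squared) / df_error)

# Restricted sample: 29 percent of variance, df = 1 and 60.
print(round(f_from_r_squared(0.29, 1, 60), 1))  # 24.5

# Full sample: 24 percent of variance, df = 1 and 76.
print(round(f_from_r_squared(0.24, 1, 76), 1))  # 24.0
```

Both implied values are close to the reported F statistics of 24.9 and 24.1.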

Baron and Kenny's ( 54 ) four-step approach was used for testing perceived risk as a mediator. As shown in Table 5 , findings for both the restricted and full sample indicate that perceived risk fully mediated the effects of risk management capacity on decision stage. That is, after establishing that risk management capacity explained significant variability in both decision stage (step 1) and in perceived risk (step 2) and after establishing that perceived risk explained significant variability in decision stage (step 3), Table 5 shows that the effects of risk management capacity on decision stage were no longer significant when perceived risk was added into the equation as a second predictor variable (step 4). However, the mediating effect of perceived risk was not tested for risk propensity because the first criterion established by Baron and Kenny ( 54 ) was not satisfied: risk propensity did not explain significant variability in decision stage for either the full or restricted sample.
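The logic of the four steps can be sketched in Python as follows. This is a minimal illustration on synthetic data, not the study's analysis: the helper names are hypothetical, only regression slopes are returned, and the significance tests that each step requires in practice are omitted. In the example, x stands in for risk management capacity, m for perceived risk, and y for decision stage.

```python
import numpy as np

def ols(y, *predictors):
    """Least-squares coefficients [intercept, b1, b2, ...] of y on the predictors."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coefs, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return coefs

def baron_kenny_slopes(x, m, y):
    """Slopes examined at each of the four steps (no significance tests)."""
    x, m, y = (np.asarray(v, dtype=float) for v in (x, m, y))
    both = ols(y, x, m)
    return {
        "step1_x_on_y": ols(y, x)[1],        # predictor -> outcome
        "step2_x_on_m": ols(m, x)[1],        # predictor -> mediator
        "step3_m_on_y": ols(y, m)[1],        # mediator -> outcome
        "step4_x_controlling_m": both[1],    # predictor -> outcome, mediator held constant
        "step4_m_controlling_x": both[2],    # mediator -> outcome, predictor held constant
    }

# Synthetic data constructed so that x affects y only through m.
x = np.arange(8.0)
e = np.array([1, -1, -1, 1, 1, -1, -1, 1], dtype=float)  # uncorrelated with x
m = -x + e        # higher capacity, lower perceived risk
y = -2 * m        # lower perceived risk, later decision stage
print(baron_kenny_slopes(x, m, y))
```

In this constructed example the slope for x is nonzero at step 1 but falls to approximately zero at step 4 once m enters the equation, which is the pattern described as full mediation.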

Bivariate correlations between the three risk measures and relevant antecedent variables were then considered ( Table 3 ). As expected, for the restricted sample, perceived risk was negatively related to views about the availability of scientific evidence (r=-.22, p<.05) and experiential evidence (r=-.34, p<.05), and risk management capacity was positively related to views about the availability of dedicated resources (r=.58, p<.01), in-house expertise (that is, knowledge set) (r=.54, p<.01), the compatibility of the innovative mental health practice with the organization's treatment philosophy (r=.41, p<.01), and ease of implementing the practice (r=.45, p<.01). Finally, and as expected, organizational learning environment (r=.55, p<.01) and management's attitude toward change (r=.32, p<.01) were positively related to reported risk propensity. As shown in Table 3 , results for the full sample are parallel.

To further examine linkages with antecedents, the risk measures were regressed on relevant antecedents by using stepwise regression analyses. Experiential evidence explained 10 percent of the variance in perceived risk (F=8.0, df=1 and 60, p<.01). In addition, dedicated resources (33 percent) and knowledge set (10 percent) explained significant variability in risk management capacity (F=22.2, df=2 and 59, p<.01). Finally, learning encouragement explained 30 percent of the variability in risk propensity (F=18.0, df=1 and 60, p<.01).

Finally, and for exploratory purposes, decision stage was regressed by using a stepwise approach on the three risk measures and all the antecedent variables noted in Table 2 . For the full sample, perceived risk explained the greatest amount of variance (24 percent) in decision stage followed by experiential evidence (7 percent) (F=17.0, df=2 and 75, p<.01). When frontrunners were excluded, perceptions about the availability of dedicated resources explained the greatest amount of variance (32 percent) followed by perceived risk (6 percent) (F=18.0, df=2 and 59, p<.01), a difference that may be partly attributable to the start-up funding acquired by more than half of the frontrunner projects.

Discussion

Results suggest that the decision to adopt an innovative mental health practice, in part, is a decision made in consideration of risk. Perceived risk explained a substantial part of what differentiated adopters from nonadopters. Moreover, and contrary to popular views that early adopters of innovations are willing to take "enormous risks" ( 35 ), these data offer the novel idea that early adopters act because they see the risks associated with adopting as lower than their nonadopter counterparts do, partly because the risks are seen as more manageable. Risk apparently is in the eye of the beholder. Consequently, the risks and benefits of adopting innovative mental health practices, including evidence-based practices, cannot be evaluated in the abstract but need to be considered with regard to particular organizational and contextual conditions.

Findings also suggest that perceptions about risk are malleable, implying that it is possible to impact the likelihood of adopting an innovative mental health practice. Results reconfirm the idea that exposure to information about an innovative practice is important. This includes information about what science says and perhaps, more importantly, what the field says. Efforts must continue to disseminate scientific findings in ways that are accessible to key organizational decision makers. In addition, and consistent with diffusion models that emphasize the importance of communication between early adopters and potential adopters, energy must be directed toward exposing potential adopters of innovative practices to current adopters who are seeing favorable results in the field and toward carefully managing and monitoring early field testing of innovative mental health practices to increase the odds that findings from the field will be favorable. Views about perceived risk and, consequently, the likelihood of adoption are likely to be affected by exposure to these types of information.

In addition, findings indicate that views about capacity to manage risks related to implementation have an indirect influence on organizations' decisions to adopt innovative mental health practices (that is, the effects operate through perceived risk). It is no surprise that the extent to which time, money, and human resources devoted to implementation (that is, dedicated resources) are seen as available has a sizeable influence on assessed capacity. Yet time may be the only facet of dedicated resources that is available to many resource-scarce organizations. Even so, time affords additional opportunities to plan, build consensus among key stakeholders, and manage the timing of the adoption decision.

Views about the extent to which the innovative practice is compatible with current practices and is easy to implement also are related to views about capacity to manage risk. The compatibility issue suggests a selection strategy. That is, organizations would be wise to choose to adopt innovative practices that fit well with their strategic plans, missions, and philosophies of treatment. This also may increase the receptivity of current staff to the new practice. With regard to ease of use, perceptions of ease are likely to covary with the availability of implementation-related resources, including but not limited to implementation manuals and other forms of educational and technical expertise, including access to peer organizations with successful adoption experiences.

The well-worn phrase "knowledge is power" also is somewhat relevant to the issue of capacity to manage risk. Findings indicate that organizations that are well informed about innovative practices and that actively engage in efforts to gather information about them from peers in the field are inclined to report a greater capacity to manage risk and also are more likely to adopt the innovative practice. With greater knowledge comes understanding, not only of potential challenges but also of strategies for addressing those challenges.

Finally, data from this study confirm the established finding that organizations vary in their propensity to take risks. However, our data indicate that risk propensity may not be the best indicator of an organization's likelihood to adopt a particular innovative practice. In fact, frontrunners, early adopters, and nonadopters reported an equally high propensity to take risks. However, this may be a good thing because risk propensity and related attributes (for example, learning environment) are relatively enduring characteristics of organizations that are less subject to modification. Even so, risk propensity may be an important factor in the long run if it differentiates adopter organizations that are willing to persist with implementation in the face of adversity from those that are not.

There are a number of potential limitations to this study. First, data were gathered in only one state mental health system, which may have some idiosyncratic characteristics (for example, the existence of Coordinating Centers of Excellence). In addition, only public-sector organizations participated in the study, and the practices examined represent only that subset of innovative mental health practices that are supported by at least a moderate level of research. These conditions may set boundaries on the generalizability of findings. Further, as is typical with interorganizational studies, analyses were constrained by sample size. Even though our sample is larger than most and our measures performed well, statistical power limited our choices of approaches used to test hypotheses and to explore associations among measures.

Conclusions

Future research is needed to examine the extent to which our adoption decision-making model generalizes to other innovative practices and systems. Perhaps the conduct of multistate, collaborative studies that share basic design elements and common assessment tools would provide a data set rich enough to address these important concerns. In addition, alternative frameworks need to be explored to enrich our understanding of factors affecting the adoption and diffusion of innovative mental health practices within state mental health systems.

Acknowledgments

This research was funded by grant 1168 from the Ohio Department of Mental Health and by grant 00-65717-HCD from the John D. and Catherine T. MacArthur Foundation Network on Mental Health Policy Research. The authors gratefully acknowledge the key contributions of Dushka Crane-Ross and the dedication of other members of the research team, including Bev Seffrin, Richard Massatti, Sheri Chaney-Jones, Carol Carstens, Vandana Vaidyanathan, Sheauyuen Yeo, Pud Baird, and Helen Anne Sweeney. The authors are also indebted to the organizational participants in the research who gave generously of their time to make this project possible.

1. Corrigan PW, Steiner L, Glaser SG, et al: Strategies for disseminating evidence-based practices to staff who treat people with serious mental disabilities. Psychiatric Services 52:1598-1606, 2001

2. Goldman HH, Ganju V, Drake R, et al: Policy implications for implementing evidence-based practices. Psychiatric Services 51:1126-1129, 2001

3. Drake RE, Goldman HH, Leff HS, et al: Implementing evidence-based practices in routine mental health settings. Psychiatric Services 52:179-182, 2000

4. Drake RE: The principles of evidence-based mental health treatment, in Evidence-based Mental Health Practice. Edited by Drake RE, Merrens ME, Lynde DW. New York, Norton, 2005

5. Achieving the Promise: Transforming Mental Health Care in America. Pub no SMA-03-3832. Rockville, Md, Department of Health and Human Services, President's New Freedom Commission on Mental Health, 2003

6. Aarons GA: Mental health provider attitudes toward adoption of evidence-based practice: the evidence-based practice attitude scale. Mental Health Services Research 6:61-74, 2004

7. McFarlane WR, McNary S, Dixon L, et al: Predictors of dissemination of family psychoeducation in community mental health centers in Maine and Illinois. Psychiatric Services 52:935-942, 2001

8. Henggeler SW, Schoenwald SK, Pickrel SG: Multisystemic therapy: bridging the gap between university and community-based treatment. Journal of Consulting and Clinical Psychology 63:709-717, 1995

9. Finnerty M, Lynde D, Goldman HH: State mental health authority yardstick: examining the impact of state mental health actions on the quality and penetration of EBPs in community mental health centers. Presented at the Conference of State Mental Health Agency Services Research. Baltimore, Feb 6-8, 2005

10. Nutt PC: Planning Methods for Healthcare Organizations, 2nd ed. New York, Wiley, 1992

11. Nutt PC: Surprising but true: half of the decisions in organizations fail. Academy of Management Executive 13:75-90, 1999

12. Vroom VH, Jago A: The New Leadership: Managing Participation in Organization. Englewood Cliffs, NJ, Prentice Hall, 1998

13. Mahajan V, Peterson RA: Integrating time and space in technological substitution models. Technological Forecasting and Social Change 14:127-146, 1979

14. Woodman R, Sawyer J, Griffin R: Toward a theory of organizational creativity. Academy of Management Review 18:293-321, 1999

15. Damanpour F: Organizational innovation: a meta-analysis of effects of determinants and moderators. Academy of Management Journal 34:555-590, 1991

16. Meyer AD, Goes JB: Organizational assimilation of innovations: a multilevel contextual analysis. Academy of Management Journal 31:897-923, 1988

17. Rogers EM: Diffusion of Innovations, 4th ed. New York, Free Press, 1995

18. Denis JL, Hébert Y, Langley A, et al: Explaining diffusion patterns for complex healthcare innovations. Health Care Management Review 27:60-74, 2002

19. Abrahamson E: Managerial fads and fashions: the diffusion and rejection of innovations. Academy of Management Review 16:586-612, 1991

20. Mahajan V, Peterson RA: Models for Innovation Diffusion. Newbury Park, Calif, Sage, 1985

21. Damanpour F: The adoption of technological, administrative, and ancillary innovations: impact of organizational factors. Journal of Management 13:675-688, 1987

22. Frambach RT, Schillewaert N: Organizational innovation adoption: a multi-level framework of determinants and opportunities for future research. Journal of Business Research 55:163-176, 2002

23. Daft R, Weick K: Toward a model of organizations as interpretation systems. Academy of Management Review 9:284-296, 1984

24. Hambrick DC, Mason PA: Upper echelons: the organization as a reflection of its top managers. Academy of Management Review 9:193-206, 1984

25. Wolfe RA: Organization innovation: review, critique, and suggested research directions. Journal of Management Studies 31:405-430, 1994

26. Kimberly JR, Evanisko M: Organizational innovation: the influence of individual, organizational, and contextual factors on hospital adoption of technological and administrative innovations. Academy of Management Journal 24:689-713, 1981

27. Kahneman D, Tversky A: Prospect theory: an analysis of decisions under risk. Econometrica 47:263-291, 1979

28. Johnson E, Tversky A: Affect, generalization, and the perception of risk. Journal of Personality and Social Psychology 45:20-31, 1983

29. MacCrimmon KR, Wehrung DA: Taking Risks: The Management of Uncertainty. New York, Free Press, 1986

30. Sitkin SB, Pablo AL: Reconceptualizing the determinants of risk behavior. Academy of Management Review 17:9-39, 1992

31. Sitkin SB, Weingart LR: Determinants of risky decision-making behavior: a test of the mediating role of risk perceptions and risk propensity. Academy of Management Journal 38:1573-1592, 1995

32. Davis FD, Bagozzi RP, Warshaw PR: User acceptance of computer technology: a comparison of two theoretical models. Management Science 35:982-1003, 1989

33. March JG, Shapira Z: Managerial perspectives on risk and risk taking. Management Science 33:1404-1418, 1987

34. MacCrimmon KR, Wehrung DA: A portfolio of risk measures. Theory and Decision 19:1-29, 1985

35. Gladwell M: The Tipping Point. New York, Little, Brown, 2002

36. Panzano PC, Roth D, Crane-Ross D, et al: The innovation diffusion and adoption research project (IDARP), in New Research in Mental Health, 16th ed. Edited by Roth D, Lutz W. Columbus, Ohio, Office of Program Evaluation and Research, Ohio Department of Mental Health, 2004

37. Panzano PC, Billings RS: An organizational-level test of a partially mediated model of risky decision-making behavior, in Academy of Management Best Paper Proceedings. Edited by Dosier LN, Keys B. Statesboro, Ga, Georgia Southern University, Office of Publications, 1997

38. Baird IS, Thomas H: Toward a contingency model of strategic risk taking. Academy of Management Review 10:230-243, 1985

39. Singh J: Performance, slack and risk taking in organizational decision-making. Academy of Management Journal 29:562-585, 1986

40. Moore GC, Benbasat I: Development of an instrument to measure the perception of adopting an information technology innovation. Information Systems Research 2:172-191, 1991

41. Senge PM: The Fifth Discipline: The Art and Practice of the Learning Organization. New York, Doubleday/Currency, 1990

42. Dunham RB, Grube JA, Gardner DG, et al: The development of an attitude toward change instrument. Presented at the annual meeting of the Academy of Management. Washington, DC, Aug 1989

43. Rubin WV, Panzano PC: Identifying meaningful subgroups of adults with severe mental disabilities. Psychiatric Services 53:452-457, 2002

44. Chiles JA, Miller AJ, Crismon ML, et al: The Texas Medication Algorithm Project: development and implementation of the schizophrenia algorithm. Psychiatric Services 50:69-74, 1999

45. Drake R, Essock S, Shaner A, et al: Implementing dual diagnosis services for clients with severe mental illness. Psychiatric Services 52:469-476, 2001

46. Real K, Poole S: Innovation implementation: conceptualization and measurement in organizational research, in Research in Organizational Change and Development. Edited by Pasmore WA, Woodman RW. London, United Kingdom, Elsevier, 2005

47. Kumar NA, Stern LW, Anderson JC: Conducting inter-organizational research using key informants. Academy of Management Journal 36:1633-1651, 1993

48. Panzano PC, Billings RS: The influence of issue frame and organizational slack on risky decision making: a field study, in Academy of Management Best Paper Proceedings. Edited by Moore D. Charleston, SC, The Citadel, 1994

49. Shrout PE, Fleiss JL: Intraclass correlations: uses in assessing rater reliability. Psychological Bulletin 86:420-428, 1979

50. James LR, Demaree RG, Wolf G: Estimating within-group interrater reliability with and without response bias. Journal of Applied Psychology 69:85-98, 1984

51. James LR, Demaree RG, Wolf G: rwg: an assessment of within-group interrater agreement. Journal of Applied Psychology 78:306-309, 1993

52. Klein KJ, Conn AB, Sorra JS: Implementing computer technology: an organizational analysis. Journal of Applied Psychology 86:811-824, 2001

53. George L: Personality, affect, and behavior in groups. Journal of Applied Psychology 75:107-116, 1990

54. Baron RM, Kenny DA: The moderator-mediator variable distinction in social psychological research: conceptual, strategic, and statistical considerations. Journal of Personality and Social Psychology 51:1173-1183, 1986