Effectiveness, Transportability, and Dissemination of Interventions: What Matters When?

Abstract

The authors identify and define key aspects of the progression from research on the efficacy of a new intervention to its dissemination. They highlight the role of transportability questions that arise in that progression and illustrate key conceptual and design features that differentiate efficacy, effectiveness, and dissemination research. An ongoing study of the transportability of multisystemic therapy is used to illustrate independent and interdependent aspects of effectiveness, transportability, and dissemination studies. Variables relevant to the progression from treatment efficacy to dissemination include features of the intervention itself as well as variables pertaining to the practitioner, client, model of service delivery, organization, and service system. The authors provide examples of how some of these variables are relevant to the transportability of different types of interventions. They also discuss sample research questions, study designs, and challenges to be anticipated in the arena of transportability research.

The executive summary of the Surgeon General's Conference on Children's Mental Health has cited the prevention of mental health problems and treatment of mental illnesses among youths as a national health priority (1). Four of the eight goals on the conference's action agenda pertain to increased deployment of "scientifically proven" prevention and treatment services.

In response to this call to action, leading researchers in child treatment and services are scrutinizing traditional models of validating new treatments, in which attributes of the practice context were largely ignored or considered to be "nuisance variables." New models designed to speed the progression from early evidence of efficacy to effective deployment in service systems are being developed (2,3,4). One model, the clinic-based treatment development (CBTD) model (4), has the scientific rigor needed to establish efficacy; the model sequences effectiveness studies to increasingly include populations, clinicians, and clinical settings that are reflective of usual-care circumstances.

To further accelerate the pace of progression from development to deployment, the clinic intervention development (CID) model (3) recommends modifications of the CBTD model that include practice-setting variables in the initial construction of an intervention protocol. The CID model specifies research on dissemination and sustainability as the final step of the treatment validation process.

Thus the literature on the diffusion of innovation is increasingly relevant to treatment and services research. This paper begins with a brief description of constructs and methods found in that literature. Subsequent sections of the paper identify key aspects of the research progression from efficacy to dissemination and illustrate conceptual and methodological issues to be addressed in this progression.

Diffusion and dissemination of innovation

Research on the diffusion of innovation spans diverse fields, from agriculture to manufacturing to medicine. Such research examines the diffusion of information; the diffusion of "hard" technologies, such as computer chips and seeds; and the diffusion of "soft" technologies, such as management and teaching strategies. It also looks at factors that influence the adoption of innovation by individuals, organizations, and communities and investigates proactive dissemination of information and technological innovations. A primary objective of most studies of the diffusion of innovation is to identify types of organizations, communities, or individuals that do or do not adopt a particular innovation and factors that have an impact on the adoption or nonadoption of the innovation. A second objective is to observe whether the innovation is adopted as it was originally designed or adapted. Finally, some researchers have begun to examine whether the innovative practice is sustained over time and what factors influence sustainability (5).

Qualitative reviews of research on the diffusion of innovation and technology transfer have been written by Everett Rogers (6), perhaps the leading contemporary scholar on the topic. Efforts to apply that knowledge base to the transfer of behavioral sciences technologies to the field have also been reviewed (7,8). The resulting body of literature is replete with broad principles and recommendations about the diffusion of innovation. Some scholars make distinctions between "diffusion" and "dissemination," with the former term indicating the unplanned or spontaneous spread of ideas and the latter term reserved for diffusion that is directed and planned (6).

Although Rogers subsumes both terms under the concept of diffusion, retaining the distinction may better serve the development of the science needed to speed the progression from the validation of a treatment to its widespread deployment. Two related observations inform this recommendation. Most of the literature focuses on the naturalistic spread of innovations rather than proactive dissemination efforts; thus few studies are experimental or prospective, two design features that are required to build an evidence base on how to embed effective treatments in service systems.

Diffusion and dissemination in the mental health field

A few studies have examined the diffusion of empirically validated mental health treatments or services. These studies examined retrospectively whether a particular model of service delivery had been implemented outside of the original innovator's shop, identified the extent to which users implemented the practice as it was designed, and described possible barriers to fidelity of implementation and more widespread adoption of the model. Prominent examples are assertive community treatment for adults with persistent mental illness (9,10,11) and the Teaching Family group home model for youths with behavior problems (12,13).

In the children's services arena, widespread diffusion of the Homebuilders model of family preservation services as an alternative to foster care placement (14) and the Child and Adolescent Service System Program (CASSP) system of care model for children and families has also occurred (15), although these innovations were not empirically validated before dissemination efforts began. In both instances, however, key stakeholders across a variety of contexts—government agencies, advocacy groups, and foundations—perceived a significant problem, such as dramatic increases in foster care and a lack of services for youth with mental health needs. The stakeholders collaborated to initiate legislative and funding changes necessary to encourage dissemination of these innovations.

Because measures of critical ingredients of the Homebuilders model were not developed before its dissemination and because such measures are still being validated for the CASSP system of care (16), it is not known whether the programs diffused under each of these labels resemble the programs as designed. However, the results of rigorous studies conducted after the diffusion of family preservation programs suggest that they are not particularly effective in preventing placement of at-risk children in foster care (17,18). In addition, systems of care have been shown to improve service access, coordination, and satisfaction but not outcomes (19).

The Fairweather Lodge experiment (20) stands out as an innovative and rare examination of proactive dissemination efforts. The experiment was a prospective study of dissemination strategies for an alternative to psychiatric hospitalization for adults that had demonstrated promise in a randomized trial. Although significant methodological problems complicate efforts to draw clear conclusions from the study, the original study design was clear. Three distinct methods of persuading hospitals to establish a lodge for mentally ill adult patients were deployed; hospitals were randomly assigned to implement one of these methods, and moves to adopt the model were tracked. The study took a planned approach to disseminating the lodge model by designing information packages and persuasion activities that targeted a variety of hospital administrators and staff. Nonetheless, fewer than 10 percent of hospitals attempted to adopt the lodge model, and many of those did so incompletely.

Recently, initiatives to disseminate interventions with established efficacy have been sponsored by several federal agencies and philanthropic foundations. For example, the Blueprints for Violence Prevention project of the Office of Juvenile Justice and Delinquency Prevention was designed to promote dissemination of delinquency prevention and intervention programs with established efficacy. A descriptive evaluation of the implementation of these programs, which unfortunately does not include evaluation of outcomes, is under way (21). The "toolkit" approach to implementation of evidence-based practices for adults with severe mental illnesses (22) is designed to address potential barriers to practitioners' implementation of evidence-based guidelines at various levels, such as barriers among clinicians and administrators and financial barriers. This approach will be evaluated in future studies.

Precursors to dissemination

Proactive dissemination of efficacious treatments seems a compelling next step in efforts to increase the prevalence of evidence-based practices. However, given likely differences in the conditions surrounding efficacy trials and community practice, transportability issues should probably be examined before dissemination efforts are undertaken. Transportability research examines the movement of efficacious interventions to usual-care settings. Three broad questions can help organize the complex issues inherent in such research. What is the intervention? Who can conduct the intervention in question, under what circumstances, and to what effect (for clients and systems)? Who will conduct the intervention in question, under what circumstances, and to what effect (for clients and systems)?

Each of these questions can be answered at multiple levels: the consumer, the practitioner, the organization that employs the practitioners, and the service system, including referral agents and payers. The first and second questions are likely to be answered in efficacy studies and initial studies of effectiveness. The second question is also likely to be addressed in later-phase effectiveness and transportability studies, and the third in transportability and dissemination studies.

Transportability from efficacy to effectiveness

The fledgling area of research on the transportability of efficacious treatments to usual-care settings (23) is a precursor to dissemination research. An analogy from agriculture may help clarify one distinction between transportability and dissemination. A new seed that is demonstrated in a research laboratory to yield greater and more nutritious corn harvests if watered, fertilized, and planted in a soil with a particular pH balance can generally be successfully used outside the laboratory in settings with similar water, fertilization, soil pH, and climatic conditions. However, if the experimental seed grew to bear corn only in a test tube, efforts to disseminate it to the agriculture industry would be premature. Instead, evidence that the seed will grow in soil and under typical growing conditions would have to be generated.

The study designed to obtain this evidence could be considered a transportability study. If crop yields in the study were greater and more nutritious, dissemination efforts would be warranted. Having obtained evidence that the new technology (the seed) produces the desired outcomes under conditions faced by the ultimate consumers of the technology (individual and industrial farmers), it is reasonable and ethical to attempt its broader distribution and to evaluate the impact of distribution efforts. At this point dissemination research can begin. Strategies to raise awareness of the seed among potential consumers and identify consumers likely to reject, adopt, or adapt it, and other such efforts, can now be implemented and evaluated.

Research on treatment for children and adolescents more often resembles test-tube conditions than soil-test conditions. The resulting dilemma is that treatments validated in efficacy studies may not be effective when implemented under conditions facing most community practitioners. For example, most research studies include treatment manuals, special training for clinicians, and ongoing clinical support and monitoring of treatment implementation. However, few community-based treatment settings and practices can implement all features of the research intervention. Heterogeneous populations and high caseloads characterize few research settings but most community-based settings in which children are treated (24,25). Thus some aspects of both validated treatment protocols and community practice settings may have to be modified so that effective treatments can be delivered in real-world settings. Which aspects of the protocols and practice settings require modification? What kinds of modifications are required? These are transportability questions at the efficacy-effectiveness interface.

Transportability from effectiveness to dissemination

In effectiveness studies that encompass representative practice conditions, the need to study transportability before dissemination may be obviated. On the other hand, initial effectiveness studies may overlook or minimize relevant variations in client populations, practitioners, models of service delivery, provider organizations, and financing of services. Factors such as clinician turnover, salary, or allegiance to the treatment model may vary little in an effectiveness study conducted in a community clinic, but variation may be considerable in other settings in which the intervention is designed to be used. A parent management training protocol delivered during evening hours at a neighborhood school in an effectiveness study may be slated for dissemination to community clinics that operate on a 9-to-5 schedule.

Thus potential barriers to service access would be introduced, including inconvenient location of the facility and changed service hours. These barriers may influence the effectiveness of the intervention. In such circumstances, it may be prudent to examine transportability before dissemination is undertaken. This intermediary step may slow the delivery of an effective intervention to clients in need. On the other hand, the literature on the diffusion of innovation suggests a high risk of outright rejection of a new treatment or of adaptations that dilute its effectiveness. Premature dissemination of treatments that ill fit clients, practitioners, provider agencies, or service systems can "poison the waters" among these groups, not only for the treatment in question but for the use of any empirically validated treatment.

An example

An ongoing study of the implementation and outcomes of multisystemic therapy (26) in diverse communities illustrates the potential overlap and distinctions that characterize efficacy, effectiveness, transportability, and dissemination research. Multisystemic therapy had been validated in studies with designs that might be considered "hybrids" of efficacy and effectiveness research. On several dimensions these studies would likely meet the criteria of effectiveness research. For example, they employed few exclusionary criteria in enrolling participants; many participants had comorbid disorders, were involved with several agencies, and were economically disadvantaged. The clinicians were community-based practitioners hired for the studies. A home-based model of service delivery was used, and the studies included cost estimates. On other dimensions these studies embodied features typical of efficacy studies. For example, participants were randomly assigned to study conditions, and the investigators trained clinicians in the intervention, supervised them, and monitored their fidelity to the intervention.

The results of these studies showed promising long-term outcomes. After the results were published, the demand for multisystemic therapy programs increased considerably. A clinical training and consultation process was designed to approximate for clinicians at remote locations the clinical training, support, and monitoring of adherence provided to clinicians in clinical trials. However, it quickly became apparent that a variety of organizational and service system factors not present in the clinical trials could influence clinicians' implementation of the model. Research on organizational behavior and technology transfer also pointed to the potential influence of organizational factors on the implementation and outcomes of human services. The convergence of research and practical experiences thus led to the design of the multisystemic therapy transportability study, funded by the National Institute of Mental Health, of which the first author is principal investigator.

The multisystemic therapy transportability study examines several factors that are believed to influence the implementation and outcomes of this intervention in diverse provider organizations and communities. A mediation model of effectiveness is being tested in which outcomes for children are predicted by clinicians' adherence to multisystemic therapy, which in turn is predicted by specific supervisory, organizational, and interagency factors. It is not clear that such variables could or should have been investigated during the early phases of treatment validation. The first order of business in the initial investigation was to specify this highly individualized treatment model and the clinical support needed to implement it and evaluate the outcomes for children.
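The logic of the mediation model described above can be sketched numerically. The following is a minimal illustration on simulated data, not the study's analysis: the variable names (`org_support`, `adherence`, `outcome`), the effect sizes, and the sample size are all hypothetical, and ordinary least squares stands in for the multilevel methods that a real study with clinicians nested in organizations would likely require.

```python
import numpy as np


def ols_slopes(y, *predictors):
    """Return OLS slope coefficients (intercept dropped) for y on the predictors."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef[1:]


def simulate(n=2000, a=0.5, b=0.7, seed=0):
    """Simulate full mediation: organizational factor -> adherence -> child outcome.

    All values are hypothetical and chosen only for illustration.
    """
    rng = np.random.default_rng(seed)
    org_support = rng.normal(size=n)                   # organizational factor
    adherence = a * org_support + rng.normal(size=n)   # mediator
    outcome = b * adherence + rng.normal(size=n)       # no direct path by design
    return org_support, adherence, outcome


def mediation_estimates(org_support, adherence, outcome):
    """Product-of-coefficients estimates for a single-mediator model."""
    (a_hat,) = ols_slopes(adherence, org_support)               # path a
    b_hat, direct_hat = ols_slopes(outcome, adherence, org_support)  # path b, direct path
    (total_hat,) = ols_slopes(outcome, org_support)             # total effect
    return {
        "a": a_hat,
        "b": b_hat,
        "direct": direct_hat,
        "indirect": a_hat * b_hat,
        "total": total_hat,
    }


if __name__ == "__main__":
    for name, value in mediation_estimates(*simulate()).items():
        print(f"{name}: {value:.3f}")
```

In this sketch the indirect effect is the product of the two path estimates, and for ordinary least squares fitted on the same sample the total effect equals the direct effect plus the indirect effect.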

The multisystemic therapy transportability study is not examining the diffusion of the multisystemic therapy treatment model. Classic diffusion questions, such as which organizations will choose to adopt the model and what factors influence that decision, are not being asked. The organizations involved in the study started to implement the model before the study began. Also, in contrast with the Fairweather Lodge experiment, no efforts were made to disseminate information about multisystemic therapy to potential users and persuade them to adopt the model. To the extent that dissemination is occurring, it is therefore "customer driven" rather than "innovator driven." Thus the study is not asking whether a particular dissemination strategy is successful in persuading organizations or individuals to adopt multisystemic therapy. However, results from this study may inform proactive dissemination efforts and their evaluation.

Implications for dissemination of efficacious interventions

The CBTD framework developed by Weisz (4) and the CID framework developed by Hoagwood and colleagues (3) for validating treatments and interventions have the potential to increase the range and utility of empirically supported interventions available to children and families and the pace at which treatment validation and dissemination occur. At each step in the progression from construction and refinement of a treatment through testing of efficacy, levels of effectiveness, cost, and sustainability, decisions must be made about which variables, at which levels, are most relevant. If conditions for the anticipated users of the intervention (clients, practitioners, provider organizations, and funding sources) are considered at the outset of treatment development, some steps in the proposed progression may be taken quickly or even skipped. Of course, not all notions about the factors most relevant to treatment implementation and outcomes can be divined in advance. Such notions emerge from research findings and from practical experience. For example, research may uncover moderators of outcome, and replication studies may succeed or fail.

The following discussion illustrates some issues that investigators will face on the journey from treatment efficacy to "street-ready" status to dissemination. The term street-ready describes interventions that can be implemented in representative service settings and systems.

Differences in conditions

Several treatments for children have already been deemed efficacious, probably efficacious, or effective on the basis of research reviews and consensus documents published by the American Psychological Association, the American Academy of Child and Adolescent Psychiatry, the Practice Guidelines Coalition, and the Office of the Surgeon General. Dimensions on which research-based and clinic-based deployment of treatments may differ can be identified, some on the basis of child psychotherapy research (23,24,25) and others on the basis of research on organizational behavior, technology transfer, and diffusion.

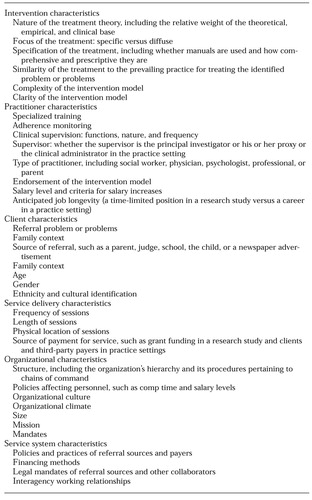

Table 1 presents a preliminary list of dimensions and examples of variables that might be subsumed under each dimension. The dimensions are the intervention itself; the practitioners delivering the intervention, including clinical training, support, and monitoring; the client population; service delivery characteristics; the organization employing the practitioners; and the service system, including referral and reimbursement mechanisms and interagency relations.

Research questions might arise out of anticipated differences on one or more dimensions. Beginning with intervention and practitioner dimensions (Table 1), several variables have been shown to differentiate laboratory-based and community-based child treatment conditions (24,25). Specifically, research treatments tend to be behavioral and problem focused and based on written manuals; clinicians receive specialized training in the experimental intervention, and the fidelity of its implementation is monitored.

For which interventions and under what circumstances is specialized training and monitoring necessary for effective implementation in real-world service settings? Research on organizational behavior suggests that routine human service tasks generally require less training and support than do more complex and individualized ones (27). Even routine tasks in industry, however, are monitored to ensure that the quality of the product received by the last customer or client of the day is equal to that received by the first. Specialized training and monitoring may be less necessary for treatments that are less complex, but there is probably no promising treatment for which specialized training and ongoing support for fidelity of implementation can be eliminated altogether.

This proposition challenges norms and regulations that have governed the work of mental health and social service professionals (28) and thus has implications for dissemination. The literature on diffusion of innovation suggests that the extent to which the innovation is perceived as similar to or different from prevailing practice will influence adoption of the practice. Moreover, individual endorsement of prevailing practice is supported by organizational, financial, and value-based structures. Thus the likelihood that practitioners will not only adopt a new treatment but also implement it as intended may be contingent on perceived differences between the treatment and current practice and the extent to which organizational and fiscal influences support the new practice over the prevailing one. Other features of an innovation, its end users, and the context in which it is introduced may also influence the adoption of innovation (6,29). Discussion of such features is beyond the scope of this paper; however, they have been addressed elsewhere (30).

Multisystemic therapy generally supplants either residential or outpatient treatment in which an eclectic mix of interventions is deployed by a variety of clinicians who meet primarily with the child and occasionally with the child's parent or parents. Three aspects of multisystemic therapy (the service delivery model, the intervention itself, and clinical support and monitoring) contrast sharply with these prevailing practices. First, multisystemic therapy is delivered in a home-based service model that requires a flexible work schedule. Second, it is operationalized in terms of nine treatment principles that integrate key aspects of empirically based treatment approaches for youths and families into an ecological framework. Third, because evidence suggests that therapists' adherence to the multisystemic therapy model predicts outcomes for children (31), intensive clinical supervision and support, along with monitoring of progress in treatment and of barriers to such progress, are ongoing.

In contrast to multisystemic therapy, most evidence-based treatments for children and adolescents have been validated in outpatient service delivery settings. To implement such a treatment, the substance of the practitioner-client interchange during sessions would change, but the location, frequency, and, in some cases, the targets of the treatment may not.

For example, an outpatient clinician who previously spent an hour conducting play therapy with a ten-year-old child with conduct disorder could spend the same hour implementing parent management training with the parent or parents of the child. Ostensibly, the changes required for the practitioner and provider organization to adopt parent management training would be fewer than those required to adopt multisystemic therapy. When multisystemic therapy is adopted, the location, intensity, timing, focus (family and ecology versus the individual child), and content of the intervention change. To adopt parent management training, only two features would change: the focus (on the parents rather than the child) and the content. However, these changes are by no means insignificant. To the extent that parent management training is less different from current outpatient practice, clinicians, the organizations that employ them, and the entities that provide reimbursement for services may be quicker to adopt it than multisystemic therapy. On the other hand, the presence of all the trappings of previous practice may tempt clinicians and organizations to adapt the parent management training model until it more closely resembles previous practice, and treatment fidelity may erode quickly.
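The comparison in the preceding paragraph can be written down as a simple tally. The sketch below encodes only the features named in that paragraph; the dictionary layout and the function name `features_changed` are illustrative assumptions, and counting changed features is a crude proxy that ignores how large each change is.

```python
# Delivery features named in the text as candidates for change on adoption.
FEATURES = ("location", "intensity", "timing", "focus", "content")

# Features the text says would change relative to usual outpatient practice.
CHANGES_ON_ADOPTION = {
    "multisystemic therapy": {"location", "intensity", "timing", "focus", "content"},
    "parent management training": {"focus", "content"},
}


def features_changed(treatment):
    """List the features that change when the treatment is adopted, in FEATURES order."""
    changed = CHANGES_ON_ADOPTION[treatment]
    return [feature for feature in FEATURES if feature in changed]


def features_retained(treatment):
    """List the features of prior practice that stay the same after adoption."""
    changed = CHANGES_ON_ADOPTION[treatment]
    return [feature for feature in FEATURES if feature not in changed]


if __name__ == "__main__":
    for treatment in CHANGES_ON_ADOPTION:
        print(treatment)
        print("  changed: ", features_changed(treatment))
        print("  retained:", features_retained(treatment))
```

The retained features are the "trappings of previous practice" that, as the text notes, may ease adoption while also inviting drift back toward prior practice.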

In the service system domain (Table 1), different methods of calculating the costs of treatment and paying for it in research settings and usual-care settings may influence treatment implementation, fidelity, and outcomes. Grant funding of a research study of effectiveness may require time-limited deployment of an intervention, whereas financing at local clinics may be on a fee-for-service basis. Thus outcomes achieved in four months in the study may take six months to achieve in the clinics. If the clinic sessions occur with the frequency and duration of the study sessions, then the clinic-deployed version of the intervention will be more costly because of the prolonged treatment time. Factors contributing to the prolonged treatment time would have to be examined to determine whether they also influence the clinical effectiveness of the intervention.
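The arithmetic behind this point can be made explicit. The sketch below assumes a fixed number of sessions per month and a fixed cost per session; both figures are hypothetical placeholders, and only the four-month and six-month durations come from the example in the text.

```python
def episode_cost(months, sessions_per_month, cost_per_session):
    """Total cost of one treatment episode under constant session frequency and price."""
    return months * sessions_per_month * cost_per_session


# Hypothetical placeholders, not figures from any study.
SESSIONS_PER_MONTH = 4
COST_PER_SESSION = 100.0

# Durations taken from the example in the text.
study_cost = episode_cost(4, SESSIONS_PER_MONTH, COST_PER_SESSION)
clinic_cost = episode_cost(6, SESSIONS_PER_MONTH, COST_PER_SESSION)

# Proportional cost increase of the clinic-deployed version over the study version.
relative_increase = clinic_cost / study_cost - 1

if __name__ == "__main__":
    print(f"study episode cost:  {study_cost:.2f}")
    print(f"clinic episode cost: {clinic_cost:.2f}")
    print(f"relative increase:   {relative_increase:.0%}")
```

Under these assumptions the relative increase depends only on the ratio of treatment durations (six months versus four), not on the placeholder session frequency or cost.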

For example, it will be important to demonstrate to payers, including clients and third-party payers, that replacing an hour of play therapy for a ten-year-old child with parent management training will yield better outcomes at the same or a lower cost. The cost of training and monitoring associated with implementation of parent management training, if training and monitoring are found necessary in effectiveness studies, would be included in the cost equation.

Differences in questions

The scenarios about the implementation of multisystemic therapy and parent management training illustrate how treatment models might differ from usual-care conditions with respect to the intervention itself, the model of service delivery, and costs. Other efficacious and effective interventions can be similarly evaluated by using the dimensions listed in Table 1. For each treatment, the magnitude of similarity or difference between the conditions that characterized the validation studies and those that characterize real-world service settings and systems can be estimated. Data on similarities and differences are not yet available for many dimensions, precisely because we have not conducted research in ways that assess them. Moreover, not all differences will be equally relevant to the real-world effectiveness and ultimate dissemination of all interventions. Thus some educated guessing will be needed to "individualize" the progression of existing treatments to street-ready status.

As suggested by others (3,4), case studies may be needed to explore differences hypothesized to be salient or similarities presumed to exist. If the training and clinical support of clinicians differentiates conditions in the study from those in practice sites, then case studies would focus on these features. If the service setting—for example, an outpatient clinic, a school, or a public social service agency—differentiates conditions in the study from those in practice sites, then case studies would focus on these variables.

However, case studies cannot provide strong evidence for effectiveness or transportability. Larger-scale quasi-experimental or experimental studies are needed to achieve that goal. For example, the type or amount of specialized training and clinical support provided to community-based clinicians who are implementing a new treatment could be experimentally manipulated, with fidelity and outcomes compared across experimental training conditions. The climate or culture of an organization may influence clinicians' implementation of a new treatment. Conversely, clinicians' implementation of the treatment may have an impact on the organization's climate or culture. Effectiveness studies should therefore include a large enough sample of organizations and clinicians in each organization to detect such influences.

The variables most critical to dissemination may or may not include those central to effectiveness. For example, the impact of an organization's climate on treatment adherence may be of primary interest in a study of the effectiveness of parent management training in outpatient settings. If such a study found that climate was associated with adherence and that adherence, in turn, predicted outcomes, is it reasonable to assume that climate is important to dissemination? Such an assumption might be valid if climate were found to be associated with organizational variables that predict the adoption of innovation. Otherwise, perhaps not.

Suppose that a dissemination study indicated that the presence of a "champion" of parent management training predicted the willingness of local mental health agencies to adopt it. An organization's climate, which was found to predict clinician adherence in the hypothetical effectiveness study of parent management training described above, may or may not be correlated with the presence of a champion or with the champion's ability to cultivate interest in the innovation. Thus one variable at the organizational level—that of the champion—may be important for dissemination but not for effectiveness. If the presence of a champion also predicts adherence to the treatment model, then the variable is relevant both to dissemination and to effectiveness.

Conclusions

The process of moving efficacious treatments to usual-care settings is complex and may require adaptations of treatments, settings, and service systems. Three broad questions can help organize the progression of research on this complex process. What is the intervention? Who can conduct the intervention, under what conditions, and to what ends? Who will conduct the intervention, under what conditions, and to what ends? The "who" is pertinent at the level of the client, the clinician, the organization, and the service system. Transportability may be an issue in the translation of efficacious interventions to effective ones and in the transition from effectiveness to planned dissemination. Some variables relevant to effectiveness may also be relevant to dissemination, but other variables will not.

It may be possible to design studies that simultaneously test questions pertinent to effectiveness, transportability, and dissemination, but a prototype for such designs is not yet available (2). As illustrated in Figure 1, recursive cycles of investigation may be needed to examine conditions necessary for effective practice and for successful dissemination of effective practices and to design subsequent efficacy studies with increased external validity. The design and execution of such investigations may require expertise and resources not needed to design and execute efficacy trials. Experts in child treatment research rarely have expertise in organizational behavior, adult learning, financing of services, or other substantive topics pertinent to questions of transportability and dissemination. Thus collaborations that are truly interdisciplinary are needed to design and implement studies of effectiveness, transportability, and dissemination.

Significant effort must be invested in the development and maintenance of active collaborations with both the leadership and the line staff in the public and private service sectors. In addition, the levels of human and financial resources required to conduct such studies may be considerably higher than those required for efficacy studies. An efficacy study may involve three to six clinicians, all clinically supervised by the principal investigator or project director. An effectiveness study of the same intervention may require several times that number of clinicians, and these clinicians may be working in different types of treatment settings, supervised by agency personnel who must be trained to supervise in the new treatment model. The costs of training the supervisors and clinicians in such a study are significantly greater than the costs of training a few clinicians for an efficacy trial.

Finally, there is a special kind of chicken-and-egg problem that pertains to dissemination. The capacity to train clinicians in a new technology must exist before dissemination can be undertaken. If such training cannot readily be incorporated into traditional academic programs, such as graduate programs, internships, and residencies, then funding for its development is difficult to obtain. Capacity building thus becomes an unpaid and often undone task.

Discussion of potential solutions to such dilemmas is taking place among numerous federal funding agencies, foundations, graduate and residency training directors, behavioral health care organizations, and local and state mental health administrators.

Acknowledgments

The authors thank Heather Ringeisen, Ph.D., for her helpful review. This work was partly supported by grant MH-59138 from the National Institute of Mental Health and by the Annie E. Casey Foundation.

Dr. Schoenwald is associate professor in the department of psychiatry and behavioral sciences and associate director of the Family Services Research Center at the Medical University of South Carolina, 67 President Street, Suite CPP, P.O. Box 250861, Charleston, South Carolina 29425. Dr. Hoagwood is chief of the child and adolescent services branch in the office of the director at the National Institute of Mental Health in Bethesda, Maryland.


Table 1. Dimensions and variables that can be used to compare conditions in research settings and practice settings

1. Report of the Surgeon General's Conference on Children's Mental Health: A National Action Agenda. US Public Health Service, Washington, DC, 2000

2. Norquist G, Lebowitz B, Hyman S: Expanding the frontier of treatment research. Prevention and Treatment 2 (Mar 21, 1999). Available at http://journals.apa.org/prevention/volume2/pre0020001a.html

3. Hoagwood K, Burns BJ, Weisz J: A profitable conjunction: from science to service in children's mental health, in Community-Based Interventions for Youth With Severe Emotional Disturbances. Edited by Burns BJ, Hoagwood K. New York, Oxford University Press, in press

4. Weisz JR: President's message: Lab-clinic differences and what we can do about them: I. the clinic-based treatment development model. Clinical Child Psychology Newsletter 15(1):1-10, 2000

5. Van de Ven AH, Polley DE, Garud R, et al: The Innovation Journey. New York, Oxford University Press, 1999

6. Rogers E: Diffusion of Innovations, 4th ed. New York, Free Press, 1995

7. Backer TE, David SL: Synthesis of behavioral science learnings about technology transfer, in Reviewing the Behavioral Science Knowledge Base on Technology Transfer. Edited by Backer TE, David SL, Soucy G. NIDA monograph 155. National Institute on Drug Abuse, Rockville, Md, 1995

8. Backer TE, Liberman RP, Kuehnel TG: Dissemination and adoption of innovative psychosocial interventions. Journal of Consulting and Clinical Psychology 54:111-118, 1986

9. McGrew JH, Bond GR, Dietzen L, et al: Measuring the fidelity of implementation of a mental health program model. Journal of Consulting and Clinical Psychology 62:670-678, 1994

10. Stein L: Innovating against the current. New Directions for Mental Health Services, no 56:5-23, 1992

11. Teague GB, Bond GR, Drake RE: Program fidelity in assertive community treatment: development and use of a measure. Orthopsychiatry 68:216-232, 1998

12. Fixsen DL, Blase KA: Creating new realities: program development and dissemination. Journal of Applied Behavior Analysis 26:597-615, 1993

13. Wolf M, Kirigin KA, Fixsen DL, et al: The teaching-family model: a case study in data-based program development and refinement (and dragon-wrestling). Journal of Organizational Behavior Management 15:11-68, 1995

14. Adams P: Marketing social change: the case of family preservation. Children and Youth Services Review 16:417-431, 1994

15. Stroul B (ed): Children's Mental Health: Creating Systems of Care in a Changing Society. Baltimore, Brookes, 1996

16. Vinson NB, Brannan AM, Baughman LN, et al: The system-of-care model: implementation in twenty-seven communities. Journal of Emotional and Behavioral Disorders 9:30-41, 2001

17. Fraser MW, Nelson KE, Rivard JC: The effectiveness of family preservation services. Social Work Research 21:138-153, 1997

18. Henegan AM, Horwitz SM, Leventhal JM: Evaluation of intensive family preservation programs: a methodological review. Pediatrics 97:535-542, 1997

19. Bickman L, Summerfelt WT, Noser K: Comparative outcomes of emotionally disturbed children and adolescents in a system of services and usual care. Psychiatric Services 48:1543-1548, 1997

20. Fairweather GW, Sanders DH, Tornatzky LG: Creating Change in Mental Health Organizations. Elmsford, NY, Pergamon, 1974

21. Elliot D: The importance of implementation fidelity. Blueprints News (Office of Juvenile Justice and Delinquency Prevention) 2:1-2, 2001

22. Torrey WC, Drake RE, Dixon L, et al: Implementing evidence-based practices for persons with severe mental illnesses. Psychiatric Services 52:45-50, 2001

23. Hoagwood K, Hibbs E, Brent D, et al: Efficacy and effectiveness in studies of child and adolescent psychotherapy. Journal of Consulting and Clinical Psychology 63:683-687, 1995

24. Weisz JR, Donenberg GR, Han SS, et al: Child and adolescent psychotherapy outcomes in experiments versus clinics: why the disparity? Journal of Abnormal Child Psychology 23:83-106, 1995

25. Weisz JR, Donenberg GR, Han SS, et al: Bridging the gap between laboratory and clinic in child and adolescent psychotherapy. Journal of Consulting and Clinical Psychology 63:688-701, 1995

26. Henggeler SW, Schoenwald SK, Borduin CM, et al: Multisystemic Treatment for Antisocial Behavior in Children and Adolescents. New York, Guilford, 1998

27. Glisson C: Structure and technology in human service organizations, in Human Services as Complex Organizations. Edited by Hasenfeld Y. Newbury Park, Calif, Sage, 1996

28. Bickman L: Practice makes perfect and other myths about mental health services. American Psychologist 54:965-978, 1999

29. Brown BS: Reducing impediments to technology transfer in drug abuse programming, in Reviewing the Behavioral Science Knowledge Base on Technology Transfer. Edited by Backer TE, David SL, Soucy G. NIDA monograph 155. Rockville, Md, National Institute on Drug Abuse, 1995

30. Schoenwald SK, Henggeler SW: Mental health services research and family-based treatment: bridging the gap, in Family Psychology Intervention Science. Edited by Liddle H, Santisteban D, Bray J, et al. Washington, DC, American Psychological Association, in press

31. Schoenwald SK, Henggeler SW, Brondino MJ, et al: Multisystemic therapy: monitoring treatment fidelity. Family Process 39:83-103, 2000