Statistical Benchmarks for Process Measures of Quality of Care for Mental and Substance Use Disorders

Over the past decade, quality measurement has become an integral part of the infrastructure of mental health care ( 1 ). Increasingly, results from quality measurement are used by hospitals ( 2 ), health plans ( 3 ), state mental health authorities ( 4 ), and payers ( 5 ) to encourage accountability among providers (for example, clinicians and hospitals), provide guidance to consumers and policy makers, and facilitate quality improvement.

Process measures constructed from administrative data are commonly used in national and many state quality assessment initiatives. Some have expressed concern that widespread use of these measures is premature, noting that most are based on face validity rather than on rigorous analyses demonstrating links between better performance and improved patient outcomes. Other limitations of these measures have also slowed their implementation. Available measures address relatively few conditions and treatment modalities ( 6 ). The mental health care system lacks consensus among stakeholders on the best measures for common use ( 7 ). There is a limited capacity to adjust comparisons of performance across providers on the basis of differences in the case mix of providers' patient populations ( 8 ). In recent years, however, progress has been made in each of these areas, expanding opportunities for measures to be used to compare performance across providers and to encourage improvements in clinical practice.

Among remaining barriers is the absence of benchmarks to guide quality improvement. Commonly used by managers outside of health care, benchmarks represent the level of performance achieved by the best-performing organization in an industry ( 9 , 10 ). In health care, benchmarks would identify high-performing clinicians, hospitals, and health plans and allow for investigation of contributing practices. Policy makers and oversight organizations have called for increased use of benchmarks in quality improvement ( 11 , 12 ), and some mental health initiatives have embraced the concept of benchmarks ( 13 , 14 , 15 ). However, measurement-based quality improvement activities in health care more commonly rely on comparisons to average results. Such comparisons can be useful to identify outliers from normative practice, but they are less useful for quality improvement activities intended to promote excellent rather than average performance.

Health care organizations have tried establishing standards—numerical performance thresholds—as a basis for interpreting results. Typically lacking an empirical foundation, standards can be arbitrary and set unrealistic expectations. For instance, health care organizations often set uniform standards of 90 percent across measures, despite variability in the degree to which full conformance is achievable or the processes measured are under a provider's control. The National Inventory of Mental Health Quality Measures found that among more than 300 measures, single-site or average results were available for 54 percent ( 1 , 6 ). Performance standards were established for 23 percent. Benchmarks were not available for any process measures assessing quality of care for mental or substance use disorders.

Weissman and colleagues ( 16 , 17 ) pioneered an approach to statistical benchmarks in primary care that uses Bayesian methods to identify high but achievable levels of performance. Their method has several strengths: it is objective and reproducible, and it addresses statistical biases that result from providers who have few patients meeting measure criteria. The impact of these benchmarks on quality improvement has been shown in a randomized controlled trial of audit and feedback of results on five measures of quality of care for diabetes among 70 community physicians. Physicians who received comparisons of their own results with statistical benchmarks subsequently performed better on these measures than physicians who received their results in comparison to the group's average performance ( 18 ).

In this study, we report on the development of statistical benchmarks for 12 quality measures of mental health and substance-related care. Developed by nationally prominent organizations, the quality measures are from a core set selected in 2000 for common use by a diverse panel of stakeholders, including consumers, families, clinicians, payers, purchasers, and oversight organizations ( 19 ). We applied these measures to assess quality of care for Medicaid beneficiaries in six states using administrative claims data from 1994 and 1995. For each measure, we calculated a statistical benchmark and compared it with the mean result and with the result achieved by the provider at the 90th percentile, evaluating its utility for informing quality improvement.

Methods

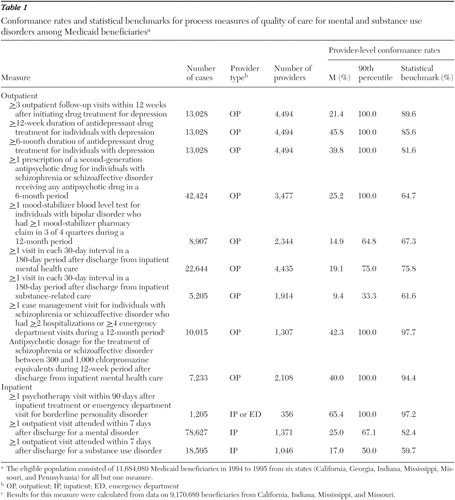

Quality measures

Twenty-eight measures assessing clinical processes (including prevention, access, assessment, treatment, continuity, coordination, and safety) were selected for a core measure set by a diverse panel of stakeholders. Selection was based on a formal consensus process guided by a conceptual framework, empirical data, and ratings of meaningfulness, feasibility, and actionability ( 19 ). Ten of the 28 measures could be constructed from administrative data and were included in this study. Two of these measures were each split into separate versions for mental disorders and for substance use disorders, resulting in 12 measures for analysis. The clinical rationale, evidence base, and source of these 12 measures are described below, and measure specifications are summarized in Table 1 . Detailed information on these and the other 18 core measures has been published elsewhere ( 1 , 19 ).


Antidepressant medication management. These three Health Plan Employer Data and Information Set (HEDIS) measures are used by the National Committee for Quality Assurance (NCQA) in health plan accreditation ( 20 ). The first assesses the proportion of patients initiating an antidepressant medication for depression who complete a 12-week acute treatment phase, and the second examines the proportion who complete a six-month continuation phase. Research studies have found that completing the acute phase increases the likelihood of remission and that completing the continuation phase reduces the likelihood of relapse. The third measure assesses the proportion of patients who attend three clinical visits during the acute treatment phase, a standard supported by expert consensus but not research evidence ( 21 ).

Second-generation antipsychotic use for schizophrenia. Developed by the National Association of State Mental Health Program Directors, this measure examines the proportion of patients with schizophrenia or schizoaffective disorder treated with an antipsychotic drug who receive a second-generation antipsychotic ( 22 ). Most but not all practice guidelines have recommended that second-generation drugs should be used as first-line agents ( 23 ). However, the evidence on the effectiveness and side-effect profile of these agents continues to evolve.

Blood level monitoring with mood stabilizers. Originally developed to evaluate care for Pennsylvania Medicaid beneficiaries, this measure assesses the proportion of patients treated with a mood stabilizer for bipolar disorder whose blood levels are monitored in accordance with clinical practice guidelines ( 24 ). Lithium, valproate, and carbamazepine are most effective when blood concentrations fall within specified ranges. Lower levels are associated with increased risks of relapse; higher levels typically cause increased side effects without therapeutic benefit ( 25 ).

Continuity of outpatient visits. Developed to assess care for patients of the Department of Veterans Affairs, these two measures assess the proportion of patients hospitalized for either a mental disorder or a substance use disorder who attend a follow-up visit during each 30-day period for six months after discharge ( 26 ). Most individuals who receive inpatient psychiatric or substance-related care require follow-up ambulatory care to promote further recovery and prevent relapse. Research studies of the relationship between aftercare and rehospitalization have shown mixed results ( 27 , 28 ).
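The continuity criterion can be made concrete with a short sketch. It assumes, for illustration only, that day 0 is the discharge date, that the six 30-day periods cover days 1 to 180 after discharge, and that any outpatient visit date counts; the developers' actual specifications (for example, which visit codes qualify) are not reproduced here, and the function name is hypothetical.

```python
from datetime import date, timedelta
from typing import Iterable

def has_monthly_followup(discharge: date, visits: Iterable[date],
                         n_periods: int = 6, period_days: int = 30) -> bool:
    """Hypothetical check: True if at least one visit falls in every 30-day
    period after discharge (days 1-30, 31-60, ..., 151-180)."""
    covered = [False] * n_periods
    for visit in visits:
        offset = (visit - discharge).days  # days elapsed since discharge
        if 1 <= offset <= n_periods * period_days:
            covered[(offset - 1) // period_days] = True
    return all(covered)

discharge = date(1995, 1, 1)
monthly = [discharge + timedelta(days=d) for d in (10, 40, 70, 100, 130, 160)]
print(has_monthly_followup(discharge, monthly))      # True
print(has_monthly_followup(discharge, monthly[:5]))  # False: no visit in days 151-180
```

Because every period must be covered, a single missed month is enough to place a patient outside the numerator, which is one reason this measure can yield low conformance rates.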

Case management among high-risk patients with schizophrenia. Derived from the Schizophrenia Patient Outcomes Research Team (PORT) recommendations, this measure examines the proportion of patients with schizophrenia or schizoaffective disorder at high risk of relapse who receive case management services ( 29 ). Research studies have found strong evidence of effectiveness for assertive community treatment and other intensive case management models, but evidence is not conclusive regarding the effectiveness of nonintensive case management ( 30 ).

Antipsychotic drug dosing for schizophrenia. Adapted from the Schizophrenia PORT recommendations, this measure assesses the proportion of patients with schizophrenia or schizoaffective disorder treated with antipsychotic medication who receive a daily dosage between 300 and 1,000 mg chlorpromazine equivalents ( 31 ). Controlled trials indicate that, on average, antipsychotic dosages within this range provide the best balance between efficacy and side effects ( 32 ).

Psychotherapy after hospital care for borderline personality disorder. Developed by the American Psychiatric Association, this measure assesses whether patients receive psychotherapy after an inpatient stay or emergency department visit for borderline personality disorder ( 33 ). Randomized controlled trials have established the efficacy of specific types of therapy for this disorder; case-based and observational studies provide support for other types of therapy to varying extents ( 34 ).

Timely follow-up after hospitalization. Also used in health plan accreditation, these two measures assess the proportion of patients hospitalized for a mental disorder or substance use disorder who receive a follow-up visit within seven days of discharge ( 35 ). Scheduling outpatient appointments close to the time of discharge is recommended to support adherence to aftercare. Research is mixed on the association between the timeliness of follow-up care and the likelihood of relapse ( 27 , 28 ).
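Like the other measures, the seven-day follow-up measure is a proportion: discharges form the denominator, and discharges followed by an outpatient visit within seven days form the numerator. The sketch below assumes that a visit on the discharge day itself does not count (days 1 through 7 only); whether a same-day visit qualifies is a specification detail not reproduced here, so treat the window, the record layout, and the function name as illustrative assumptions.

```python
from datetime import date
from typing import List, Tuple

# Each record: (discharge date, outpatient visit dates for that patient).
Discharge = Tuple[date, List[date]]

def seven_day_followup_rate(discharges: List[Discharge], window_days: int = 7) -> float:
    """Share of discharges with a visit 1..window_days days after discharge."""
    if not discharges:
        raise ValueError("empty denominator")
    numerator = sum(
        any(1 <= (visit - discharged).days <= window_days for visit in visits)
        for discharged, visits in discharges
    )
    return numerator / len(discharges)

sample = [
    (date(1995, 3, 1), [date(1995, 3, 5)]),    # day 4: meets criterion
    (date(1995, 3, 1), [date(1995, 3, 20)]),   # day 19: too late
    (date(1995, 4, 10), []),                   # no visit at all
    (date(1995, 4, 10), [date(1995, 4, 17)]),  # day 7: meets criterion
]
print(seven_day_followup_rate(sample))  # 0.5
```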

Data source

State Medicaid Research Files (SMRF) from the Centers for Medicare & Medicaid Services were used to assess mental health care received by Medicaid beneficiaries. These data sets include data on demographic characteristics, enrollment information, and claims for all clinical services and prescription drugs reimbursed by Medicaid. SMRF data from 1994 and 1995 were analyzed for Medicaid beneficiaries in six states: California, Georgia, Indiana, Mississippi, Missouri, and Pennsylvania. These states were chosen on the basis of geographic distribution, data completeness, and lower levels of managed care penetration. Because individuals enrolled in Medicaid managed care plans may not have claims, we excluded these enrollees. We also excluded individuals dually enrolled in Medicare and those who were institutionalized, because SMRF data are incomplete for these populations. For all but one measure, the total eligible sample consisted of 11,684,089 individuals, including 1,256,972 in Pennsylvania, 1,256,428 in Georgia, 7,266,403 in California, 657,426 in Indiana, 510,912 in Mississippi, and 735,948 in Missouri. The measure assessing case management was limited to the 9,170,689 beneficiaries in California, Indiana, Mississippi, and Missouri, which used specific codes to reimburse case management services.
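As a quick consistency check on the sample sizes reported above, the per-state counts can be summed in a few lines of Python. The counts are copied from the text; the case management subset is taken to be the last four states in the order the counts are listed, and the variable names are ours.

```python
# Eligible Medicaid beneficiaries per state, as reported in the text.
eligible_by_state = {
    "Pennsylvania": 1_256_972,
    "Georgia": 1_256_428,
    "California": 7_266_403,
    "Indiana": 657_426,
    "Mississippi": 510_912,
    "Missouri": 735_948,
}

# States that used specific codes to reimburse case management services.
case_management_states = ["California", "Indiana", "Mississippi", "Missouri"]

total_eligible = sum(eligible_by_state.values())
case_management_eligible = sum(eligible_by_state[s] for s in case_management_states)

print(f"{total_eligible:,}")            # 11,684,089
print(f"{case_management_eligible:,}")  # 9,170,689
```

Both totals match the figures given in the text.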

Analysis

Conformance rates were calculated for each measure for all eligible individuals in the sample by using detailed specifications obtained from the developers of the measure (available from the authors upon request). Each measure was constructed as a proportion or rate, with the denominator specification describing the eligible sample and the numerator describing the desired characteristics of care. Measure specifications used ICD-9-CM codes for diagnosis and National Drug Codes for medications. Healthcare Common Procedure Coding System (HCPCS) codes were used for procedures and service use. HCPCS level I codes (also known as Current Procedural Terminology [CPT] codes) and level II codes are used nationwide, and level III codes are specific to each state. Many states use the level III codes to reimburse case management, partial programs, and other mental health services.

Provider-level conformance rates were calculated by using unique patient and provider identification numbers in the SMRF data sets. For measures of inpatient and emergency department care, the providers were hospitals. For measures of outpatient care, providers were individual clinicians or clinicians practicing together in a clinic or group. Mean conformance rates and 90th-percentile results were calculated at the provider level for each measure.
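The provider-level summaries can be sketched as follows: patient records are grouped by a provider identifier, each provider's conformance rate is computed, and the unweighted mean and the 90th percentile are taken across providers. The text does not say which percentile definition was used; the nearest-rank method below is our assumption, and the function names and record layout are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

def provider_rates(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """records: (provider_id, met_numerator) for each patient in the denominator."""
    met: Dict[str, int] = defaultdict(int)
    eligible: Dict[str, int] = defaultdict(int)
    for provider_id, ok in records:
        eligible[provider_id] += 1
        met[provider_id] += int(ok)
    return {p: met[p] / eligible[p] for p in eligible}

def nearest_rank_percentile(values: List[float], pct: int) -> float:
    """Smallest value with at least pct percent of values at or below it."""
    ordered = sorted(values)
    rank = -(-pct * len(ordered) // 100)  # ceil(pct * n / 100) in integer arithmetic
    return ordered[max(rank, 1) - 1]

records = [("A", True), ("B", True), ("C", False),
           ("D", True), ("D", False),
           ("E", True), ("E", True), ("E", False), ("E", False)]
rates = provider_rates(records)
mean_rate = sum(rates.values()) / len(rates)
print(mean_rate)                                          # 0.6
print(nearest_rank_percentile(list(rates.values()), 90))  # 1.0
```

In this toy example, two providers who each treat a single patient at 100 percent are enough to place the 90th-percentile provider at 100 percent, the same pattern the Results describe for seven of the 12 measures.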

Statistical benchmarks were calculated at the provider level by using the methodology developed by Kiefe and colleagues ( 36 ). A statistical benchmark for a quality measure was operationally defined as the level of care received by the 10 percent of patients treated by the best-performing providers, adjusted for the number of patients per provider. The adjustment step is critical, because many providers have only a few patients who meet criteria for a measure, which can skew benchmarks toward the high (for example, 1/1=100 percent) or the low (for example, 0/1=0 percent) end of the distribution. This method adjusts for small denominators in two ways. First, a Bayesian estimator was used to adjust each provider's conformance rate on the basis of the provider's sample size, pulling rates based on very small denominators away from the extremes of 0 and 100 percent. Specifically, an adjusted performance fraction (APF) was calculated for each provider: APF=(x+1)/(d+2), where x is the number of the provider's patients who meet the measure's numerator criteria and d is the total number of the provider's patients for whom the process is appropriate (the measure denominator).
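The APF can be read as the posterior mean of a provider's true conformance rate p under a uniform Beta(1,1) prior, which is what makes it a Bayesian estimator; rates based on few patients are shrunk toward 50 percent, while rates based on many patients barely move.

$$
p \sim \mathrm{Beta}(1,1),\quad x \mid p \sim \mathrm{Binomial}(d,p)
\;\Longrightarrow\;
p \mid x \sim \mathrm{Beta}(x+1,\; d-x+1),
\qquad
\mathrm{APF} = \mathbb{E}[p \mid x] = \frac{x+1}{d+2}
$$

$$
\tfrac{1}{1} \to \tfrac{2}{3} \approx 66.7\%,\qquad
\tfrac{0}{1} \to \tfrac{1}{3} \approx 33.3\%,\qquad
\tfrac{9}{10} \to \tfrac{10}{12} \approx 83.3\%,\qquad
\tfrac{45}{50} \to \tfrac{46}{52} \approx 88.5\%
$$

A provider with 9 of 10 patients in conformance (90 percent) therefore ranks above a provider with 1 of 1 (100 percent).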

Second, an approach using a pared mean (that is, weighted by denominator size) was used to pool results from the top 10 percent of the sample. Providers were ranked in descending order on the basis of their APF. Beginning with the highest-ranked provider, the number of patients in the measure denominator was summed in descending order until a breakpoint of 10 percent of patients was reached. Added to that sum was the total number of patients below the breakpoint who were part of the caseload of providers who had the same conformance rate as the breakpoint provider. From this summed cohort of patients, a benchmark was calculated as the proportion of patients meeting the numerator criteria for the measure.
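The two steps can be combined in a short Python sketch. Each provider is represented as a pair (x, d), and top_share is the 10 percent breakpoint. The function names, the use of exact fractions to detect ties, and our reading of "the same conformance rate as the breakpoint provider" as an equal APF are assumptions made for illustration, not the published implementation.

```python
from fractions import Fraction
from typing import List, Tuple

def apf(x: int, d: int) -> Fraction:
    """Adjusted performance fraction (x + 1) / (d + 2), kept exact for tie checks."""
    return Fraction(x + 1, d + 2)

def achievable_benchmark(providers: List[Tuple[int, int]], top_share: float = 0.10) -> float:
    """providers: (x, d) per provider, where x = patients meeting the numerator
    and d = patients in the denominator. Returns the pooled rate among the
    top-ranked providers whose patients make up at least top_share of all patients."""
    total = sum(d for _, d in providers)
    if total == 0:
        raise ValueError("no patients in the denominator")
    ranked = sorted(providers, key=lambda p: apf(*p), reverse=True)

    # Step 1: add providers in descending APF order until the breakpoint is reached.
    included: List[Tuple[int, int]] = []
    covered = 0
    for x, d in ranked:
        included.append((x, d))
        covered += d
        if covered >= top_share * total:
            break
    breakpoint_apf = apf(*included[-1])

    # Step 2: also include lower-listed providers tied with the breakpoint provider.
    included += [p for p in ranked[len(included):] if apf(*p) == breakpoint_apf]

    # Pooled (patient-weighted) conformance rate across the included providers.
    return sum(x for x, _ in included) / sum(d for _, d in included)

providers = [(8, 10), (2, 2), (1, 1), (7, 10), (5, 10)]
print(round(achievable_benchmark(providers), 3))  # 0.833
```

In this example the provider with 1 of 1 patients (a raw rate of 100 percent) falls below the cut, while the provider with 2 of 2 is included only because its APF (3/4) ties that of the breakpoint provider; including ties also makes the result independent of how tied providers happen to be ordered.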

Results

The eligible sample and number of providers varied widely on the basis of each measure's inclusion criteria ( Table 1 ). Across the nine measures assessing outpatient care, the eligible sample ranged from 5,205 to 42,424 patients. The number of providers treating these patients ranged from 1,307 to 4,494. Among the three hospital measures, the sample ranged from 1,205 patients receiving care in 356 hospitals to 78,627 patients treated in 1,371 hospitals.

Average performance did not reach a conformance rate of 50 percent on any measure of outpatient care. The mean conformance rate was lowest for the measure of continuity of care for substance use disorders (9.4 percent) and highest for the measure of acute-phase antidepressant adherence (45.8 percent). Among the hospital measures, average performance was lowest on the measure of follow-up care within seven days of discharge after hospitalization for a substance use disorder (17.0 percent) and highest on the measure of psychotherapy after hospital care for borderline personality disorder (65.4 percent).

For seven of the 12 measures, the performance achieved by the provider at the 90th percentile was 100 percent. The influence of small caseloads on these results can be seen in the following example. Among 4,494 providers who treated one or more patients meeting denominator criteria for acute-phase antidepressant adherence, 1,415 providers had a conformance rate of 100 percent. However, 83.9 percent of these providers treated only one such patient. Statistical benchmarks showed greater variability, ranging from 59.7 percent for the measure of timely follow-up care after hospitalization for substance use disorder to 97.7 percent for the measure of case management use among high-risk patients with schizophrenia. Detailed results are shown in Table 1 .
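The arithmetic behind the acute-phase example shows why the 90th-percentile provider sits at 100 percent. The counts are taken from the text; because 83.9 percent is a rounded figure, the single-patient count is approximate, and the variable names are ours.

```python
providers_total = 4_494       # providers with at least one eligible patient
providers_at_100 = 1_415      # providers with a 100 percent conformance rate
share_single_patient = 0.839  # share of those 1,415 treating only one eligible patient

share_at_100 = providers_at_100 / providers_total
single_patient_at_100 = round(providers_at_100 * share_single_patient)  # approximate

print(f"{share_at_100:.1%}")  # 31.5%
print(single_patient_at_100)  # 1187
# Because more than 10 percent of providers are at 100 percent, the provider at the
# 90th percentile (ranked from lowest to highest rate) also has a 100 percent rate.
```

Roughly 1,187 of the 1,415 providers at 100 percent reached that rate on the basis of a single patient.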

Discussion

Collectively, the mean results for these 12 measures provide a snapshot of the quality of mental health and substance-related care for Medicaid beneficiaries. They examine diverse processes of care across several clinical disorders in six states. Overall, the performance depicted by these results is poor, suggesting ample opportunity for more in-depth evaluation and, most likely, for improving care. These findings are consistent with other reports of quality of care received by Medicaid patients ( 24 , 37 , 38 ). Medicaid beneficiaries have disproportionately low incomes, and a large proportion of beneficiaries are from racial or ethnic minority groups, characteristics associated with receiving poor quality of care ( 39 , 40 ).

These findings also demonstrate the limitations of mean results as guidance for providers seeking to improve quality of care. Comparative data can provide guidance to clinicians and managers seeking to interpret the significance of their results in the context of what others have achieved in similar populations. However, mean results—in the case of these 12 measures, ranging from 9.4 to 65.4 percent—give little indication of what level of care is desirable or achievable. Comparisons to these results may motivate improvement among poorly performing providers but provide little guidance for those at or above the mean.

In contrast, comparison to statistical benchmarks provides a higher threshold: an achievable level of excellence. Among the 12 measures in this study, benchmarks ranged from 59.7 to 97.7 percent. In each case, the benchmark was at least 30 percentage points higher than the mean result, providing a meaningful improvement goal for most providers, including many of those with above-average performance.

Contrary to the common practice of establishing a single performance standard (for example, 90 percent conformance) across all measures in a set, the variation among benchmark results makes clear that one standard does not fit all measures. Five of the 12 benchmarks fall below 80 percent, four fall between 80 and 90 percent, and three fall between 90 and 100 percent.

This variability occurs because process measures differ not only in provider performance but also in the degree to which performance is under the provider's control. For example, hospitals can achieve a 100 percent rate on a measure of the proportion of patients hospitalized for psychiatric care who receive a documented mental status exam each day, a process that is fully under the provider's control. On the other hand, other commonly measured processes, such as antidepressant medication adherence, are influenced by both the provider and the patient. Clinicians may be able to influence adherence through patient education and timely attention to side effects and nonresponse ( 41 ), but other factors affecting adherence (such as patient preference, financial barriers, and functional limitations associated with depression) are outside clinicians' control. In such cases, providers achieving average performance (45.8 percent for the adherence measure) may conclude that this is the best that could be expected. In contrast, a benchmark can suggest an achievable level of excellent performance (in this case, 85.6 percent).

Our results illustrate a drawback of a simpler alternative to statistical benchmarks: identifying the performance achieved by the uppermost quartile or decile of providers. In a large sample, many providers will have only a few patients meeting a measure's inclusion criteria. Providers with a small number of patients skew percentile-based benchmarks toward the high or low end of the distribution, making them less meaningful as quality improvement goals. The 90th-percentile result was 100 percent for seven of the 12 measures in our study, driven by a large cohort of providers with only one qualifying patient. One could eliminate from the sample all providers with fewer than a threshold number of cases, but this discards data and raises other problems, particularly when dealing with a smaller sample of providers. The benchmark method we employed adjusts for the small-number effect without a loss of information.

The use of benchmarks does not obviate the need for case-mix adjustment. When results on a measure are influenced by clinical and demographic compositions of patient populations, statistical adjustment may be needed to ensure fair comparisons among providers or fair comparison to a benchmark. Each quality measure addressed here presents different issues with regard to case-mix adjustment, including whether it is needed and which patient characteristics should be adjusted for. Elsewhere we review the status of case-mix adjustment in mental health care ( 8 ) and the development of adjustment models for specific quality measures ( 1 ). Case-mix adjustment is gradually becoming available for a wider array of measures and populations. As these applications expand, the utility of benchmarks will increase as well.

Limitations to this study include the age of the Medicaid data, because clinical practice may have changed during the intervening decade. However, results for some of these measures have been examined over the ten-year period, and significant changes have not been observed. Our mean results from 1994 to 1995 on the HEDIS measures are quite similar to NCQA's 2003 Medicaid results ( 37 , 42 ). Over the past decade, there has been a marked decrease in the length of inpatient stays and growing use of intermediate levels of care, such as partial programs and intensive outpatient programs. Some measures—for example, the HEDIS measure of continuity of care—have specifications that account for use of intermediate levels of care. Nonetheless, results on other measures may have been influenced by temporal trends. Studies using contemporaneous data will be needed to update our results.

As noted earlier, the use of these measures for quality assessment remains controversial in some quarters. First, fewer than half the measures proposed for mental health care examine clinical processes that are supported by evidence from research studies ( 1 , 19 ). Moreover, this evidence base may change over time. For example, as data have continued to emerge on the effectiveness and side effects of second-generation antipsychotics, assessments of their value relative to traditional agents have shifted.

Second, selection of measures for use in national assessment activities has, for the most part, rested on consideration of the measures' face validity rather than on rigorous analysis of their predictive validity or association with improved patient outcomes. Such analyses are available for few measures of mental health care ( 1 , 19 ). Third, the measures used in this study rely on administrative data, which are commonly used for quality measurement because they are relatively inexpensive, are reasonably accurate, and use standardized codes. However, administrative data rely on clinician diagnoses rather than structured diagnostic interviews and lack clinical detail, limiting what can be evaluated ( 8 , 43 ). Additional research is needed on measure validity as well as on data accuracy and the adequacy of current measure specifications.

Finally, process measures are but one method of quality assessment and are ideally used in conjunction with other methods, such as assessment of patient perspectives of care, fidelity to empirically based interventions, and clinical outcomes resulting from treatment. The strengths and weaknesses of these approaches tend to complement one another and, collectively, provide a more robust armamentarium for evaluating quality of care ( 44 ).

Conclusions

Despite their limitations, quality measures are increasingly used and provide a potentially powerful tool for improving mental health care. Their utility will further increase as case-mix adjustment becomes more sophisticated, health care systems adopt common measures and specifications, and electronic medical records make clinical information more readily accessible. Benchmarks have the potential to enhance measurement-based quality improvement by identifying levels of high performance that are potentially achievable. In identifying top-performing providers, benchmarks may also provide a first step toward determining practices that contribute to excellent care. In general medical care, incorporation of benchmarks into quality improvement activities has been shown to lead to a greater magnitude of improvement ( 18 ). Further studies will be needed to determine whether and under what circumstances similar gains can be achieved in mental health care.

Acknowledgments

This work was supported by grant R01-HS-10303 from the Agency for Healthcare Research and Quality, grant K08-MH-001477 from the National Institute of Mental Health, and by the Substance Abuse and Mental Health Services Administration.

1. Hermann RC: Improving Mental Healthcare: A Guide to Measurement-Based Quality Improvement. Washington, DC, American Psychiatric Press, 2005

2. 2004 Comprehensive Accreditation Manual for Hospitals (CAMH). Oakbrook Terrace, Ill, Joint Commission on Accreditation of Healthcare Organizations, 2004

3. Health Plan Employer Data and Information Results, 2000-01. Washington, DC, National Committee for Quality Assurance, 2002

4. Implementation of the NASMHPD Framework of Mental Health Performance Measures by States to Measure Community Performance: 2001, Vol 02-05. Alexandria, Va, National Association of State Mental Health Program Directors Research Institute, 2002

5. FY99 Performance Standards. Boston, Massachusetts Behavioral Health Partnership, 1999

6. Hermann RC, Leff HS, Palmer RH, et al: Quality measures for mental health care: results from a national inventory. Medical Care Research and Review 57:135-154, 2000

7. Hermann RC, Palmer RH: Common ground: a framework for selecting core quality measures. Psychiatric Services 53:281-287, 2002

8. Hermann RC: Risk adjustment for mental health care, in Risk Adjustment for Measuring Health Care Outcomes. Edited by Iezzoni LI. Chicago, Health Administration Press, 2003

9. Berkey T: Benchmarking in health care: turning challenges into success. Joint Commission Journal on Quality Improvement 20:277-284, 1994

10. Hermann RC, Provost SE: Interpreting measurement data for quality improvement: means, norms, benchmarks, and standards. Psychiatric Services 54:655-657, 2003

11. Standards and Guidelines for the Accreditation of MBHOs Effective July 1, 2003. Washington, DC, National Committee for Quality Assurance, 2002

12. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC, National Academy Press, 2001

13. Nechasek SL, Perlman SB: Lessons learned from the Children's Mental Health Benchmarking Project. Washington, DC, National Conference on Mental Health Statistics, May 30, 2001

14. Sluyter G, Barnette J: Application of total quality management to mental health: a benchmark case study. Journal of Mental Health Administration 22:278-285, 1995

15. Kramer TL, Glazer WN: Our quest for excellence in behavioral health care. Psychiatric Services 52:157-159, 2001

16. Weissman NW, Allison JJ, Kiefe CI, et al: Achievable benchmarks of care: the ABCs of benchmarking. Journal of Evaluation in Clinical Practice 5:269-281, 1999

17. Allison J, Kiefe C, Weissman N: Can data-driven benchmarks be used to set the goals of healthy people 2010? American Journal of Public Health 89:61-64, 1999

18. Kiefe C, Allison J, Williams O, et al: Improving quality improvement using achievable benchmarks for physician feedback: a randomized controlled trial. JAMA 285:2871-2879, 2001

19. Hermann RC, Palmer RH, Leff HS, et al: Achieving consensus across diverse stakeholders on quality measures for mental healthcare. Medical Care 42:1246-1253, 2004

20. Health Plan Employer Data and Information Set (HEDIS 2000). Washington, DC, National Committee for Quality Assurance, 1999

21. Depression in Primary Care: Vol 2: Treatment of Major Depression Clinical Practice Guideline no 5. AHCPR pub no 93-0551. Rockville, Md, US Department of Health and Human Services, 1993

22. Report of the Technical Workgroup of the NASMHPD President's Task Force on Performance Indicators. Alexandria, Va, National Association of State Mental Health Program Directors, 1997

23. Osser DN, Sigadel R: Short-term inpatient pharmacotherapy of schizophrenia. Harvard Review of Psychiatry 9:89-104, 2001

24. Marcus S, Olfson M, Pincus H, et al: Therapeutic drug monitoring of mood stabilizers in Medicaid patients with bipolar disorder. American Journal of Psychiatry 156:1014-1018, 1999

25. Practice guideline for the treatment of patients with bipolar disorder: American Psychiatric Association. American Journal of Psychiatry 151(Dec suppl):1-36, 1994

26. Leslie D, Rosenheck R: Comparing quality of mental health care for public-sector and privately insured populations. Psychiatric Services 51:650-655, 2000

27. Huff ED: Outpatient utilization patterns and quality outcomes after first acute episode of mental health hospitalization: is some better than none, and is more service associated with better outcomes? Evaluation and the Health Professions 23:441-456, 2000

28. Foster EM: Do aftercare services reduce inpatient psychiatric readmissions? Health Services Research 34:715-736, 1999

29. Report of the American Psychiatric Association Task Force on Quality Indicators. Washington, DC, American Psychiatric Association, 1998

30. Mueser KT, Bond GR, Drake RE, et al: Models of community care for severe mental illness: a review of research on case management. Schizophrenia Bulletin 24:37-74, 1998

31. Lehman AF, Steinwachs DM: Patterns of usual care for schizophrenia: initial results from the Schizophrenia Patient Outcomes Research Team (PORT) client survey. Schizophrenia Bulletin 24:11-20, 1998

32. Dixon L, Lehman A, Levine J: Conventional antipsychotic medications for schizophrenia. Schizophrenia Bulletin 21:567-577, 1995

33. Report of the American Psychiatric Association Task Force on Quality Indicators. Washington, DC, American Psychiatric Association, 1999

34. Practice guideline for the treatment of patients with borderline personality disorder: American Psychiatric Association. American Journal of Psychiatry 158(Oct suppl):1-52, 2001

35. Health Plan Employer Data and Information Set (HEDIS) 2000: Vol 2. Washington, DC, National Committee for Quality Assurance, 2000

36. Kiefe CI, Weissman NW, Allison JJ, et al: Identifying achievable benchmarks of care: concepts and methodology. International Journal for Quality in Health Care 10:443-447, 1998

37. State of Health Care Quality 2004: Industry Trends and Analysis. Washington, DC, National Committee for Quality Assurance, 2004

38. Yanos PT, Crystal S, Kumar R, et al: Characteristics and service use patterns of nonelderly Medicare beneficiaries with schizophrenia. Psychiatric Services 52:1644-1650, 2001

39. Mental Health: Culture, Race, and Ethnicity: A Supplement to Mental Health: A Report of the Surgeon General. Washington, DC, Department of Health and Human Services, US Public Health Service, 2001

40. Mental Health: A Report of the Surgeon General. Washington, DC, Department of Health and Human Services, US Public Health Service, 1999

41. Chen A: Noncompliance in community psychiatry: a review of clinical interventions. Hospital and Community Psychiatry 42:282-287, 1991

42. Druss BG: A review of HEDIS measures and performance for mental disorders. Managed Care 13:48-51, 2004

43. Garnick D, Hendricks A, Comstock C: Measuring quality of care: fundamental information from administrative data sets. International Journal for Quality in Health Care 6:163-177, 1994

44. Hermann RC: Linking outcome measurement with process measurement for quality improvement, in Outcome Measurement in Psychiatry: A Critical Review. Edited by IsHak W, Burt T, Sederer L. Washington, DC, American Psychiatric Press, 2002