Field Test of a Tool for Level-of-Care Decisions in Community Mental Health Systems

Abstract

OBJECTIVE: Tools for supporting decisions about placing clients in different levels of mental health care can facilitate several important functions, such as structuring service allocation, providing standards for quality assurance and concurrent review, designing service and benefit packages, and planning for resource needs. This paper describes a level-of-care decision-support tool and tests its reliability and concurrent validity. METHODS: Panels of clinical managers and administrators of mental health centers developed a decision-support tool with eight levels of care and a computerized decision-tree algorithm for level-of-care placement. A random sample of 1,034 adults from one county mental health system were assessed by their case managers using a package that included variables in the eight-level model, but not the algorithm itself. Other variables were included to assess the tool's concurrent validity. RESULTS: Level-of-care placements based on the decision-support tool showed strong interrater reliability. Concurrent validity was demonstrated by significant relationships in the expected direction between level of care and psychiatric hospitalizations, arrests, residential moves, homeless periods, residential independence, lack of work activity, medication noncompliance, and functioning as measured by the Global Assessment of Functioning Scale. CONCLUSIONS: Preliminary empirical evidence indicates that the level-of-care decision-support tool is reliable and valid. It could be further refined by incorporating the impact of social supports, collateral services, current mental health services, and motivation for services.

Levels of care are used implicitly whenever service providers design treatment plans to meet clients' needs. Although service providers' decisions about level of care are highly flexible, they are also idiosyncratic, resulting in wide variability of services provided to clients with similar needs (1). Increasing the predictability of level-of-care decisions through the use of decision-support tools (2) can help both service providers and program planners, particularly under managed care.

Support tools for level-of-care decisions can facilitate appropriate and equitable allocation of services based on a client's clinical needs, enhancing client-treatment matching. Development of decision-support tools for specific clinical populations can also guide the creation of service or benefit packages required for managed care (3). Standards for making decisions about the appropriate level of care can support concurrent-review decisions and quality assurance under managed care systems that otherwise may create incentives for restricting services (4-7). Placement of clients in a level of care based on standardized decision-support tools can also facilitate rational planning for resource needs. Overall, level-of-care decision-support tools can help overcome the wide variations in practice patterns and quality that can detract from effective care (8,9).

Few tools exist for guiding level-of-care decisions for mental health outpatient clients. In some studies, cluster analysis is used to first identify case-mix group characteristics in a population or sample (10,11). Level-of-care placement criteria and service programs are then designed for these "discovered" case-mix groups. Cluster-analytic level-of-care models generate relatively homogeneous case-mix groups. However, their purely inductive, empirical method can be insensitive to differences among clients that are clinically but not statistically meaningful (Uehara E, unpublished data, 1995). An a priori clinical framework for identifying case-mix groups and an algorithm for level-of-care placements can model complex clinical decision making more closely while retaining many of the same strengths as cluster-based approaches.

For the clinically based level-of-care decision-support models available (12-17), empirical testing has been limited to the relationship of level-of-care placement to service utilization. However, past service utilization is an insufficient indicator of validity, because it further institutionalizes system inadequacies, such as lack of funding and inflexible funding streams, that restrict service providers from making service decisions based on clinical need.

The purposes of this paper are to describe a level-of-care decision-support tool based on clinical need and to show how its reliability and validity were tested independently of past service utilization. Specifically, we present the components of the decision-support tool, including the level-of-care model and a computerized algorithm for level-of-care placement. We then describe how interrater reliability of the tool's algorithm-based level-of-care placement was tested. Concurrent validity of the tool's placement was examined in relation to independent indicators of clinical severity, such as psychiatric hospitalizations (18), dependent residential status and instability (number of moves) (11), homelessness (11), arrests (18,19), lack of work activity (12), medication noncompliance (20), and score on the Global Assessment of Functioning Scale (GAF) (21). We hypothesized that placement in a more intensive (higher) level of care would be related to a client's increased likelihood of experiencing indicators of clinical severity and to a lower GAF score.

Finally, we examined the relationship of the tool's level-of-care placement to sociodemographic characteristics (age, gender, and ethnicity), which are key potential sources of bias to validity. Because sociodemographic characteristics were not included in the algorithm, level-of-care placement decisions should be, in theory, blind to these characteristics. Thus we predicted that level-of-care placement would not be significantly related to sociodemographic characteristics.

Methods

The level-of-care decision-support tool has two components. The first, the level-of-care model, includes descriptions of the levels of care based on typical service intensity and goals. The second component is an algorithm for placement in a level of care.

The level-of-care model

Over a two-year period (1992 to 1994), the authors guided three panels in developing the decision-support tool. Panel members were clinical managers and administrators from 12 community mental health centers in a large county in Washington State, together with county-level administrators. The concept of a level-of-care decision-support tool was presented to the panels as a general guide to placing clients in the minimum appropriate level of care. This guide could be overridden for clinically sound reasons not accounted for by the tool's level-of-care algorithm. With these caveats, the panels delineated levels of care along three dimensions: need for continuity of care (case management) or 24-hour supervision, range of service hours, and principal goals.

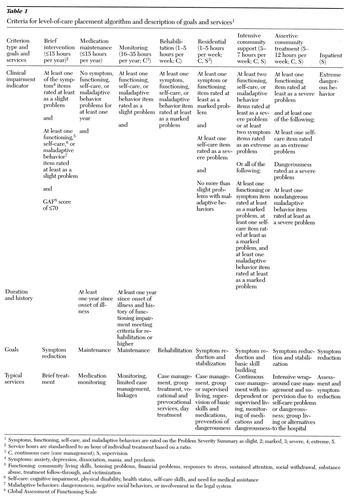

Eight levels of care were defined. As Table 1 shows, they are brief intervention, medication maintenance, monitoring, rehabilitation, residential care, intensive community support, assertive community treatment, and inpatient services. The level-of-care model is primarily focused on outpatient care. An inpatient level was nevertheless included to help identify clients for whom outpatient treatment may not provide sufficient support. The number of levels and the principal goals of intervention for the levels are consistent with other level-of-care models (12-17).

The algorithm for level-of-care placement

Panel members also chose clinical criteria for placement of clients into the eight levels of care. These criteria were used in an algorithm for level-of-care placement. Most criteria were based on research examining factors that differentiate clients with respect to service need or utilization.

The criteria can be grouped into four domains. The first domain is symptom severity, including symptoms of anxiety, depression, dissociation, mania, and psychosis (22,23). The second domain is functioning, which encompasses community living skills (12), housing problems, income and financial problems, response to stress (12,13), sustained attention (13), social withdrawal (12,13), substance abuse (22), treatment compliance (21), and victimization. The third domain is self-care, which includes cognitive impairment (24,25), physical disability, health status (22), self-care skills (22), and need for medical assistance. The fourth domain is maladaptive behavior, including dangerousness (22), negative social behaviors (13), and involvement in the legal system.

A 22-item scale developed by the authors, the Problem Severity Summary (PSS), is used to assess the criteria listed above. Each item has behavioral anchors as well as anchors for the severity ratings. Items are rated from 0 to 5. Ratings of 0 and 1 indicate above-average or average status (that is, the problem is absent). Ratings of 2 through 5 indicate slight, marked, severe, and extreme impairment, respectively. The PSS can be completed by bachelor's-level case managers, and it has shown psychometric promise. Interrater reliability was above .50 for 19 of the individual items, with an intraclass correlation of .61 for the scale total, and concurrent validity was shown for 15 items. Concurrent validity was shown by significant correlations with conceptually related measures. For example, ratings of community living skills were related to GAF scores, and ratings of self-care skills were related to the presence of a physical disability (26).
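The item structure and rating anchors described above can be represented as a small data structure. The sketch below groups the 22 PSS items by the four domains listed earlier and shows that they add up to 22. All of the identifiers are hypothetical. Two further points are assumptions: that 0 means above average and 1 means average, and that the "scale total" is an unweighted sum. The text does not specify either.

```python
from enum import IntEnum

# Hypothetical item identifiers paraphrasing the four domains in the text.
PSS_DOMAINS = {
    "symptoms": ["anxiety", "depression", "dissociation", "mania", "psychosis"],
    "functioning": [
        "community_living_skills", "housing_problems", "financial_problems",
        "response_to_stress", "sustained_attention", "social_withdrawal",
        "substance_abuse", "treatment_compliance", "victimization",
    ],
    "self_care": [
        "cognitive_impairment", "physical_disability", "health_status",
        "self_care_skills", "need_for_medical_assistance",
    ],
    "maladaptive_behavior": [
        "dangerousness", "negative_social_behaviors", "legal_involvement",
    ],
}
ALL_ITEMS = [item for items in PSS_DOMAINS.values() for item in items]


class PSSRating(IntEnum):
    """0-5 severity anchors; the 0 vs 1 ordering is an assumption."""
    ABOVE_AVERAGE = 0
    AVERAGE = 1
    SLIGHT = 2
    MARKED = 3
    SEVERE = 4
    EXTREME = 5


def is_impaired(rating: int) -> bool:
    """Ratings of 2 (slight) or higher mean the problem is present."""
    return PSSRating(rating) >= PSSRating.SLIGHT


def scale_total(ratings: dict[str, int]) -> int:
    """Hypothetical scale total: unweighted sum over all 22 items."""
    missing = set(ALL_ITEMS).difference(ratings)
    if missing:
        raise ValueError(f"missing ratings for: {sorted(missing)}")
    # PSSRating(...) raises ValueError for any rating outside 0-5.
    return sum(int(PSSRating(ratings[item])) for item in ALL_ITEMS)


# The four domains together account for the 22 items described in the text.
assert len(ALL_ITEMS) == 22
```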

As Table 1 shows, in addition to the PSS items, history of functional impairment and time since illness onset are included in the level-of-care assessment package to indicate chronicity of illness (10,13). The GAF score is used as the criterion for entering the brief intervention level.

The criteria are used in a computerized algorithm for level-of-care placement based on decision-tree logic, beginning at the inpatient level. If a client meets the criteria for the inpatient level, the client is placed there. Otherwise, the next set of queries refers to the criteria for assertive community treatment, and so forth. For example, a client who does not meet inpatient criteria but is rated 4 (severe) on both community living skills and dangerousness is placed in assertive community treatment.

Individuals who do not meet placement criteria for inpatient care or assertive community treatment are screened for levels with successively more inclusive criteria. In this way, those meeting the fewest criteria are served at a lower level of care. Panel members designed the criteria to limit the range of individuals placed in brief intervention, residential care, and inpatient treatment. This design was imposed because the tool is intended for a population consisting largely of adults with severe and persistent mental illness, who are generally considered inappropriate for brief intervention. Also, local clinical philosophy emphasizes community-based rather than residential treatment.
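The top-down, first-match placement logic described above can be sketched as an ordered list of rules. Only the assertive community treatment rule mirrors the worked example in the text. Every other threshold is a hypothetical placeholder, not the panels' actual criteria, and so is the GAF cutoff for brief intervention. Returning `None` when no rule matches is also an assumption, because the text does not say what happens in that case.

```python
from typing import Callable, Mapping, Optional

SYMPTOMS = ("anxiety", "depression", "dissociation", "mania", "psychosis")


def rating(a: Mapping[str, float], item: str) -> float:
    """PSS rating for an item; a missing item is treated as 0 (absent)."""
    return a.get(item, 0)


def worst_symptom(a: Mapping[str, float]) -> float:
    return max(rating(a, s) for s in SYMPTOMS)


# Levels ordered from most to least intensive; the first rule that matches wins.
# HYPOTHETICAL thresholds throughout, except the assertive community treatment
# rule, which follows the example in the text (both ratings of 4 or higher).
RULES: list[tuple[str, Callable[[Mapping[str, float]], bool]]] = [
    ("inpatient", lambda a: rating(a, "dangerousness") >= 5),
    ("assertive community treatment",
     lambda a: rating(a, "community_living_skills") >= 4
     and rating(a, "dangerousness") >= 4),
    ("intensive community support", lambda a: worst_symptom(a) >= 4),
    ("residential care",
     lambda a: rating(a, "self_care_skills") >= 4
     and rating(a, "housing_problems") >= 4),
    ("rehabilitation", lambda a: rating(a, "community_living_skills") >= 3),
    ("monitoring", lambda a: worst_symptom(a) >= 3),
    ("medication maintenance", lambda a: worst_symptom(a) >= 2),
    # GAF is the entry criterion for this level; the cutoff of 61 is assumed.
    ("brief intervention", lambda a: a.get("gaf", 0) >= 61),
]


def place(a: Mapping[str, float]) -> Optional[str]:
    """Walk the cascade from inpatient downward and return the first match."""
    for level, meets_criteria in RULES:
        if meets_criteria(a):
            return level
    return None  # no rule matched; left to clinical judgment (assumption)
```

For example, `place({"community_living_skills": 4, "dangerousness": 4})` returns `"assertive community treatment"`, as in the text's example. Keeping the rules in an ordered list separates the panel-defined criteria from the traversal logic, so thresholds can be revised without touching `place`.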

Field-test participants

Two samples were drawn for the study: a random sample of clients (and their associated case managers) to describe level-of-care placement and test its validity, and a random subsample of clients (and their case managers and an additional case manager for each client) to test reliability.

Level-of-care validity sample.

Hypothetical level-of-care placements (computed by the algorithm but not used to assign services) were made for a randomly drawn sample of 14 percent of adults (N=1,034) enrolled in a single county mental health system in Washington State. As Table 2 shows, 50 percent of the clients were women, and 32 percent were from ethnic minority groups. Consistent with the definition of severe and persistent mental illness, most had a diagnosis of a schizophrenia-spectrum disorder (41 percent), bipolar disorder (18.5 percent), or unipolar depression (17.6 percent). The remaining diagnoses included organic disorders (7.2 percent), anxiety disorders (5.1 percent), and personality disorders (2.1 percent). The mean±SD GAF score for the sample was 48.39±15.45, indicating prominent impairment in functioning. Most participants (73 percent) lived in permanent independent settings.

Representativeness of the sample was assessed relative to the adult population served in the county mental health system (N=7,928). The sample was representative in terms of gender and the proportions of clients with bipolar disorder and unipolar depression. However, as Table 2 shows, the county caseload had significantly lower proportions of ethnic minority clients, clients with schizophrenia-spectrum disorders, and clients living in independent settings than the sample did.

The case managers (N=369) of the clients in the sample completed the level-of-care assessments described below. Each case manager assessed one to four clients.

Reliability sample.

Seventy-five clients were randomly selected from the larger study sample to be assessed independently by their primary case manager and an additional case manager who knew the client. The additional case manager was selected by the client's primary case manager.

Instruments

The level-of-care assessment package used by the case managers included level-of-care variables for the criteria in the model but not the algorithm for placement. Most clinical severity indicators (arrests, residential instability, homelessness, medication noncompliance, and hospitalizations) were included in the assessment package. Information about the remaining severity indicators (work activity and dependent residential status) and sociodemographic characteristics was available from the regional mental health information system.

Results

The case managers' level-of-care assessments were entered into the decision-support tool's algorithm. Based on the algorithm, the 1,034 clients in the sample would have been given the following placements:

- brief intervention, 1 percent (N=8)
- medication maintenance, 2 percent (N=20)
- monitoring, 8 percent (N=83)
- rehabilitation, 40 percent (N=413)
- residential care, 6 percent (N=60)
- intensive community support, 31 percent (N=324)
- assertive community treatment, 10 percent (N=106)
- inpatient treatment, 2 percent (N=20)

These proportions were consistent with how the model was conceptualized; that is, rates of placement in brief intervention and residential care were low.
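As a quick arithmetic check on the reported distribution, the placement counts from the text can be summed and converted to rounded percentages. The sketch confirms that the eight counts account for all 1,034 clients and reproduces the whole-number percentages given above.

```python
# Placement counts reported in the text for the 1,034-client sample.
counts = {
    "brief intervention": 8,
    "medication maintenance": 20,
    "monitoring": 83,
    "rehabilitation": 413,
    "residential care": 60,
    "intensive community support": 324,
    "assertive community treatment": 106,
    "inpatient": 20,
}

total = sum(counts.values())
# Round each share to the nearest whole percent, as in the text.
percentages = {level: round(100 * n / total) for level, n in counts.items()}

for level, pct in percentages.items():
    print(f"{level}: {pct} percent (N={counts[level]})")
```

The rounded percentages happen to sum to exactly 100 here. With other counts, rounding can leave the sum at 99 or 101.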

Reliability

The level-of-care assessments from the two case managers for each client in the reliability sample were also entered into the decision-support tool's algorithm to determine level-of-care placements. Interrater reliability for placement was strong (intraclass correlation=.63).
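The paper reports an intraclass correlation of .63 but does not say which ICC form was used. Each client's second rater was chosen separately by that client's primary case manager, so a one-way random-effects, single-rater ICC(1,1) is one plausible choice. The sketch below assumes that form. It also assumes that placements are coded 1 (brief intervention) through 8 (inpatient) and treated as interval scores. Both are assumptions, not the authors' documented method.

```python
def icc_oneway(ratings: list[list[float]]) -> float:
    """ICC(1,1): one-way random-effects, single-rater intraclass correlation.

    ratings[i][j] is rater j's score for subject i (here, a level-of-care
    code from 1 to 8, assumed to be treated as an interval score).
    """
    n = len(ratings)
    if n < 2:
        raise ValueError("need at least 2 subjects")
    k = len(ratings[0])
    if k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("each subject needs the same number (>= 2) of raters")

    grand_mean = sum(map(sum, ratings)) / (n * k)
    subject_means = [sum(row) / k for row in ratings]

    # Between-subject and within-subject sums of squares.
    ss_between = k * sum((m - grand_mean) ** 2 for m in subject_means)
    ss_within = sum(
        (x - m) ** 2 for row, m in zip(ratings, subject_means) for x in row
    )
    ms_between = ss_between / (n - 1)
    ms_within = ss_within / (n * (k - 1))

    denominator = ms_between + (k - 1) * ms_within
    if denominator == 0:
        raise ValueError("ICC is undefined when all ratings are identical")
    return (ms_between - ms_within) / denominator
```

Applied to the reliability sample, `ratings` would have 75 rows of two codes each, one per case manager. A two-way model such as ICC(2,1) would be the alternative if the same pair of raters had assessed every client.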

Concurrent validity

Clinical severity indicators.

Validity of the decision-support tool was tested against clinical severity indicators not included in the algorithm. Residential independence was defined as currently living in a permanent, noncongregate, noncorrectional setting. Residential stability was measured by the number of residential moves within the previous year. The number of moves, arrests, psychiatric hospitalizations, and homelessness episodes over the past year were dichotomized (none versus one or more) due to highly skewed distributions. Work activity was a dichotomous yes-no variable indicating whether the person had worked (full or part time) within the previous six months. Medication noncompliance was a dichotomous yes-no question (current problems versus compliance).

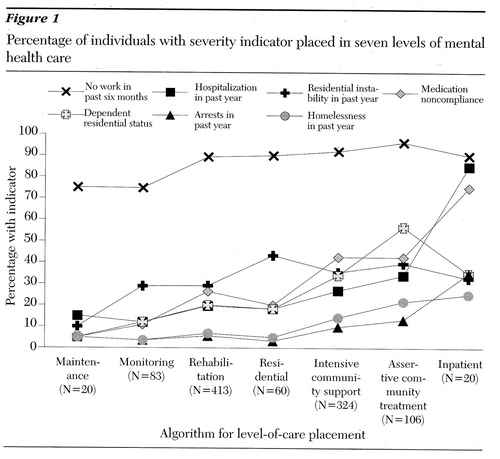

Consistent with expectations, level-of-care placement was significantly related to each indicator of clinical severity. Cramer's V statistics for the association between level-of-care placement and each indicator were all significant:

- hospitalizations (V=.24, p≤.001)
- arrests (V=.18, p≤.001)
- residential moves (V=.13, p=.02)
- homeless periods (V=.19, p≤.001)
- dependent residential status (V=.29, p≤.001)
- lack of work activity (V=.18, p≤.001)
- medication noncompliance (V=.27, p≤.001)

As Figure 1 shows, the proportion of individuals experiencing each severity indicator increased at higher (more intensive) levels of care.
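The analysis above has three steps. Skewed past-year counts are dichotomized (none versus one or more), cross-tabulated against level of care, and summarized with Cramer's V. The sketch below illustrates these steps and is not the authors' code. The helper names are hypothetical, and levels are assumed to be coded 1 to `n_levels`. Cramer's V is a chi-square-based measure of association for nominal tables; its significance comes from the underlying chi-square test with (r-1)(c-1) degrees of freedom. A library routine such as `scipy.stats.chi2_contingency` can supply that p value, but it is omitted here to keep the sketch dependency-free.

```python
from math import sqrt


def any_event(count: int) -> int:
    """Dichotomize a skewed past-year count: 0 = none, 1 = one or more."""
    return 1 if count >= 1 else 0


def crosstab(levels: list[int], counts: list[int], n_levels: int) -> list[list[int]]:
    """Level x (none, one or more) table; levels are coded 1..n_levels."""
    table = [[0, 0] for _ in range(n_levels)]
    for level, count in zip(levels, counts):
        table[level - 1][any_event(count)] += 1
    return table


def cramers_v(table: list[list[int]]) -> tuple[float, float]:
    """Pearson chi-square and Cramer's V for an r x c table of counts.

    Every row and column total must be nonzero; drop empty levels first.
    """
    r, c = len(table), len(table[0])
    row_tot = [sum(row) for row in table]
    col_tot = [sum(table[i][j] for i in range(r)) for j in range(c)]
    n = sum(row_tot)
    chi2 = 0.0
    for i in range(r):
        for j in range(c):
            expected = row_tot[i] * col_tot[j] / n
            chi2 += (table[i][j] - expected) ** 2 / expected
    v = sqrt(chi2 / (n * (min(r, c) - 1)))
    return chi2, v
```

With a dichotomized indicator, min(r, c) - 1 equals 1, so V reduces to the square root of chi-square divided by N. V on its own is unsigned, which is why the direction of the relationship is read from the percentages in Figure 1 rather than from V.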

Discriminant function analysis indicated adequate overall goodness of fit. It revealed a single significant function (χ²=61.50, df=30, p<.001) with a canonical correlation of .43. The brief intervention level of care is not shown in Figure 1 because only eight clients were placed in this level. With so few clients, the percentages would have been skewed disproportionately by the scores of two individuals.

The relationship between the level-of-care placement and the GAF score was also significant (r=-.61, p≤.001), indicating that higher functioning was associated with placement in a less intensive (lower) level of care, as predicted. The brief intervention level was not included in this analysis because the GAF score is one of the decision criteria for this level.

Sociodemographic characteristics.

As predicted, chi-square analyses demonstrated that placement was not significantly related to gender or ethnicity. However, placement was significantly related to age (χ²=60.6, df=28, p<.001). Closer examination of the data suggests that this effect is largely accounted for by older adults (age 60 and older), who were disproportionately placed in assertive community treatment. This effect could be expected because one of the decision criteria for this level of care is high self-care needs, including physical impairment and health problems. Such needs generally occur more frequently among older adults.

Discussion and conclusions

Consensus panels of clinical managers and administrators were guided in the development of a decision-support tool with two components: an eight-level model of levels of care and an associated computerized algorithm for level-of-care placement. Placements derived from case managers' assessments using the algorithm's criteria showed strong interrater reliability. Concurrent validity was demonstrated by significant relationships in the expected direction between level-of-care placement and psychiatric hospitalizations, arrests, residential moves, homeless periods, residential independence, work activity, medication noncompliance, and GAF score. Also, placement decisions based on the algorithm were not related to gender or ethnicity. The relationship with age appeared to reflect the greater self-care needs of older adults rather than bias.

A significant limitation of this study is that the algorithm for level-of-care placement is relevant only for systems serving primarily adults with severe and persistent mental illness. The algorithm would likely need modification if used with different populations or in different settings.

Next steps

Although results of this study are promising, several steps are necessary for refining the decision-support tool. First, further study should be undertaken of the reliability and validity of the Problem Severity Summary, the assessment instrument on which the algorithm is based. Improving this instrument would likely further strengthen reliability and validity of the resulting algorithm-based level-of-care placement. Tests of concurrent validity could also be enhanced by examining level-of-care placement relative to additional indicators of clinical severity such as independent ratings of functioning and symptoms. Also, the decision-support tool should be tested in other geographic locations to determine generalizability.

If further evidence is found of the tool's reliability and validity, tests of the tool's utility in actual clinical decisions about level of care and resource utilization can proceed. A useful decision-support tool would, for example, produce level-of-care placements that correspond to decisions made by clinicians, without use of the tool's algorithm, at the time clients are first seen for services. Such comparisons are best made at intake. Assessment of clients already receiving services may reveal only modest levels of symptoms or functional impairment, when in fact these levels may be largely due to the ameliorating effect of current services.

Along these lines, the tool's level-of-care algorithm should systematically take into account the impact of current treatment if the tool is to be used for placement decisions once a client has initiated treatment. Estimating the impact of services based on a client's impairment history or "titrating" services to the point at which symptoms reappear are possible ways of supporting such level-of-care placement decisions.

Additional tests of the tool's utility should show that clients placed at a particular level of care utilize services within the suggested range for that level and demonstrate positive clinical outcomes as a result. Variables that affect the relationship of level-of-care placement to service use and outcomes can be identified through concurrent review and used for refining the tool. Refining the level-of-care decision-support tool must therefore occur over time and in conjunction with meaningful outcome measurement to move from simply linking similar services to similar clients to guiding appropriate service decisions (11).

The decision-support tool presented here also does not address some constructs important for decisions about level of care. For example, the decision criteria do not account for the ameliorating effects of social and paraprofessional support. Clearly, a client who is well supported by family, friends, or support groups will need less support from mental health providers than a client with comparable clinical severity but without such support.

Clients' preferences for services and motivation to participate in services are also not accounted for by the tool's level-of-care algorithm. Clients with little motivation for treatment might be considered for a lower level of care. Services could also be tailored to increase their attractiveness to these clients.

Implications for use

Preliminary empirical evidence indicates that the level-of-care decision-support tool is reliable and valid. It also appears to be practical, in that it is based on a brief assessment that is readily translated into a computerized algorithm for level-of-care placement. These strengths make the tool potentially very useful for predicting and supporting level-of-care decisions. Such a tool will always need to be supplemented by clinical judgment, which draws on far more subtle information than a standardized tool can capture. Clinicians' reasons for disagreeing with placements based on the tool can be documented as a means of refining the tool. Thus the need for additional clinical input does not negate the tool's strengths.

With further refinement, the level-of-care decision-support tool could serve as a practice standard for concurrent review decisions and quality assurance. In this way, the tool could complement providers' efforts to match treatment to clinical needs. Service or benefit packages could also be based on the tool's level-of-care model and range of service hours. Aggregate level-of-care placement data can also provide a means to shift from the apparent tautology of planning for service and resource needs based on past utilization to planning based on clinical need. Before any of these uses are fully implemented, the tool must demonstrate predictable relationships between level-of-care placement, service utilization within the range for that level, and positive clinical outcomes. This study found support for such further use and study of the decision-support tool. In sum, the level-of-care decision-support tool shows promise of being a valuable practice standard and system-level planning tool.

Acknowledgment

This research was sponsored by the King County Mental Health Division in Seattle.

Dr. Srebnik is affiliated with the department of psychiatry and behavioral sciences at the University of Washington, Box 359911, Seattle, Washington 98195. Dr. Uehara is with the School of Social Work at the University of Washington. Mr. Smukler is affiliated with Eastside Mental Health Services in Bellevue, Washington.

Figure 1. Percentage of individuals with severity indicator placed in seven levels of mental health care


Table 1. Criteria for level-of-care placement algorithm and description of goals and services¹

¹ Symptoms, functioning, self-care, and maladaptive behaviors are rated on the Problem Severity Summary as slight, 2; marked, 3; severe, 4; extreme, 5.

² Service hours are standardized to an hour of individual treatment based on a ratio.

³ C, continuous care (case management); S, supervision

⁴ Symptoms: anxiety, depression, dissociation, mania, and psychosis

⁵ Functioning: community living skills, housing problems, financial problems, responses to stress, sustained attention, social withdrawal, substance abuse, treatment follow-through, and victimization

⁶ Self-care: cognitive impairment, physical disability, health status, self-care skills, and need for medical assistance

⁷ Maladaptive behaviors: dangerousness, negative social behaviors, or involvement in the legal system

⁸ Global Assessment of Functioning Scale


Table 2. Characteristics of a random sample of 1,034 adults and of the total population of 7,928 adults being served in a county mental health system in Washington, in percentages

† df=1 for all comparisons

1. Weed L: Knowledge Coupling: New Premises and New Tools for Medical Care and Education. New York, Springer-Verlag, 1991

2. Newman F, Tejeda M: The need for research that is designed to support decisions in the delivery of mental health services. American Psychologist 51:1040-1049, 1996

3. Feldman S (ed): Managed Mental Health Services. Springfield, Ill, Thomas, 1992

4. Smukler M, Srebnik D, Sherman P, et al: Developing local service standards. Journal of Mental Health Administration, in press

5. Burns BJ, Smith J, Goldman HH, et al: The CHAMPUS Tidewater demonstration project. New Directions for Mental Health Services, no 43:77-86, 1989

6. Schlesinger M: Striking a balance: capitation, the mentally ill, and public policy. New Directions for Mental Health Services, no 43:97-116, 1989

7. Koyanagi C, Manes J, Surles R, et al: On being very smart: the mental health community's response in the health care reform debate. Hospital and Community Psychiatry 44:537-542, 1992

8. Berlant L: Quality assurance in managed mental health, in Managed Mental Health Services. Edited by Feldman S. Springfield, Ill, Thomas, 1992

9. Gottlieb G: Diversity, uncertainty, and variations in practice: the behaviors and clinical decisionmaking of mental health care providers, in The Future of Mental Health Services Research. Edited by Taube CA, Mechanic D, Hohmann A. DHHS pub (ADM) 89-1600. Washington, DC, US Government Printing Office, 1989

10. Bartsch D, Shern D, Coen A, et al: Service Needs, Receipt, and Outcomes for Types of Clients With Serious and Persistent Illness. Denver, Colorado Mental Health Division, 1995

11. Uehara E, Smukler M, Newman F: Linking resource use to consumer level of need: field test of the Level of Need-Care Assessment (LONCA) method. Journal of Consulting and Clinical Psychology 62:695-709, 1994

12. Leff S: A Mental Health Service System Model for Managing Mental Health Care: Theory, Application, and Research. Boston, Human Services Research Institute, 1992

13. Minsky S, Kamis-Gould E: Classification of persons with severe and/or persistent mental disorders: the New Jersey experience. Paper presented at the northeastern Mental Health Statistics Improvement Program meeting, New York, Feb 1991

14. Nyman G, Harbin H, Book J, et al: Green Spring criteria for medical necessity of outpatient treatment and its use in a mental health utilization review program. Quality Assurance and Utilization Review 7:65-69, 1992

15. Richman A, Britton B: Level of care for deinstitutionalized psychiatric patients. Health Reports 4:269-275, 1992

16. Herman S, Mowbray C: Client typology based on functioning level assessments: utility for service planning and monitoring. Journal of Mental Health Administration 18:101-115, 1991

17. Newman F, Tippett M, Johnson D: A screening instrument for consumer placement in level of CSP: psychometric characteristics. Paper presented at the annual meeting of the American Evaluation Association, Seattle, Nov 1992

18. Postrado L, Lehman A: Quality of life and clinical predictors of rehospitalization of persons with severe mental illness. Psychiatric Services 46:1161-1165, 1995

19. Draine J, Solomon P: Comparison of seriously mentally ill case management clients with and without arrest histories. Journal of Psychiatry and Law 20:335-349, 1992

20. Draine J, Solomon P: Explaining attitudes toward medication compliance among a seriously mentally ill population. Journal of Nervous and Mental Disease 182:50-54, 1994

21. Diagnostic and Statistical Manual of Mental Disorders, 3rd ed, rev. Washington, DC, American Psychiatric Association, 1987

22. Glazer W, Kramer R, Montgomery J, et al: Medical necessity scales for inpatient concurrent review. Hospital and Community Psychiatry 43:935-937, 1992

23. Terkelsen K, McCarthy R, Munich R, et al: Development of clinical methods for utilization review in psychiatric day treatment. Journal of Mental Health Administration 21:298-312, 1994

24. Phillips C, Hawes C: Nursing home case-mix classification of residents suffering from cognitive impairment: RUGII and cognition in the treatment case-mix data base. Medical Care 30:105-116, 1992

25. Royall D, Mahurin R, True J, et al: Executive impairment among the functionally dependent: comparisons between schizophrenic and elderly subjects. American Journal of Psychiatry 150:1813-1819, 1993

26. Smukler M, Srebnik D, Uehara E: Problem Severity Summary: Final Report. Seattle, King County Division of Mental Health, 1992