An Ethnographic Study of Implementation of Evidence-Based Treatments in Child Mental Health: First Steps

There are numerous evidence-based treatments for depression, anxiety, disruptive conduct, and other mental, emotional, and behavioral problems among children and adolescents ( 1 , 2 , 3 ). However, these practices are not widely used in everyday clinical care ( 4 , 5 , 6 ). The causes of this gap between science and practice remain elusive, in part because of the slim body of evidence about factors that affect implementation of evidence-based treatments ( 7 , 8 , 9 ). Conceptual frameworks of implementation have been developed in other contexts, such as business and primary care ( 10 , 11 , 12 , 13 , 14 , 15 , 16 , 17 ), but it is not known whether they are transferable to implementation in the context of children's mental health services. Therefore, empirical studies of implementation in that context are important ( 10 , 14 ).

The Child System and Treatment Enhancement Projects (Child STEPs) initiative was launched in 2003 by the Research Network on Youth Mental Health, funded by the John D. and Catherine T. MacArthur Foundation, to help bridge the science-practice gap in children's mental health services. The conceptual framework guiding Child STEPs is depicted in a recently published paper by Schoenwald and colleagues ( 18 ). Synthesizing theory and research on technology transfer and implementation as they might apply to treatment adoption and implementation in clinical practice, this framework depicts dynamic patterns of influence on adoption and implementation of new treatments and services that arise within and among key elements of the children's mental health system. At the macro level, these key elements include the government agencies with mandated responsibility for oversight and financing of services, the provider organizations that are subsumed within or contracted by these state systems to provide mental health services, the staff who work in these organizations, the specific treatments and services that are delivered, and the children and families themselves ( 18 ).

Findings of the Research Network on Youth Mental Health regarding service system and organizational variables that are likely to affect child treatment implementation in community mental health settings have recently been published ( 19 , 20 ). The ethnographic study described here focused on the interface between two other domains in the Child STEPs conceptual model: the clinical staff (in this case, clinicians and clinical supervisors) and the treatment and services delivered. It also focused on the interactions between clinicians and researchers. The objectives of this study were to identify factors and processes in these domains that facilitate or impede the short-term implementation of evidence-based treatments and that influence long-term intentions to implement such treatments among clinicians engaged in randomized clinical effectiveness trials. Such trials are seen as a key step in the translation of research to practice ( 21 ).

Participant observation and extended semistructured interviews focused on the implementation, in 11 agencies, of evidence-based practices for the treatment of depression, anxiety, and disruptive conduct among children and adolescents. Ethnographic methods have long been used by researchers to understand the process and context of delivery of mental health services in general ( 22 , 23 , 24 ) and, more recently, the implementation of evidence-based treatments ( 22 , 25 , 26 ). Consistent with Klein and Sorra ( 13 ), Simpson ( 17 ), and Rogers ( 27 ), we defined implementation in this study as the gateway, or phase of innovation, that lies between the decision to adopt the innovation (in this case, to participate in an effectiveness trial of evidence-based treatments) and the routine use of the innovation (in this case, evidence-based treatment sustainability). We further defined short-term implementation as the processes and activities that occur after training and during the first three to six months of use of a treatment in a randomized clinical effectiveness trial.

Methods

The Clinic Treatment Project

The ethnographic study took place in the context of the Clinic Treatment Project (CTP), which was carried out by the Research Network on Youth Mental Health. The CTP focused on children ages eight to 13 who had been referred for treatment of problems involving depression, anxiety, disruptive conduct, or any combination of these. Five agencies in Honolulu and six agencies in Boston participated in the project. Therapists in each clinic who consented to participate were randomly assigned to one of three conditions for treating the children recruited into the study: standard manualized treatment, modular manualized treatment (both described below), or their usual approach to clinical care.

For treatment of anxiety, the specific manualized program was Coping Cat, developed by Kendall and colleagues ( 28 ). For treatment of depression, the specific manualized program was Primary and Secondary Control Enhancement Training, developed by Weisz and colleagues ( 29 ). For treatment of conduct problems, the specific manualized program was Defiant Children: A Clinician's Manual for Assessment and Parent Training, developed by Barkley ( 30 ). These evidence-based treatments are being tested in two forms: standard manualized treatment, which uses full treatment manuals, in the forms that have been tested in previous efficacy trials, and modular manualized treatment, in which clinicians learn all the component practices of the evidence-based treatments but individualize use of the components for each child, guided by a clinical algorithm ( 31 ).

Clinicians randomly assigned to standard manualized treatment or modular manualized treatment received training in the specific treatment procedures plus weekly case consultation from project supervisors familiar with the protocols to assist the clinicians in applying the treatment procedures to youngsters in their caseload. The children and families who consented to participate in the study were randomly assigned to receive one of these two approaches to treatment or usual care.

In Honolulu, clinicians were trained in three cohorts: winter 2004–2005, summer 2006, and summer 2007. In Boston, clinicians were trained in two cohorts: winter and spring 2005 and fall 2006. Although clinicians assigned to usual care may have had access to the manuals or materials (some of which can be purchased commercially online) used by clinicians in the standard and modular manualized conditions, actual implementation of the evidence-based treatments required extensive supervision, coaching, and rehearsal, which was provided only to clinicians in the corresponding manualized condition.

Participants

Participants in this study were four trainers, six project supervisors, and 52 clinicians in the first Honolulu and Boston cohorts. A majority of clinicians (36 clinicians, or 69%) were women, and the mean±SD age of all clinicians was 40.2±10.4 years. Most of the clinicians had master's degrees (37 clinicians, or 71%), and the remainder had doctoral degrees (15 clinicians, or 29%). Of the professional disciplines represented, the largest percentage of participants was trained in social work (22 clinicians, or 42%), followed by clinical psychology (12 clinicians, or 23%), mental health counseling (ten clinicians, or 19%), education (three clinicians, or 6%), marriage and family therapy (three clinicians, or 6%), psychiatry (one clinician, or 2%), and care management (one clinician, or 2%). Clinicians encompassed a range of ethnic groups, including Caucasian (30 clinicians, or 58%), Asian American or Pacific Islander (15 clinicians, or 29%), African American (one clinician, or 2%), and Latino (one clinician, or 2%). Four clinicians (8%) did not report information on race or ethnicity.
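Because the education and discipline percentages above are all shares of the 52 clinicians, they can be rechecked directly from the counts. The following is a minimal Python sketch, assuming that every percentage uses 52 as its denominator and is rounded to the nearest whole number; the dictionary labels and the `percent` helper are illustrative names, not part of the study's materials.

```python
# Counts as reported in the Participants paragraph (denominator assumed to be 52).
N_CLINICIANS = 52

counts = {
    "women": 36,
    "master's degree": 37,
    "doctoral degree": 15,
    "social work": 22,
    "clinical psychology": 12,
    "mental health counseling": 10,
    "education": 3,
    "marriage and family therapy": 3,
    "psychiatry": 1,
    "care management": 1,
}


def percent(count: int, total: int = N_CLINICIANS) -> int:
    """Return count/total as a whole-number percentage, rounding halves up."""
    return int(count * 100 / total + 0.5)


percentages = {label: percent(n) for label, n in counts.items()}

# The seven disciplines should account for every clinician.
discipline_labels = [
    "social work", "clinical psychology", "mental health counseling",
    "education", "marriage and family therapy", "psychiatry", "care management",
]
assert sum(counts[label] for label in discipline_labels) == N_CLINICIANS

# Degree categories are also exhaustive: master's plus doctoral equals 52.
assert counts["master's degree"] + counts["doctoral degree"] == N_CLINICIANS

for label, pct in percentages.items():
    print(f"{label}: {counts[label]} of {N_CLINICIANS} = {pct}%")
```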

The protocol was approved by institutional review boards of the University of Southern California, University of Hawaii, and Judge Baker Children's Center at Harvard University. After complete description of the study to participants, verbal informed consent was obtained. Participation in both the CTP trial and the ethnographic study was voluntary. Although peer pressure among clinicians may have influenced some clinicians to continue participating in the CTP once training had been completed, researchers attempted to create an atmosphere in which all clinicians were excited about and committed to participation. Seventeen clinicians withdrew from the CTP: one because of a client family's dissatisfaction with her use of one of the treatments; ten because they were laid off by the clinic for fiscal reasons or sought employment elsewhere for reasons unrelated to the CTP; and six because the organization employing them elected to discontinue participation after a transition in leadership, when the new director judged that the organization would be unable to fully commit its staff and resources to the project during this period. Nevertheless, all 17 of these clinicians remained in the ethnographic study for as long as they and their clinics participated in the CTP, so their experiences during this period are included in the analysis reported here.

Data collection

Data collection consisted of participant observation, interviews with study participants, and review of minutes of teleconferences among supervisors, trainers, and CTP investigators. Participant observation took place at meetings of the research network, at training workshops, during visits to study clinics, and at social events with project clinicians. Approximately 230 hours of observation between January 2004 and March 2007 provided information on study progress and process. These observations spanned the nine months before clinician training, the 12 months before youths were enrolled in treatment, and the first 26 months of active treatment, thus providing a window into the preimplementation and early implementation experiences of clinicians.

Extended semistructured interviews with six clinical supervisors (all postdoctoral students employed by the CTP) were conducted by the first author, an experienced medical anthropologist, in September 2006 (four supervisors in Boston) and October 2006 (two supervisors in Honolulu). The interviews followed an interview guide designed to collect information about clinicians' understanding of the principles and procedures of the standard and modular manualized treatments, clinicians' experience in using the treatments, supervisors' experience to date in supervising and interacting with project clinicians, and indicators of clinicians' acceptance of the treatments. The interviews were sufficiently open ended to enable participants to discuss issues they considered relevant to implementation. Consistent with the iterative nature of qualitative research ( 32 ), the content of the guide was modified over time as preliminary analyses of initial interviews suggested new directions of inquiry or the need for more detailed information on particular topics. All interviews lasted approximately one hour.

Brief semistructured interviews were also conducted with 17 clinicians and two clinic directors in Boston and seven clinicians and two clinic directors in Honolulu. All these clinicians were in the standard or modular manualized treatment conditions. The interviews were used to collect information on experiences in using evidence-based treatments to date, initial assessments of the usefulness and practicality of the standard and modular manualized treatments, and motivations for participating in the project.

Data management and analysis

Interviews with clinical supervisors were digitally recorded and transcribed, and the first author checked the transcriptions for accuracy. All data were then analyzed with a methodology rooted in grounded theory ( 33 ), in the following steps. First, all data were reviewed to develop a broad understanding of their content as it relates to the project's specific aims and to identify topics of discussion and observation. During this step, short descriptive statements, or "memos," were prepared to document initial impressions of topics and themes and their relationships and to define the boundaries of specific codes (that is, the inclusion and exclusion criteria for assigning a specific code) ( 34 ). Second, material in field notes, interviews, and meeting minutes was coded to condense the data into analyzable units. Segments of text ranging from a phrase to several paragraphs were assigned codes on the basis of a priori themes (that is, those from the interview guide) or emergent themes (also known as open coding) ( 35 ). Third, codes were assigned to describe connections between categories and between categories and subcategories (also known as axial coding) ( 35 ). The final list of codes (or codebook) consisted of themes, issues, accounts of behaviors, and opinions associated with implementation of evidence-based treatments.
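The codebook described in these steps pairs each code with inclusion and exclusion criteria, records whether it came from the interview guide or from open coding, and, after axial coding, links it to a broader category. The Python sketch below is a hypothetical illustration of that structure, not the study's actual codebook: the class and function names are invented, the code names and categories are borrowed from the themes reported in Results, and the origins and criteria are made up for demonstration. The two example segments reuse sentences that appear elsewhere in this article.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Code:
    """One hypothetical codebook entry; the criteria mark the code's boundaries."""
    name: str
    origin: str                   # "a priori" (interview guide) or "emergent" (open coding)
    include_when: str             # inclusion criterion for assigning the code
    exclude_when: str             # exclusion criterion
    parent: Optional[str] = None  # broader category, filled in during axial coding


@dataclass
class Segment:
    """A span of text, from a phrase to several paragraphs, and the codes it carries."""
    source: str                   # field notes, interview transcript, or meeting minutes
    text: str
    codes: set[str] = field(default_factory=set)


def subcategories(codebook: list[Code], category: str) -> list[str]:
    """Axial-coding view: names of the codes filed under one category."""
    return [code.name for code in codebook if code.parent == category]


def segments_with(segments: list[Segment], code_name: str) -> list[Segment]:
    """Retrieve every segment carrying a given code, e.g. for memo writing."""
    return [seg for seg in segments if code_name in seg.codes]


# Hypothetical entries: origins and criteria are invented for illustration.
codebook = [
    Code("lag time between training and use", "emergent",
         include_when="delay between training and first study case",
         exclude_when="recruitment delays not linked to skills or intentions",
         parent="determinants of implementation"),
    Code("clinician-treatment fit", "emergent",
         include_when="match between a clinician's style or training and a protocol",
         exclude_when="opinions of a protocol with no reference to the clinician",
         parent="determinants of implementation"),
    Code("clinician first impressions", "a priori",
         include_when="early positive or negative views of a treatment",
         exclude_when="views formed only after extended use",
         parent="short-term implementation"),
]

segments = [
    Segment("supervisor interview",
            "they lost a lot of what they had in the training",
            {"lag time between training and use"}),
    Segment("field notes",
            "Two clinicians reported that they had never before used a manual "
            "to treat a client.",
            {"clinician-treatment fit", "clinician first impressions"}),
]

print(subcategories(codebook, "determinants of implementation"))
print(len(segments_with(segments, "clinician-treatment fit")))
```

Keeping the inclusion and exclusion criteria on the code itself mirrors the role of the memos in the first step: anyone applying a code can check its boundaries in the same place.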

Fourth, the computer program QSR NVivo ( 36 ) was used to arrange the coded text segments into a treelike hierarchy of categories, or "nodes," which furthered the process of axial or pattern coding and allowed associations between categories to be examined. Fifth, the technique of constant comparison was used to condense the categories further into broad themes, which were then linked into a heuristic framework by identifying instances in the texts where themes "co-occurred" (that is, where different codes had been assigned to the same or adjacent passages).
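The co-occurrence rule in the fifth step (different codes assigned to the same or adjacent passages) can be expressed as a small counting procedure. The following Python sketch is a hypothetical, stand-alone illustration and does not reproduce how NVivo performs its queries; the function name, the one-passage adjacency window, and the coded passages are assumptions made for demonstration.

```python
from collections import Counter, defaultdict


def co_occurrences(documents, window=1):
    """Count co-occurring code pairs across coded documents.

    `documents` maps a document id to its passages in reading order; each
    passage is the set of codes assigned to it. Two different codes co-occur
    when they are on the same passage or on passages at most `window`
    positions apart. Each (document, passage, passage) location is counted
    once per unordered code pair.
    """
    locations = defaultdict(set)
    for doc_id, passages in documents.items():
        for i, codes_i in enumerate(passages):
            # Compare passage i with itself and with the next `window` passages.
            for j in range(i, min(i + window + 1, len(passages))):
                for a in codes_i:
                    for b in passages[j]:
                        if a != b:
                            pair = (min(a, b), max(a, b))  # unordered pair
                            locations[pair].add((doc_id, i, j))
    return Counter({pair: len(locs) for pair, locs in locations.items()})


# Hypothetical coded passages; code names echo the themes in Results.
documents = {
    "supervisor_interview_1": [
        {"lag time", "clinician competence"},
        {"first impressions"},
        set(),
        {"clinician-treatment fit"},
    ],
    "clinician_interview_1": [
        {"first impressions", "clinician competence"},
    ],
}

result = co_occurrences(documents)
for (a, b), n in result.most_common():
    print(f"{a} + {b}: {n}")
```

Raising `window` widens what counts as adjacent, and setting it to 0 restricts co-occurrence to codes on the same passage. High counts only flag candidate links between themes; in the analysis described above, such links would still be checked against the texts through constant comparison.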

Results

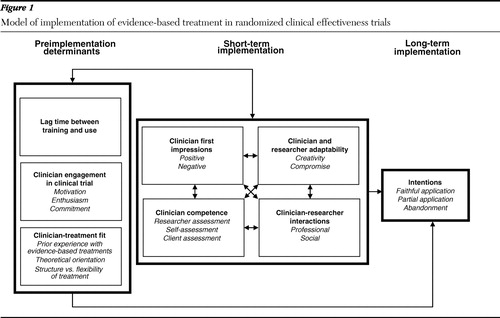

Our analyses identified eight general themes that together suggest a heuristic model of implementation of evidence-based treatments for child mental health. The themes are illustrated in Figure 1. The eight themes, in turn, were grouped into three categories: long-term implementation intentions, determinants of implementation, and short-term implementation. Each of these categories and the themes that constitute it are discussed below.

Long-term implementation intention

Because the CTP had not been completed at the time this ethnographic study was conducted, it was not possible to assess the likelihood that clinicians would continue using the standard or modular manualized approach to any of the three evidence-based treatments. However, reports by clinical supervisors and by clinicians in the standard and modular manualized conditions revealed three discrete patterns of potential long-term implementation. The first pattern was faithful application of the treatments as specified with all or most of the clients in need of these treatments. One clinician indicated that she hoped to have at least one of each kind of case so that she would know how to do all of the treatments once the study was over. Another clinician stated that she wanted to learn the protocol for use with a client who was not in the study. Four of the six clinicians who indicated their intention to continue using the treatments with fidelity to the protocols as trained were in the modular manualized treatment condition.

In contrast, some clinicians indicated that they were unlikely to continue using the treatments once the study had ended. For example, one clinician commented that she would "definitely not be doing this were it not for the fact that I agreed to participate in the study." Another clinician expressed dissatisfaction with the techniques of parent management training because they left her little time to devote to other practices, such as play therapy, in which she had more confidence. All four clinicians who indicated that they intended to stop using the evidence-based treatments once the study was completed were in the standard manualized treatment condition.

Most clinicians, however, seemed likely to adopt a third pattern: selective use of treatment components. Fourteen of the 24 clinicians interviewed reported that they would continue using some of the techniques, but, as described by one of the clinical supervisors, "either they might select one of the protocols and use it or use it for some of their clients but not for the majority of them." One clinician in the standard manualized treatment condition stated, "I would like to use them again but not necessarily in the same order. I like some of these pieces a lot, and some of these not so much." Ten of the 14 clinicians who anticipated selective use after study completion were in the modular manualized condition, and four were in the standard manualized condition; all indicated that they would attempt to apply the selected components with specific clients with the fidelity prescribed in the modular manualized version of treatment.
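The counts reported across the three paragraphs on long-term intention can be collected into a pattern-by-condition table. The following is a minimal Python sketch; it assumes that the two clinicians with faithful-use intentions who were not in the modular condition were in the standard condition, which follows from every interviewed clinician having been assigned to one of the two manualized conditions. Variable names are illustrative.

```python
# Counts reported in the three paragraphs on long-term implementation intention.
# Assumption: the two "faithful application" clinicians outside the modular
# condition were in the standard condition (all 24 interviewed clinicians were
# in one of the two manualized conditions).
intentions = {
    "faithful application": {"modular": 4, "standard": 2},
    "abandonment": {"modular": 0, "standard": 4},
    "selective use": {"modular": 10, "standard": 4},
}

conditions = ("modular", "standard")
col_totals = {c: sum(row[c] for row in intentions.values()) for c in conditions}
grand_total = sum(col_totals.values())

print(f"{'pattern':<22}{'modular':>9}{'standard':>10}{'total':>7}")
for pattern, row in intentions.items():
    print(f"{pattern:<22}{row['modular']:>9}{row['standard']:>10}{sum(row.values()):>7}")
print(f"{'total':<22}{col_totals['modular']:>9}{col_totals['standard']:>10}{grand_total:>7}")
```

Laid out this way, the table shows that the row totals (6, 4, and 14) sum to the 24 clinicians interviewed, and that selective use is the most common pattern overall.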

Determinants of implementation

Three primary factors emerged as perceived determinants of evidence-based treatment implementation: lag time between training and use, clinician engagement with the clinical trial, and clinician-treatment fit.

Lag time between training and use. Supervisors cited the lag time between initial training in the treatment protocol and use of the treatment in practice, together with the number of practice cases, as primary factors affecting both clinicians' competence in using the treatments and their intention to continue using them once the project had ended. One supervisor observed, "For the first group [of clinicians trained in Hawaii], there was such a long lag time between when they went through the training and when they picked up cases that they lost a lot of what they had in the training." A few clinicians expressed frustration that slower-than-expected client recruitment delayed their use of the new skills. As the CTP progressed, however, the time between training and application was reduced considerably.

Clinician engagement in the clinical trial. The level of engagement of clinicians in the standard and modular manualized treatment conditions was another important determinant of implementation intention. By engagement, clinicians and supervisors meant the motivation and enthusiasm for and commitment to participating in the implementation of the evidence-based treatments within the controlled and highly regulated process specified by the design of a randomized clinical trial. All clinicians had provided informed consent to participate in the study; however, some were more excited than others about participating, citing factors such as the opportunity to learn new techniques that could increase their effectiveness as clinicians as well as their marketability and the opportunity to contribute to the field by participating in a research project. One supervisor described this group of clinicians as follows: "They read their manuals every week before sessions. They get it in terms of getting that there is a protocol for them to follow, where they can do it without being coached."

Clinicians who were less enthusiastic about participating in the CTP were identified by research supervisors as being more difficult to supervise and more likely to express the intention to discontinue treatment use upon project completion. During training, clinicians randomly assigned to the standard manualized condition were less enthusiastic about participating than those assigned to the modular manualized condition; they feared that the standard manualized treatment would be too inconvenient to use, would not be acceptable to clients, and would limit their ability to exercise creativity and control over the treatment process.

Perhaps the best indication of engagement, based on statements provided by clinicians and clinical supervisors during interviews, was the perception that clinicians remained committed to adopting the treatments despite the challenges involved. This commitment was evident even among clinicians who held negative opinions of the treatments, and it seemed to be independent of assignment to treatment condition. As one clinician stated, "I am going to do their study, whether it feels good or not. This is what I signed up for, and I'm going to do it!"

Clinician-treatment fit. A third determinant of intended long-term use of the evidence-based treatments was the match between certain clinician and treatment attributes. For example, clinicians randomly assigned to the standard manualized treatment condition who preferred or needed structure in working with clients or who had previous experience in using structured practices were more likely than clinicians without these needs or preferences to have positive impressions and experiences and exhibit competence in applying these treatments with clients. Clinicians whose clinical experiences and theoretical orientation called for a more flexible approach to working with clients were better suited to the modular manualized condition.

Among the factors that influenced this fit was the clinician's previous clinical experience. For instance, some clinicians expressed difficulty adopting the behavioral techniques of parent training because they were not accustomed to working with parents. One supervisor noted that clinicians who were struggling with the standard manualized treatment protocol were accustomed to more unstructured practices, whereas those who were successful had "some components of their usual approach to treatment that were a little bit more structured." Two clinicians reported that they had never before used a manual to treat a client.

Another factor related to the fit between clinician and evidence-based treatment was the clinician's theoretical orientation, as exemplified in this comment from one supervisor about one of the clinicians in the standard manualized condition: "Having a plan was a bad thing because it was supposed to come and be derived from the child. It was really in the opposite direction. And there was direct conflict between what we had been talking about and what she had been trained in." Other clinicians reported that they did not believe in timeout as specified in the behavioral parent training protocols. Although these clinicians used the techniques as instructed, it was unclear whether they would continue to use them once the CTP was finished.

Short-term implementation

Four additional themes emerged from our analysis of possible determinants of long-term intention to implement the evidence-based treatments. These themes appeared to represent components of the first steps of implementation, after training and during the first six months of treatment use: clinicians' first impressions (positive and negative) of features of the treatments, clinician competence in treatment application, clinician and researcher adaptability, and clinician-researcher interactions.

Clinician first impressions. This theme represented a cognitive dimension of evidence-based treatment use that included positive and negative attitudes and beliefs and expectancies informed by preimplementation factors and by initial experiences with treatment use. Many clinicians made positive comments about features of the treatments, especially the depression and anxiety programs, noting that they represented something new in terms of clinical practice; provided a more structured approach to things that the clinicians were doing already, which was especially helpful for clinicians with limited clinical experience; and were somewhat familiar. One clinician interviewed during a training workshop expressed satisfaction that the treatments were based on clinical experience. Other clinicians came to accept the treatments after beginning to use them. Several clinicians interviewed after training expressed surprise at how well they had been able to use the manuals or how well parents had been engaged in treatment. In general, clinicians who were perceived by supervisors to be using a treatment well were positive about it.

However, many clinicians also had negative impressions, particularly of the behavioral training for parents. The clinicians had several practical concerns about this treatment. One was having to "translate" the treatment for parents to make it sound less scientific. Another was having to anticipate that the child might refuse parental instructions and that the parent would feel it was not worth fighting for. Clinicians also had concerns that families would be unable to handle the sophistication of a point system designed to reinforce positive behavior. The clinicians were concerned that they had insufficient time to teach a skill in a session and that they lacked clinical resources to make it work. Clinicians also expressed more philosophical concerns. Some were concerned that actual cases would be more complex than those described in the training materials. Some were ambivalent about using protocols that they perceived to be too structured, rigid, and inflexible. For some clinicians, these impressions were reinforced by initial experiences in using the treatments. They reported that the treatment was hard to do, both for the client and for themselves.

Clinical competence. A behavioral dimension of the first steps of implementation was the clinician's skill in using the evidence-based treatment. Supervisors reported considerable variability in clinician competence. For instance, some clinicians failed to complete homework assignments or prepare for sessions as instructed. Some clinicians were quick to learn the skills and apply them in practice cases and with actual clients, whereas other clinicians were perceived to be "just not getting it." This assessment was made by supervisors, clients, and the clinicians themselves. One clinician, for instance, claimed that she had been "fired" by a parent of one of her clients because of dissatisfaction with the lack of treatment progress.

Clinician and researcher adaptability. The degree to which both clinicians and researchers exhibited adaptability in assuming their respective roles within the CTP was another important component of short-term use of the evidence-based treatments and a determinant of intended long-term use. One indicator of this adaptability among clinicians was the extent to which they took initiative and exercised creativity in applying the material and integrating it with their own theoretical orientation and previous training. According to a supervisor, one clinician "used these techniques a bit more flexibly than we would have wanted. But she pulled it off really well, and so I kind of … felt more comfortable [in the therapist's] straying from maybe what would have been ideal for the study." Another clinician who believed that nutrition is fundamental to mental health added a component about nutrition in the behavioral parent training. Other clinicians, as one supervisor put it, "pretty much do what they need to do to stay on track."

A second indicator of adaptability was the extent to which a clinician was willing to compromise by abandoning, at least in part, usual patterns of treatment and theoretical orientation toward treatment. For instance, one clinician commented, "I don't want to take on more cases because they are more work than I had realized." However, he was prevailed upon to do so by his research supervisor. Other clinicians agreed to instruct parents in using timeout techniques as specified by the behavioral parent training manual ( 30 ), even though they personally did not believe in timeout.

The same two indicators of creativity and compromise on the part of the researchers also were perceived to be a critical component of short-term use of the treatments and a determinant of intentions for long-term use. To varying degrees, project investigators and supervisors worked to create positive first impressions of both standard and modular manualized treatment approaches and exercised creativity in working with clinicians to improve competence in treatment use. Activities that were undertaken to find common ground with the clinicians included identifying the consistency between the treatments and a clinician's own theoretical orientation, exhibiting a willingness to understand that orientation and fit the treatments within its framework, incorporating clinician suggestions in treatment use, accommodating clinician priorities, building a common language or using the clinician's language to more effectively communicate with the clinician, seeking out clinician strengths and motivations, and being deferential to certain clinicians and directive with others. Not every supervisor employed these strategies, and not every strategy was employed with every clinician. However, both researchers and clinicians who reported using these strategies suggested that they played an important role in improving clinician performance in treatment use.

Similarly, although supervisors were charged with helping clinicians use the treatments as they had been trained to do, they were reluctant to push clinicians too far for fear that the clinicians would withdraw from the study, thereby jeopardizing the study's integrity and its power to detect statistical differences between the standard and modular manualized approaches to treatment and between the two approaches and usual care. Project investigators thus devoted considerable time and energy to finding the "right balance" between the treatments as designed and as preferred by the clinicians, and in doing so they often made compromises in working with clinicians. For instance, when clinicians sought reassurance because a lack of time might keep them from covering everything the manual prescribed for a session, investigators told them, "This is fine as long as you get to an understanding of the skill."

Clinician-researcher interactions. Of all the positive features of the treatments, the one cited most frequently by clinicians and supervisors alike was the supervision that came with project participation. Clinicians who had begun working with supervisors on actual cases commented on how much they enjoyed being supervised and how much it helped to improve their clinical skills in general. According to one supervisor, "All the clinicians who were participating [at one clinic] were saying really positive things. They like getting paid for getting an hour of supervision on a case. To have that in their schedule … they see as a luxury." A clinician reported that the time spent interacting with a research supervisor during her weekly sessions was "the best thing about the project."

CTP investigators and supervisors also exercised creativity and compromise through social interactions with clinicians outside project work. They planned and participated in social activities with clinicians, such as dinners honoring clinicians for their involvement in the project and potluck picnics and lunches. At clinicians' request, they also engaged in discussions and activities unrelated to the project, advising clinicians on how to handle problems at work, care for ailing parents, or cope with children leaving for college, and answering questions about career opportunities or about cases outside the study. However, one supervisor reported that such interactions required considerable time and energy on her part, and not all supervisors or clinicians were willing or able to engage in them.

Discussion

Our analysis of ethnographic field notes, interview transcripts, and meeting minutes revealed eight themes grouped into three categories: long-term implementation intentions, determinants of implementation, and short-term implementation, or first steps. We identified three patterns of intentions regarding long-term implementation of evidence-based treatments in the context of a randomized clinical effectiveness trial: faithful application of the treatments as taught by the CTP investigators, abandonment of the treatments upon completion of the CTP, and selective application. These patterns, in turn, appear to be related to three preimplementation factors (lag time between training and use, clinician engagement in the clinical trial, and clinician-treatment fit) and four short-term implementation factors (clinician first impressions of the treatments, clinical competence in treatment use, clinician and researcher adaptability, and clinician-researcher interactions). As illustrated in Figure 1, the short-term implementation factors were also associated with the preimplementation factors.

For the most part, these factors are similar to those identified in the research literature on evidence-based practice implementation and innovation. For instance, staff attributes in Simpson's ( 17 ) model, including professional growth, efficacy, and adaptability, can be found in the themes of clinician engagement, competence, and adaptability. Implementation drivers in the model of Fixsen and colleagues ( 10 ), including preservice and in-service training, ongoing consultation and coaching, and staff and program evaluation, can be found in the themes of lag time between training and use, adaptability, and clinician-researcher interactions. The themes of adaptability and interaction also contain elements of the model proposed by Barwick and colleagues ( 37 ): participatory approaches that consider the needs and preferences of all stakeholders, attention to the components of knowledge transfer (source, content, medium, and user), and the building of face-to-face relationships. Clinician willingness to adopt and adapt the treatments and clinicians' ability to implement key intervention components in routine practice, both part of the RE-AIM model developed by Glasgow and colleagues ( 11 ), can be found in the themes of clinician engagement, competence, and adaptability. Elements of the predisposing, enabling, and reinforcing factors in the PRECEDE phase of the PRECEDE-PROCEED model ( 12 ) can be found in the themes of clinician engagement, clinician-treatment fit, adaptability, and clinician-researcher interactions.

Our analyses also revealed interrelationships among these factors. For example, positive or negative first impressions of the treatments contributed to and were the product of clinical competence in using them. Both first impressions and clinical competence contributed to the adaptability of researchers and clinicians and to the interactions between researchers and clinicians. Changes in first impressions and clinical competence, in turn, were related to patterns of adaptability and interactions as researchers sought to improve both clinician engagement and performance through training and supervision.

On the basis of the themes elicited through grounded-theory techniques and the interrelationships identified among them, we propose a heuristic model of evidence-based treatment implementation at the level of clinical supervisors and clinicians and at the level of treatment and service content, as outlined in Figure 1 . This model can guide future research in two ways. First, it offers a series of propositions or hypotheses that may be tested either quantitatively or qualitatively with subsequent, more extensive data collected from the CTP. For instance, one hypothesis emanating from this model is that initial experiences with implementation, based on the interaction of attitudes, beliefs, expectancies, and behavioral competence, are significantly associated with the pattern of long-term implementation (complete application with fidelity, partial application, or abandonment). Another hypothesis is that the components of short-term implementation moderate the effects of preimplementation factors on actual implementation and on intended long-term implementation. Alternatively, patterns of either short-term or long-term implementation may be mediated by attributes of specific treatments and by clinician-treatment fit. Second, this model can serve as a baseline against which to evaluate changes in implementation processes, facilitators, and barriers over time.

As to the nature of these changes, the results of this study suggest a dynamic and ongoing relationship between preimplementation factors and experience, manifested in the components of short-term implementation, or first steps. Whereas preimplementation factors shaped clinicians' experience of the CTP as a whole and of implementing evidence-based treatments in particular, that experience in turn led to changes in all seven determinants of long-term implementation intention. For instance, as supervisory sessions were modified on the basis of clinician feedback and researcher adaptability, researchers and supervisors reported more engagement on the part of clinicians. Engagement also followed increased competence in treatment use and more positive impressions of the treatments, and these experiences improved the fit between clinician and treatment. The reciprocal nature of the associations between preimplementation factors and short-term implementation experience suggests that the strength of these associations may increase or decrease over time as they are modified by experience.

Finally, the results of this study suggest that one of the major drivers of clinician intentions to implement evidence-based treatments is the interaction between treatment developers and users or, in this instance, CTP investigators and clinicians. CTP investigators used several strategies to promote clinician engagement in use of the evidence-based treatments. These included making deliberate efforts to find common ground, creating and participating in social activities, and accommodating clinicians' desires to discuss non-project-related issues during supervision sessions. CTP clinicians collaborated with investigators and supervisors in deliberate efforts to seek common ground and develop a common language that incorporated elements of the principles and practice of the treatments and the clinical experience and theoretical orientations of the clinicians. Such interactions are viewed as critical to the diffusion of innovations ( 25 ). Several clinicians came to accept and adopt the treatments largely on the basis of their professional and social interactions with researchers.

There are several limitations to our study that deserve mention. This investigation focused only on the initial steps of evidence-based treatment implementation. Although our findings suggest that implementation patterns and processes will change over time, it would be premature to specify the nature of those changes. Moreover, implementation intention is not the same as actual implementation, especially when clinicians are exposed to practices that are novel and unfamiliar. We also focused only on the clinical supervisor-clinician and treatment content domains of evidence-based treatment implementation; as hypothesized in the conceptual model underlying the CTP ( 18 ), the extent of implementation will also be influenced by organization and client factors. For instance, as noted above, one organization withdrew from the clinical trial because of a transition in clinic management unrelated to the CTP. The premature withdrawal of the six clinicians employed by this organization and of the ten other clinicians who left to seek employment elsewhere may have influenced the likelihood that they would actually implement the evidence-based practices over the long term; however, their experiences and intentions to use the practices were very similar to those of the clinicians who remained in the CTP throughout the study period. Thus, although withdrawal from a clinical trial may affect long-term outcomes by decreasing sample size (which was not the case in the CTP) or may require investigators to recruit new organizations and train additional clinicians (which was the case in the CTP), it did not appear to influence the results of this study.

In addition, clinicians who participated in this ethnographic study were recruited through convenience sampling methods and may not represent the broader population of clinicians participating in the CTP, much less the broader population of clinicians engaged in child and adolescent mental health services. Consequently, the results obtained thus far may not generalize to either population. The study was qualitative, and both collection and interpretation of data in such studies are susceptible to subjective bias and preconceived ideas of the investigators, especially under circumstances in which investigators are both study participants and observers. However, use of multiple observers and of multiple sources of data to achieve "triangulation" ( 32 ) was designed to minimize such bias.

Finally, as others have noted ( 11 ), because the processes described were examined in the context of a randomized clinical trial, the results may not generalize to evidence-based treatment implementation efforts that do not involve randomized trials, or they may generalize differently depending on the core mediators of the treatments. Further research is required to empirically validate the model described in Figure 1 in implementation contexts outside randomized clinical trials.

Conclusions

Despite these limitations, the results of this study suggest that an understanding of evidence-based treatment implementation requires a longitudinal perspective that takes into consideration the dynamic relationship between factors often viewed as static (for example, clinician and treatment attributes and lag time between training and use) and the experience of implementation. It also requires a perspective that acknowledges the dynamic relationship formed between evidence-based treatment developers and practicing clinicians.

Acknowledgments and disclosures

This study was funded through a grant from the John D. and Catherine T. MacArthur Foundation and grants P50-MH-50313-07 and R25-MH-067377 from the National Institute of Mental Health. The Research Network on Youth Mental Health is a collaborative network funded by the John D. and Catherine T. MacArthur Foundation. At the time this work was performed, network members included John Weisz, Ph.D. (director), Bruce Chorpita, Ph.D., Robert Gibbons, Ph.D., Charles Glisson, Ph.D., Evelyn Polk Green, M.A., Kimberly Hoagwood, Ph.D., Kelly Kelleher, M.D., John Landsverk, Ph.D., Stephen Mayberg, Ph.D., Jeanne Miranda, Ph.D., Lawrence Palinkas, Ph.D., and Sonja Schoenwald, Ph.D.

The authors report no competing interests.

1. Burns BJ: Children and evidence-based practice. Psychiatric Clinics of North America 26:955–970, 2003

2. Henggeler SW, Schoenwald SK, Borduin CM, et al: Multisystemic Treatment of Antisocial Behavior in Children and Adolescents. New York, Guilford, 1998

3. Webster-Stratton C, Reid MJ, Hammond M: Treating children with early-onset conduct disorder: intervention outcomes for parent, child, and teacher training. Journal of Clinical Child and Adolescent Psychology 31:168–180, 2004

4. Weisz JR, Hawley K, Jensen-Doss A: Empirically tested psychotherapies for youth internalizing and externalizing problems and disorders. Child and Adolescent Psychiatric Clinics of North America 13:729–815, 2004

5. Bickman L, Lambert EW, Andrade AR, et al: The Fort Bragg continuum of care for children and adolescents: mental health outcomes over 5 years. Journal of Consulting and Clinical Psychology 68:710–716, 2000

6. Chorpita BF, Yim LM, Donkervoet JC, et al: Toward large-scale implementation of empirically supported treatments for children. Clinical Psychology: Science and Practice 9:165–190, 2002

7. National Advisory Mental Health Council: Bridging Science and Service: A Report by the National Advisory Mental Health Council's Clinical Treatment and Services Research Workgroup. NIH pub no 99-4353. Rockville, Md, National Institute of Mental Health, 1999

8. Hoagwood K, Burns BJ, Kiser L, et al: Evidence-based practice in child and adolescent mental health services. Psychiatric Services 52:1179–1189, 2001

9. Glisson C: The organizational context of children's mental health services. Clinical Child and Family Psychology Review 5:233–253, 2002

10. Fixsen DL, Naoom SF, Blase KA, et al: Implementation Research: A Synthesis of the Literature. FMHI pub no 231. Tampa, University of South Florida, Louis de la Parte Florida Mental Health Institute, the National Implementation Research Network, 2005

11. Glasgow RE, Lichtenstein E, Marcus AC: Why don't we see more translation of health promotion research to practice? Rethinking the efficacy-to-effectiveness transition. American Journal of Public Health 93:1261–1267, 2003

12. Green LW, Kreuter MW: Health Promotion Planning: An Educational and Ecological Approach, 3rd ed. Mountain View, Calif, Mayfield, 1999

13. Klein KJ, Sorra JS: The challenge of innovation implementation. Academy of Management Review 21:1055–1080, 1996

14. Real K, Poole MS: Innovation implementation: conceptualization and measurement in organizational research. Research in Organizational Change and Development 15:63–134, 2005

15. Rosenheck RA: Organizational process: a missing link between research and practice. Psychiatric Services 52:1607–1612, 2001

16. Shortell SM, Zazzali JL, Burns LR, et al: Implementing evidence-based medicine: the role of market pressures, compensation incentives, and culture in physician organizations. Medical Care 39(July suppl):I62–I78, 2001

17. Simpson DD: A conceptual framework for transferring research to practice. Journal of Substance Abuse Treatment 22:171–182, 2002

18. Schoenwald SK, Kelleher K, Weisz JR, et al: Building bridges to evidence-based practice: the MacArthur Foundation Child System and Treatment Enhancement Projects (Child STEPs). Administration and Policy in Mental Health 35:66–72, 2008

19. Glisson C, Landsverk J, Schoenwald SK, et al: Assessing the organizational social context (OSC) of mental health services: implications for research and practice. Administration and Policy in Mental Health 35:98–113, 2008

20. Schoenwald SK, Chapman JE, Kelleher K, et al: A survey of the infrastructure for children's mental health services: implications for the implementation of empirically supported treatments (ESTs). Administration and Policy in Mental Health 35:84–97, 2008

21. NIH Roadmap for Medical Research. Bethesda, Md, Division of Strategic Coordination, Office of Portfolio Analysis and Strategic Initiatives, 2007. Available at nihroadmap.nih.gov

22. Hopper K, Jost J, Hay T, et al: Homelessness, severe mental illness, and the institutional circuit. Psychiatric Services 48:659–665, 1997

23. Ware NC, Tugenberg T, Dickey B, et al: An ethnographic study of the meaning of continuity of care in mental health services. Psychiatric Services 50:395–400, 1999

24. Willging CE, Salvador M, Kano M: Pragmatic help seeking: how sexual and gender minority groups access mental health care in a rural state. Psychiatric Services 57:871–874, 2006

25. Aarons GA, Palinkas LA: Implementation of evidence-based practice in child welfare: service provider perspectives. Administration and Policy in Mental Health and Mental Health Services Research 34:411–419, 2007

26. Isett KR, Burnam MA, Coleman-Beattie B, et al: The state policy context of implementation issues for evidence-based practices in mental health. Psychiatric Services 58:914–921, 2007

27. Rogers EM: Diffusion of Innovations, 5th ed. New York, Free Press, 2003

28. Kendall PC, Kane M, Howard B, et al: Cognitive-Behavioral Treatment of Anxious Children: Therapist Manual. Ardmore, Pa, Workbook Publishing, 1990

29. Weisz JR, Thurber CA, Sweeney L, et al: Brief treatment of mild-to-moderate child depression using primary and secondary control enhancement training. Journal of Consulting and Clinical Psychology 65:703–707, 1997

30. Barkley RA: Defiant Children: A Clinician's Manual for Assessment and Parent Training, 2nd ed. New York, Guilford, 1997

31. Chorpita BF, Daleiden E, Weisz JR: Modularity in the design and application of therapeutic interventions. Applied and Preventive Psychology 11:141–156, 2005

32. Denzin NK: The logic of naturalistic inquiry, in Sociological Methods. Edited by Denzin NK. Thousand Oaks, Calif, Sage, 1978

33. Glaser BG, Strauss AL: The Discovery of Grounded Theory: Strategies for Qualitative Research. New York, Aldine de Gruyter, 1967

34. Miles MB, Huberman AM: Qualitative Data Analysis: An Expanded Sourcebook, 2nd ed. Thousand Oaks, Calif, Sage, 1994

35. Strauss AL, Corbin J: Basics of Qualitative Research: Techniques and Procedures for Developing Grounded Theory. Thousand Oaks, Calif, Sage, 1998

36. Fraser D: QSR NVivo NUD*IST Vivo Reference Guide. Melbourne, Australia, QSR International, 2000

37. Barwick MA, Boydell KM, Stasiulis E, et al: Knowledge Transfer and Implementation of Evidence-Based Practices in Children's Mental Health. Toronto, Children's Mental Health Ontario, 2005