Is Telepsychiatry Equivalent to Face-to-Face Psychiatry? Results From a Randomized Controlled Equivalence Trial

The provision of psychiatric services to rural and geographically isolated regions challenges most health systems ( 1 ). Countries with shortages of psychiatrists find it inefficient for psychiatrists to travel long distances to see few patients in remote communities. Conversely, it is expensive and often not feasible for patients from remote regions to travel to urban centers for psychiatric care. Interactive videoconferencing, often called telepsychiatry, is a potential solution to this problem ( 2 ). However, the efficacy of telepsychiatry to provide clinical psychiatric services to distant communities has yet to be definitively established ( 3 ).

Researchers have established that telepsychiatry can provide a reliable diagnosis of common psychiatric disorders ( 4 , 5 ) and accurate assessments of cognitive ( 6 ), depressive ( 7 ), anxiety ( 7 ), and psychotic symptoms ( 8 ). However, the assessment of symptoms that require visual observation of behavior, such as emotional affect, appears less reliable ( 9 ), especially at bandwidths of 128 kilobits per second or less ( 8 , 10 ).

The ultimate test of telepsychiatry is whether it can produce clinical outcomes that are at least equivalent to those achieved through face-to-face service. Two small studies examining clinical outcomes showed no statistically significant differences in outcomes between patients seen via telepsychiatry and those seen in person ( 11 , 12 ). In the only reported randomized controlled trial of telepsychiatry for adults, 119 U.S. veterans with depression were randomly assigned to six months of outpatient treatment in person or by telepsychiatry ( 13 ). They received medications, psychoeducation, and supportive counseling. No between-group differences were observed in depressive symptoms, adherence to appointments, adherence to medication regimens, dropout rates, or satisfaction levels. A second small (N=28) randomized controlled trial of telepsychiatry evaluating an eight-week course of cognitive-behavioral therapy for children reported "decreasing symptoms of childhood depression over videoconferencing at rates comparable to face-to-face" ( 14 ).

All published outcome studies, including the randomized controlled trial of adults with depression ( 13 ), used comparative methods rather than equivalence methods for the determination of sample size, analysis of data, and interpretation of results. Comparative studies often incorrectly conclude that a failure to detect a statistically significant difference in outcome implies "equivalence." However, the lack of a statistically significant difference does not mean equivalence ( 15 , 16 ). "While non-significant P values from tests of equality indicate that trial results have not conclusively established the superiority of an experimental regimen, these tests do not address the issue of equivalence which requires the use of confidence intervals" ( 17 ) and predetermined equivalence margins ( 15 ).

We addressed this limitation of previous telepsychiatry research by using methods specifically designed to test whether two interventions are equivalent. We describe a variety of clinical outcomes among outpatients with mixed psychiatric diagnoses who were referred by their family physician and randomly assigned to receive psychiatric consultation and short-term follow-up either in person or by telepsychiatry. We predicted that patients referred by their family physician for a psychiatric consultation and, if needed, short-term follow-up would have equivalent clinical outcomes regardless of whether they were seen via telepsychiatry or in person. We also predicted that telepsychiatry would be less expensive than in-person care.

Methods

The research ethics committees of the University of Western Ontario and the Thunder Bay Regional Hospital reviewed and approved this study. All participants were provided with written and verbal information about the nature of the study and consented to take part.

Study design

Participants were sampled from referrals to the psychiatric consultation clinic of the Thunder Bay Regional Hospital. We used a sample size calculation and analytical methods designed for equivalence trials ( 15 ). In this approach, we first prespecify delta (Δ), the largest absolute difference between telepsychiatry and face-to-face care that would still allow us to conclude that the two interventions are equivalent. This is called the equivalence margin, and the interval from -Δ to +Δ is the range within which the difference between the interventions can lie and still be of no clinical importance ( 15 ). Because our main interest is in showing that telepsychiatry is not inferior to face-to-face care, we are concerned with the lower limit of this range (-Δ); in our analysis, we check whether the lower limit of the confidence interval (CI) for the difference between the groups on each outcome measure falls below -Δ.
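Stated formally (a sketch in our own notation, not notation taken from the cited sources), the one-sided equivalence test described above compares the hypotheses below, where θ is the true difference in outcome between groups, oriented so that larger values favor telepsychiatry, and L₉₅ is the lower limit of the one-sided 95% CI for θ:

```latex
\begin{aligned}
&H_0:\ \theta \le -\Delta \quad \text{versus} \quad H_1:\ \theta > -\Delta,
  \qquad \theta = \mu_{\text{tele}} - \mu_{\text{face}} \\
&\text{conclude equivalence (non-inferiority) if } L_{95} > -\Delta
\end{aligned}
```

Rejecting H₀ at a one-sided alpha of .05 is the same decision as finding that the lower limit of the one-sided 95% CI lies above -Δ.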

Sample size was determined for our primary outcome, the difference between groups in the proportions of patients moving from dysfunctional (case) to functional (noncase) status on the Brief Symptom Inventory (BSI) ( 18 ) four months after the initial psychiatric consultation. We used expert clinical judgment ( 15 ) to choose the lower limit of the difference in proportions that would still be consistent with clinical equivalence (-Δ=-.15) ( 17 ). If the difference in improvement between the intervention and control groups falls within this predetermined equivalence margin, the treatments are considered equally effective, or equivalent, even though one can never actually "prove" equivalence ( 19 ).

With a one-sided alpha of .05 and statistical power of 80%, the sample size formula of Jones and colleagues ( 15 ) for the one-sided comparison of proportions in equivalence trials indicated a requirement of 138 patients per group, or 276 patients in total.
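The arithmetic behind the 138-per-group figure can be checked with a short sketch. It assumes the common normal-approximation form n = 2p(1 - p)(z₁₋α + z₁₋β)² / Δ² and, because the expected proportion p is not reported in the text, it assumes p = 0.5 (the most conservative, maximum-variance choice). The function name is hypothetical; this is an illustration, not the authors' calculation.

```python
import math
from statistics import NormalDist


def n_per_group_proportions(delta, p=0.5, alpha=0.05, power=0.80):
    """Per-group n for a one-sided equivalence (non-inferiority) comparison
    of two proportions, assuming both groups share response rate p and the
    true difference is zero. p = 0.5 is an assumption, not a study value."""
    z_alpha = NormalDist().inv_cdf(1 - alpha)  # one-sided critical value
    z_beta = NormalDist().inv_cdf(power)       # value giving the target power
    variance = 2 * p * (1 - p)                 # variance of the difference x n
    return math.ceil(variance * (z_alpha + z_beta) ** 2 / delta ** 2)


n = n_per_group_proportions(delta=0.15)
print(n, 2 * n)  # 138 276
```

Under these assumptions the sketch reproduces the 138 per group (276 total) stated above; a smaller assumed p would lower the requirement.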

Because loss to follow-up was high, we recruited additional participants to reach the full sample of completers. The first 42 patients were allocated to groups by coin flip; thereafter, block randomization (computer-generated random numbers in blocks of eight) was used to control for any change in referral pattern over the 30-month duration of the study.
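A minimal sketch of permuted-block allocation in blocks of eight follows. It assumes 1:1 allocation within each block (four per arm), which the design implies but the text does not state, and it uses Python's `random.shuffle` as a stand-in for the unspecified computer algorithm. The function and arm labels are hypothetical, and the initial 42 coin-flip allocations are not modeled.

```python
import random


def block_randomize(n, block_size=8, arms=("telepsychiatry", "face-to-face"), seed=None):
    """Return an allocation schedule of length n built from permuted blocks,
    with an equal number of each arm inside every complete block."""
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)
    per_arm = block_size // len(arms)
    schedule = []
    while len(schedule) < n:
        block = [arm for arm in arms for _ in range(per_arm)]
        rng.shuffle(block)  # random order within the block
        schedule.extend(block)
    return schedule[:n]


schedule = block_randomize(24, seed=2001)
for start in range(0, len(schedule), 8):
    block = schedule[start:start + 8]
    print(block.count("telepsychiatry"), block.count("face-to-face"))  # 4 4
```

Because every block is balanced, the difference in group sizes can never exceed four, so a shift in referral pattern partway through recruitment affects both arms almost equally.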

Inclusion criteria

Patients were eligible if they were aged 18 to 65, were from the Thunder Bay region (officially designated as an underserviced area for psychiatry), and were referred by a family doctor to the psychiatric outpatient department of the local general hospital. Patients were excluded if their family doctor considered them incapable of consenting to the study or if the referral was primarily for a medico-legal or insurance assessment. All eligible patients received a letter explaining the nature of the study. Shortly afterward, a research assistant, who was an experienced registered nurse, contacted them by telephone. The research assistant answered questions about the study and, if the patient was willing to proceed, administered the BSI over the telephone. Only patients whose initial BSI score was in the dysfunctional range were randomly assigned to one of the study groups.

Equipment

The interactive videoconference equipment consisted of a Polycom 512 View Station and a Sony Trinitron 68.5-centimeter diagonal screen. The connection was made by using three ISDN lines delivering a bandwidth of 384 kilobits per second.

Clinical services

Participants in each arm of the study attended the Thunder Bay Regional Hospital for service. Four psychiatrists from London, Ontario, located approximately 1,000 kilometers from Thunder Bay, provided the service. They traveled by air to Thunder Bay to provide in-person service and connected via interactive videoconference from London for the telepsychiatry service. Each psychiatrist was assigned an equal number of participants in each arm of the study.

The clinical service provided in the study was modeled on the outpatient service already in use in Thunder Bay. The psychiatrists sent a handwritten form with recommendations to the family physician by facsimile within 48 hours of the initial assessment, followed by a full typed report. The psychiatrists decided, on the basis of clinical need, whether patients should return for follow-up visits. Patients could be seen within the protocol for up to four months after the initial assessment. When needed, follow-up visits were scheduled at monthly intervals for each group.

Treatment recommendations included medication management, psychoeducation, supportive counseling, and triage to other local services. In the vast majority of cases, medications recommended by the psychiatrists were prescribed by the family physician. This is typical of practice in the underserviced areas where telepsychiatry would most often be used. However, the psychiatrists could prescribe directly when the need arose. The psychiatrists frequently referred patients to a short-term psychotherapy program, community-based case-management services, various self-help groups, and recreation programs. The psychiatrists were instructed to provide services in the same manner to participants in each group. As a check on the similarity of services provided to the two groups, the research assistant reviewed the handwritten forms completed by the psychiatrists and recorded whether medications or referrals to other community services were recommended.

Research scales

The BSI is a 53-item self-report psychological symptom inventory; lower scores indicate fewer symptoms ( 18 ). The Global Severity Index (GSI) subscale of the BSI is calculated from the raw scores and is the most sensitive indicator of distress from psychiatric symptoms. Raw scores are converted into standardized T scores. Under the operational rule, a respondent is classified as a "case" if the GSI T score is 63 or greater or if the T scores on any two of the nine primary dimensions are 63 or greater. A respondent classified as a case is in the dysfunctional range ( 20 ). The same trained research assistant gave participants the recommended brief standardized instructions by telephone at the screening contact and by mail at the four-month follow-up.
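The caseness rule above is easy to misread (it is an "or" of two criteria), so a short sketch may help. It assumes the T scores have already been obtained from the normative tables in the BSI manual, which are not reproduced here; the function name is hypothetical.

```python
CASE_T_THRESHOLD = 63  # T-score cut-off used by the operational rule


def is_bsi_case(gsi_t, dimension_t):
    """Return True if a respondent meets the BSI 'case' rule.

    gsi_t: GSI T score.
    dimension_t: T scores for the nine primary symptom dimensions.
    """
    if len(dimension_t) != 9:
        raise ValueError("expected T scores for the nine primary dimensions")
    elevated = sum(t >= CASE_T_THRESHOLD for t in dimension_t)
    return gsi_t >= CASE_T_THRESHOLD or elevated >= 2


print(is_bsi_case(65, [55] * 9))             # True: GSI criterion
print(is_bsi_case(60, [63, 66] + [55] * 7))  # True: two elevated dimensions
print(is_bsi_case(60, [70] + [55] * 8))      # False: only one elevated dimension
```

On this rule, the primary outcome for a participant is simply a baseline result of True followed by a four-month result of False.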

On the initial assessment visit, participants completed the Medical Outcomes Study Short Form (SF-36), a 36-item self-report health survey suitable for self-administration or for administration by a trained interviewer in person or by telephone ( 21 ). It yields an eight-scale profile of scores. Although we planned to use only the five-item mental health subscale, we administered the full SF-36 because this is the usual manner of completion. Scores on the mental health subscale range from 0 to 100, with higher scores indicating better functioning. Because the study was done in Canada, we used Canadian norm-based scores, which are standardized to a mean of 50 by using Canadian weights ( 22 ).

The Client Satisfaction Questionnaire (CSQ-8) is an eight-item self-report scale with brief written instructions. Scores range from 8 to 32, with higher scores indicating higher satisfaction ( 23 , 24 , 25 , 26 ).

At four months, the BSI, SF-36, and CSQ-8 were mailed to all participants with a stamped, addressed return envelope. All scales included standardized instructions for self-report. Two further mailings were sent to noncompleters. In a pilot project measuring outcomes for telepsychiatry, we found that administering the BSI and SF-36 by telephone, in person, and by mail yielded baseline and four-month scores with the expected values and direction of change ( 27 ). We therefore considered it valid to administer the baseline BSI by telephone and the four-month BSI by mail in the full study.

Admissions

Psychiatric admissions to the Thunder Bay general hospital and the regional psychiatric hospital were noted for all participants. It was not possible to determine admissions to psychiatric units outside of the Thunder Bay region. However, the geographical location, 480 kilometers from the nearest alternative psychiatric unit, makes it unlikely that many patients were admitted elsewhere. There is no reason to believe that participants in either group would be more likely to leave the region.

Costs

Details of fees paid to the psychiatrists and of reimbursement of the expenses they incurred (on the basis of receipts) when traveling to and staying in Thunder Bay were collected throughout the study. ISDN line rental charges and per-minute connection costs were obtained from the telephone company's monthly invoices. The capital cost of the videoconference equipment was depreciated over a five-year period. All participants attended the same office in the outpatient department of the distal (Thunder Bay) site for clinical service. However, an additional office was used at the proximal (London) site to house the videoconference equipment, and market-rate rent for this office was added to the costs of telepsychiatry.

Outcome variables

The primary outcome is the proportion of participants whose BSI score moved from dysfunctional to functional range (that is, the patient moved from being classified as a case to being classified as a noncase). The BSI was chosen because it measures overall distress from psychiatric symptoms, and distress is an important reason that patients seek help. Secondary outcome variables were the proportion of participants with any psychiatric admission during the 12 months after the initial assessment, change in scores on the GSI subscale of the BSI, change in scores on the mental health subscale of the SF-36 ( 21 ) standardized on a Canadian population ( 22 ), score on the CSQ-8 at four months, and cost of providing psychiatric assessment and follow-up.

Analysis

The two groups were compared on major baseline variables to check whether randomization had produced comparable groups. We used equivalence methods ( 15 ) to analyze outcome data for BSI case status, hospital admissions, scores on the GSI subscale of the BSI, scores on the mental health subscale of the SF-36, and scores on the CSQ-8.

BSI. We constructed a CI centered on the observed difference in the proportions of participants moving from dysfunctional to functional status on the BSI between baseline and four months. Because the objective was to establish that telepsychiatry is not inferior to in-person service, we constructed a lower one-sided 95% CI by using the method of Pagano and Gauvreau ( 28 ).
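A minimal sketch of a lower one-sided 95% confidence limit for a difference in proportions is shown below. It uses the standard normal-approximation (Wald) interval found in introductory biostatistics texts; whether this matches the exact procedure in Pagano and Gauvreau is an assumption. The counts are hypothetical, chosen only to resemble roughly 20% improvement per group, and are not the study's data.

```python
import math
from statistics import NormalDist


def lower_bound_diff_proportions(x_tele, n_tele, x_face, n_face, level=0.95):
    """Lower one-sided confidence limit for p_tele - p_face (Wald interval).

    x_*: participants who moved from case to noncase in each group.
    n_*: participants analyzed in each group.
    """
    p_tele, p_face = x_tele / n_tele, x_face / n_face
    se = math.sqrt(p_tele * (1 - p_tele) / n_tele + p_face * (1 - p_face) / n_face)
    z = NormalDist().inv_cdf(level)  # about 1.645 for a one-sided 95% limit
    return (p_tele - p_face) - z * se


# Hypothetical counts (not the study's data).
lb = lower_bound_diff_proportions(30, 150, 31, 150)
print(round(lb, 3), lb > -0.15)  # -0.083 True: limit lies above -delta = -.15
```

The same function could serve for the admissions comparison with -Δ = -.10; because fewer admissions is better, one way to orient that difference is face-to-face minus telepsychiatry.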

Admissions. To compare the proportions of participants in each group with at least one admission during the 12 months after the initial consultation, we used methods suitable for testing the equivalence of two proportions, with a predetermined lower limit of the equivalence margin of -Δ=-.10. Research team members, in consultation with psychiatrist colleagues working in both clinical and research practice, agreed that a difference of 10% or less was a conservative margin. We calculated cumulative hospital days for each group to help interpret the results. We did not use the mean number of admissions or of hospital days, because we anticipated (and found) that these data were skewed.

GSI. We also tested for equivalence of mean improvement in scores on the GSI, the BSI subscale that is the most sensitive single indicator of distress ( 20 ). We set the lower limit of the predetermined equivalence margin at -Δ=-5; this choice is conservative, because a difference of 7 in GSI scores is considered clinically significant ( 18 , 29 ).

Mental health subscale of SF-36. A 5-point difference in the score on the mental health subscale is considered the smallest clinically significant difference. To detect a difference of this size, we needed 132 participants per group ( 30 ), which is compatible with the number required for testing the primary outcome. We set the lower limit of the predetermined equivalence margin (-Δ) at -5 and used SPSS to obtain the lower one-sided 95% CI centered on the observed difference in mean improvement scores on the mental health subscale.

CSQ-8. Using SPSS, we constructed a lower one-sided CI centered on the observed difference in mean four-month scores. The lower limit of the equivalence margin (-Δ) was set at -2 on the basis of clinical expertise, extrapolating from studies such as that of Gill and colleagues ( 31 ), who found a main effect when CSQ-8 scores differed by 3 to 4 points (22.4–26.4).
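The GSI, SF-36 mental health, and CSQ-8 comparisons share one computation: a lower one-sided 95% limit for a difference in means, compared with a prespecified -Δ. The sketch below works from group summary statistics and, as a simplifying assumption, uses an unpooled standard error with a normal critical value; SPSS reports t-based intervals, whose results are close at these sample sizes (the lower limit of a two-sided 90% CI equals a one-sided 95% lower limit). The summary figures are hypothetical, not the study's data. For GSI improvement, improvement would be baseline minus four-month T score, so that larger values are better.

```python
import math
from statistics import NormalDist

# Lower limits of the equivalence margins (-delta) stated in the Analysis section.
MARGINS = {"GSI improvement": -5.0, "SF-36 MH improvement": -5.0, "CSQ-8 score": -2.0}


def lower_bound_diff_means(mean_tele, sd_tele, n_tele,
                           mean_face, sd_face, n_face, level=0.95):
    """Lower one-sided confidence limit for mean_tele - mean_face.

    Uses an unpooled standard error and a normal critical value, a
    large-sample simplification of the t-based interval.
    """
    se = math.sqrt(sd_tele ** 2 / n_tele + sd_face ** 2 / n_face)
    z = NormalDist().inv_cdf(level)  # about 1.645 for a one-sided 95% limit
    return (mean_tele - mean_face) - z * se


# Hypothetical summary statistics (not the study's data): mean GSI improvement.
lb = lower_bound_diff_means(8.0, 9.0, 90, 7.5, 9.5, 90)
print(round(lb, 2), lb > MARGINS["GSI improvement"])  # -1.77 True
```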

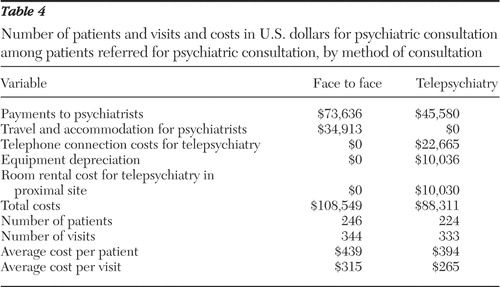

Costs. We calculated the average cost per patient by dividing the total cost for each group by the number of patients randomly assigned to that group. Because costs could not be attributed to individual patients, standard deviations are not available. Because participants attended the same hospital for both telepsychiatry and face-to-face services, their own expenses did not differ between the two arms of the study.
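The cost arithmetic described above can be sketched as follows. Straight-line depreciation, the 30-month charging period, and every figure in the usage lines are assumptions made for illustration; they are not the study's costs, which appear in Table 4. Function names are hypothetical.

```python
def straight_line_share(capital_cost, life_years=5, months_used=30):
    """Portion of an equipment purchase charged to a project lasting
    months_used months, assuming straight-line depreciation."""
    return capital_cost / (life_years * 12) * months_used


def average_cost_per_patient(total_cost, n_randomized):
    """Group total divided by the number randomly assigned to the group."""
    return total_cost / n_randomized


def percent_saving(cost_tele, cost_face):
    """Percentage by which telepsychiatry is cheaper than face-to-face care."""
    return 100 * (cost_face - cost_tele) / cost_face


# Hypothetical figures (not the study's data).
equipment = straight_line_share(60_000)  # 30,000 charged over 30 months
tele_total = 20_000 + 6_000 + equipment  # e.g., fees + line charges + equipment
face_total = 40_000 + 25_000             # e.g., fees + travel and accommodation
tele_avg = average_cost_per_patient(tele_total, 150)
face_avg = average_cost_per_patient(face_total, 150)
print(round(tele_avg, 2), round(face_avg, 2), round(percent_saving(tele_avg, face_avg), 1))
```

Because only group totals are divided, this yields a single average per group with no spread, which is why no standard deviations can be reported.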

Results

Disposition and characteristics of patients

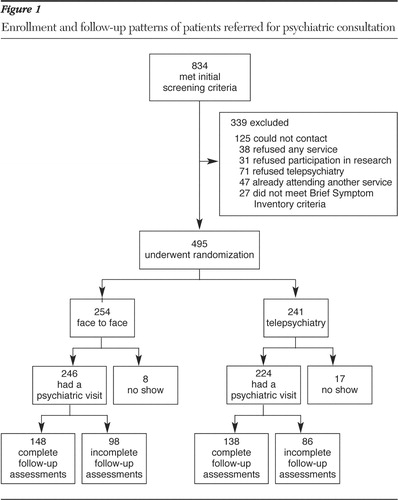

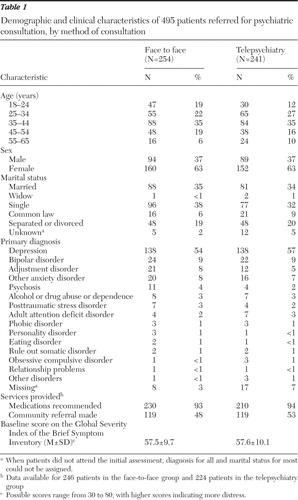

The study was conducted between 2001 and 2004. Figure 1 depicts the enrollment, random assignment, and follow-up of study patients. Table 1 shows the baseline demographic and clinical characteristics of the two groups. There were no significant differences between the groups on baseline measures or on the measures of services provided. Completers and noncompleters were similar in both groups, with two exceptions: in both groups, completers were more often married, and in the face-to-face group noncompleters used more hospital days than completers, whereas in the telepsychiatry group the opposite was true. These differences would not challenge our hypothesis.


Clinical outcomes and hospital use

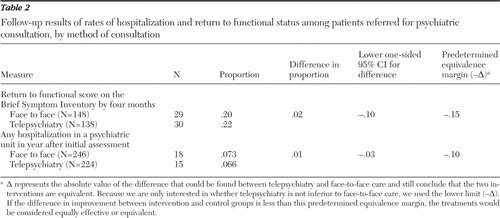

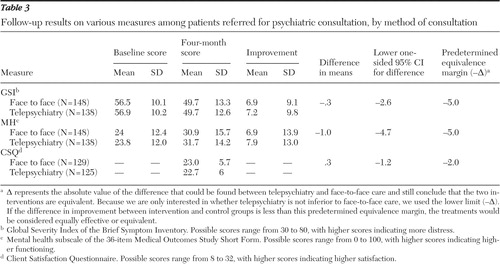

Tables 2 and 3 show the results of equivalence testing for the primary and secondary outcome measures, using the predetermined equivalence margins. All results support the hypothesis that telepsychiatry produces outcomes equivalent to those of face-to-face care.


As expected, scores on the BSI (GSI subscale) and SF-36 (mental health subscale) showed that patients reported less distress from symptoms and improved mental health after the clinical intervention in both groups. The levels of improvement were consistent with those considered to be clinically and socially relevant by the authors of these scales ( 18 , 29 , 30 ) and in the literature in which the BSI has been used to measure outcomes for patients similar to those in our trial ( 32 ). The CSQ-8 indicated a moderate degree of satisfaction ( 33 ). We conducted an intent-to-treat analysis for the proportion of participants hospitalized within 12 months from initial consultation, and this analysis showed equivalence between the two groups.

Costs

Table 4 shows that face-to-face services required travel and accommodation expenses for the psychiatrists that were unnecessary when using telepsychiatry. Face-to-face services also required larger fees for psychiatrists to compensate for travel time. These costs were greater than the technical costs of telepsychiatry. The average cost of telepsychiatry was 10% less per patient (16% less per visit) than the cost of in-person service.


Discussion

Using equivalence methods, we demonstrated that psychiatric consultation and short-term follow-up provided by telepsychiatry can produce clinical outcomes that are equivalent to those achieved when patients are assessed and followed in-person. On the primary outcome, approximately 20% of each group moved from a dysfunctional to functional rating. This is a modest proportion because we used a stringent test of effectiveness: the change from a positive psychiatric diagnosis to functional status, or a patient's moving from being a case to a noncase ( 20 ). The GSI baseline, four-month, and improvement scores were similar in magnitude to those found in a study of different types of psychotherapy for major depressive disorder ( 32 ). In addition, our finding of clinically significant improvements and equivalence in the primary outcome is supported by the analysis of the other outcomes, as measured by hospitalization and mean improvement in the GSI and mental health subscales.

The clinical service provided via telepsychiatry was less expensive than when it was provided in person. This finding, coupled with the equivalent clinical outcomes, suggests that telepsychiatry can be a cost-effective method for delivering psychiatric services. Our study provided services to a remote community, which required air travel and overnight stays. As noted elsewhere ( 34 ), the relative cost of telepsychiatry and in-person care is influenced by several factors, such as the distance traveled, the volume of patients, and the type of technology. Therefore, the cost savings to the service provider in this study may not be realized in other settings. Furthermore, the costs in this study were assessed solely from the perspective of the provider. Patients traveled to the Thunder Bay Regional Hospital whether they received service in person or via telepsychiatry, so there is no reason to expect their travel expenses or time taken from work to differ between groups. Other research has suggested that telepsychiatry, used under certain conditions, can reduce costs to service users ( 35 ).

A major strength of our study was that it was conducted in a remote, underserviced area and thus replicated the conditions in which telepsychiatry is most likely to be used. We minimized exclusion criteria to ensure the inclusion of a broad range of patients, similar to the usual referrals from primary care physicians to psychiatrists.

However, the naturalistic nature of the service also produced limitations. Because of the broad inclusion criteria, we did not limit psychiatric care to a carefully defined, diagnosis-specific therapeutic protocol. Psychiatrists were instructed to provide the same type and level of service to patients seen in person and by telepsychiatry, and data on services provided ( Table 1 ) suggest that similar care was actually provided to both groups. However, there may still have been subtle differences in the way patients in each group were managed.

A second limitation was the high rate of noncompletion of the four-month research scales. Although we continued to recruit patients until we had the required number of participants, only 58% of participants initially randomized to the groups completed these scales. As a result we were able to do only a per-protocol analysis on these outcomes. However, a per-protocol analysis is considered by many to be more important than an intent-to-treat analysis for equivalence trials ( 15 ). It is important to note that we were able to perform an intent-to-treat analysis on the risk of hospitalization in the year after consultation, for which we had full data, and this analysis also showed equivalence.

The low completion rate was probably influenced by two factors: consultation was available more quickly through the study than through regular local services, and most participants had ended their clinical contact a number of months before they were required to complete the final research scales. These factors likely contributed to the recruitment of a cohort of participants with low motivation to complete the research component of the study.

We did not measure satisfaction with the technical components of telepsychiatry as has been done in other studies. This would have been possible only for the telepsychiatry group. Rather, we used the opportunity provided by the randomized controlled trial to compare satisfaction with the clinical service provided to the two groups using a standard questionnaire, the CSQ-8. The results demonstrated equivalent levels of satisfaction in both face-to-face and telepsychiatry groups.

Ten percent of patients who were initially contacted refused participation in the study because of an unwillingness to use telepsychiatry. This figure is lower than the 33% of residents of a rural area of Iowa who said that they would be unwilling to use telepsychiatry if they needed mental health services ( 36 ). The higher rate in the Iowa study may be because the researchers surveyed a general community population rather than individuals referred for psychiatric assessment. Nevertheless, there appears to be a group of individuals who are averse to the use of telepsychiatry. Administrators developing telepsychiatry programs may need to maintain some parallel face-to-face service to meet the needs of this group.

Conclusions

Our findings indicate that psychiatric consultation and short-term follow-up provided by telepsychiatry can produce clinical outcomes that are equivalent to those achievable when patients are seen face to face. In our setting telepsychiatry was less expensive than face-to-face service, although the relative cost of the two modes of service delivery is likely to be influenced by factors such as the distance between sites and service volume. It is important to recognize that we examined a single psychiatric service: psychiatric consultation and short-term follow-up. It is possible that telepsychiatry may not produce equivalent outcomes when used to deliver other mental health services, such as psychotherapy, which is more dependent on the therapist-patient relationship. Nevertheless, the findings are likely to encourage those who advocate a more widespread adoption of telepsychiatry to counter the shortage of psychiatrists in remote regions.

Acknowledgments and disclosures

This study was supported by grant R2354-A01 from the Ontario Mental Health Foundation and by NORTH Network. The authors thank Emmanuel Persad, M.B., for his helpful advice and assistance and Allan Donner, Ph.D., for his advice on statistics.

The authors report no competing interests.

1. El-Guebaly N, Kingstone E, Rae-Grant Q, et al: The geographical distribution of psychiatrists in Canada: unmet needs and remedial strategies. Canadian Journal of Psychiatry 38:212–216, 1993

2. Brown FW: Rural telepsychiatry. Psychiatric Services 49:963–964, 1998

3. Monnier J, Knapp RG, Frueh BC: Recent advances in telepsychiatry: an updated review. Psychiatric Services 54:1604–1609, 2003

4. Ruskin PE, Reed S, Kumar R, et al: Reliability and acceptability of psychiatric diagnosis via telecommunication and audiovisual technology. Psychiatric Services 49:1086–1088, 1998

5. Elford R, White H, Bowering R, et al: A randomized, controlled trial of child psychiatric assessments conducted using videoconferencing. Journal of Telemedicine and Telecare 6:73–82, 2000

6. Ball CJ, Scott N, McLaren PM, et al: Preliminary evaluation of a low-cost video conferencing (LCVC) system for remote cognitive testing of adult psychiatric patients. British Journal of Clinical Psychology 32:303–307, 1993

7. Baer L, Cukor P, Jenike MA, et al: Pilot studies of telemedicine for patients with obsessive-compulsive disorder. American Journal of Psychiatry 152:1383–1385, 1995

8. Zarate CA Jr, Weinstock L, Cukor P, et al: Applicability of telemedicine for assessing patients with schizophrenia: acceptance and reliability. Journal of Clinical Psychiatry 58:22–25, 1997

9. Jones BN 3rd, Johnston D, Reboussin B, et al: Reliability of telepsychiatry assessments: subjective versus observational ratings. Journal of Geriatric Psychiatry and Neurology 14:66–71, 2001

10. Yoshino A, Shigemura J, Kobayashi Y, et al: Telepsychiatry: assessment of televideo psychiatric interview reliability with present- and next-generation internet infrastructures. Acta Psychiatrica Scandinavica 104:223–226, 2001

11. Zaylor C: Clinical outcomes in telepsychiatry. Journal of Telemedicine and Telecare 5(suppl 1):S59–S60, 1999

12. Kennedy C, Yellowlees P: The effectiveness of telepsychiatry measured using the Health of the Nation Outcome Scale and the Mental Health Inventory. Journal of Telemedicine and Telecare 9:12–16, 2003

13. Ruskin PE, Silver-Aylaian M, Kling MA, et al: Treatment outcomes in depression: comparison of remote treatment through telepsychiatry to in-person treatment. American Journal of Psychiatry 161:1471–1476, 2004

14. Nelson EL, Barnard M, Cain S: Treating childhood depression over videoconferencing. Telemedicine Journal and E-Health: The Official Journal of the American Telemedicine Association 9:49–55, 2003

15. Jones B, Jarvis P, Lewis JA, et al: Trials to assess equivalence: the importance of rigorous methods. British Medical Journal 313:36–39, 1996

16. Garrett AD: Therapeutic equivalence: fallacies and falsification. Statistics in Medicine 22:741–762, 2003

17. Fleming TR: Design and interpretation of equivalence trials. American Heart Journal 139:S171–S176, 2000

18. Piersma HL, Reaume WM, Boes JL: The Brief Symptom Inventory (BSI) as an outcome measure for adult psychiatric inpatients. Journal of Clinical Psychology 50:555–563, 1994

19. Hauck WW, Anderson S: Some issues in the design and analysis of equivalence trials. Drug Information Journal 33:109–118, 1999

20. Derogatis LR: BSI Brief Symptom Inventory: Administration, Scoring, and Procedures Manual, 4th ed. Minneapolis, National Computer Systems, 1993

21. Ware JE Jr, Gandek B: Overview of the SF-36 Health Survey and the International Quality of Life Assessment (IQOLA) Project. Journal of Clinical Epidemiology 51:903–912, 1998

22. Hopman WM, Towheed T, Anastassiades T, et al: Canadian normative data for the SF-36 Health Survey: Canadian Multicentre Osteoporosis Study Research Group. Canadian Medical Association Journal 163:265–271, 2000

23. Larsen DL, Attkisson CC, Hargreaves WA, et al: Assessment of client/patient satisfaction: development of a general scale. Evaluation and Program Planning 2:197–207, 1979

24. Attkisson CC, Zwick R: The client satisfaction questionnaire: psychometric properties and correlations with service utilization and psychotherapy outcome. Evaluation and Program Planning 5:233–237, 1982

25. Attkisson CC, Greenfield TK: The UCSF Client Satisfaction Scales: I. the Client Satisfaction Questionnaire-8, in The Use of Psychological Testing for Treatment Planning and Outcomes Assessment, 2nd ed. Edited by Maruish MA. Mahwah, NJ, Lawrence Erlbaum Associates, 1999

26. Nguyen TD, Attkisson CC, Stegner BL: Assessment of patient satisfaction: development and refinement of a service evaluation questionnaire. Evaluation and Program Planning 6:299–313, 1983

27. Bishop JE, O'Reilly RL, Maddox K, et al: Client satisfaction in a feasibility study comparing face-to-face interviews with telepsychiatry. Journal of Telemedicine and Telecare 8:217–221, 2002

28. Pagano M, Gauvreau K: Principles of Biostatistics. Belmont, Calif, Duxbury Press, 1993

29. Carscaddon DM, George M, Wells G: Rural community mental health consumer satisfaction and psychiatric symptoms. Community Mental Health Journal 26:309–318, 1990

30. Ware JE: SF-36 Health Survey Manual and Interpretation Guide. Boston, Health Institute, New England Medical Centre, 1993

31. Gill KJ, Pratt CW, Librera LA: The effects of consumer vs staff administration on the measurement of consumer satisfaction with psychiatric rehabilitation. Psychiatric Rehabilitation Journal 21:365–370, 1998

32. Beutler LE, Engle D, Mohr D, et al: Predictors of differential response to cognitive, experiential and self directed psychotherapeutic procedures. Journal of Consulting and Clinical Psychology 59:333–340, 1991

33. Greenwood N, Key A, Burns T, et al: Satisfaction with in-patient psychiatric services: relationship to patient and treatment factors. British Journal of Psychiatry 174:159–163, 1999

34. Hyler SE, Gangure DP: A review of the costs of telepsychiatry. Psychiatric Services 54:976–980, 2003

35. Simpson J, Doze S, Urness D, et al: Telepsychiatry as a routine service—the perspective of the patient. Journal of Telemedicine and Telecare 7:155–160, 2001

36. Rohland BM, Saleh SS, Rohrer JE, et al: Acceptability of telepsychiatry to a rural population. Psychiatric Services 51:672–674, 2000