Innovations: Clinical Computing: An Audio Computer-Assisted Self-Interviewing System for Research and Screening in Public Mental Health Settings

Computer-assisted interviewing (CAI) along with its extension, audio computer-assisted self-interviewing (ACASI), has the potential to collect more accurate information than can be obtained by a face-to-face interview and to make efficient use of scarce interviewer time and personnel with specialized language skills. CAI systems can also speed research by assisting data entry from paper-and-pencil interviews ( 1 ) and can calculate scores immediately and send the results to the respondent or the clinician for immediate feedback, action, or analysis. Computer-administered interviews are usually preferred by respondents in the general population ( 2 ) and by those with a mental health diagnosis ( 3 ). This is especially true when the interview includes sensitive questions about socially undesirable or illegal behavior, such as alcohol use ( 4 ), drug use ( 5 ), and risky sexual activities ( 6 ).

Several products are available for implementing ACASI systems. Two typical commercial systems are the ADS: Audio-Computer Assisted Self Interview Software (www.teratech.com) and the QDS: Questionnaire Development System (www.novaresearch.com). Both systems are rather expensive for the typical public mental health care provider, and they can be difficult and time-consuming to program. Other systems, such as QDT: Questionnaire Design Tool ( 7 ), are part public domain and part proprietary, so access to them is complicated and confusing. In addition, none of these systems contained all the features that our organization required for upcoming projects, so we undertook the development of an ACASI system that would both meet our needs and be freely available to others.

This column describes Talker, which was developed in 2001 at the Nathan Kline Institute by the first author. It continues to be maintained and enhanced by the original author and is now in version 2.7. Talker is an ACASI system intended for use in both service and research settings. The system was designed especially for delivering screening instruments to low-literacy populations as well as to those whose native language is not English. Talker supports ease of programming, multiple languages, on-site scoring, and the ability to update a central research database. Key features include highly readable text display, audio presentation of questions along with audio prompting of answers, and optional touch screen input. Although Talker was designed for collecting data for community-based research and quality improvement projects, it can also be used for routine clinical screening by local mental health and other providers.

Talker operates on any computer that can run Microsoft Windows 2000 or greater. Either a touch monitor or an external touch screen (which overlays the existing monitor) is required if touch screen input is desired. A sound card and speakers or headphones are needed to use audio prompting. The TabletPC is also supported and uses the pen in place of a touch screen. More details as well as a downloadable version of Talker can be found at http://ssrd.rfmh.org/talker. This column describes how Talker can be used to adapt interview instruments for presentation using an ACASI system. Two studies in which Talker was used to collect interview data are described.

Sample Talker script for implementing the Rapid Alcohol Problems Screen

options autotalk;

yesnoq: answer 'Yes' count #N, 'No';

q1: yesno 'Do you sometimes take a drink when you first get up in the morning?';

q2: yesno 'During the last year has a friend or a family member ever told you about things you said or did while you were drinking that you could not remember?';

q3: yesno 'During the last year have you had feelings of guilt or remorse after drinking?';

q4: yesno 'During the last year have you failed to do what was normally expected from you because of drinking?';

q5: yesno 'During the last year have you lost friends or girlfriends or boyfriends because of drinking?';

if #N |> 0 then

say 'Based on your answers, you may have an alcohol problem.';

else

say 'Based on your answers, you do not seem to have an alcohol problem.';

end if;

Talker software

Instruments are created for Talker with a simple domain-specific scripting language that is similar to computer languages such as BASIC and C but much easier to use. High-level statements, such as ANSWER, SAY, PICK, and DATA, are used to specify and present the questions and answers to the respondents. All on-screen formatting is done automatically. Simple skip patterns can be executed using the "IF..GOTO" statement. More complex skip patterns can be implemented using such statements as "IF..END."

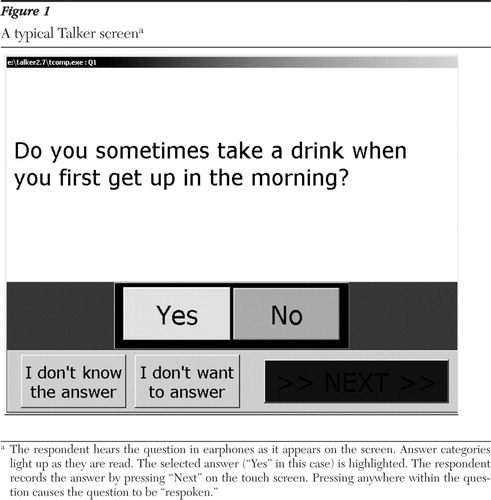

A simple Talker script for implementing the Rapid Alcohol Problems Screen (RAPS) ( 8 ) is shown in the box on this page. In this sample program, the respondent is presented with five yes-no questions. At the end of questioning, the respondent is presented with the results of the RAPS on the basis of the specified cutoff point. The questions and answers are presented on the screen and "read" to the respondent by a computer-synthesized voice. A typical screen view is shown in Figure 1 .

Talker data can be secured by using either the password or the data encryption features of the Windows operating system. If data are saved to a database, the security facilities of Interbase can be used. For total security in clinical applications, the data can be printed at the time of the interview, without saving any data on the computer itself.

Creating Talker instruments

The basic skill required to write Talker scripts is the ability to use a text editor or word processor. It also helps to have a good knowledge of how to manipulate Windows files and directories. Although file skills are not needed to actually write code for instruments, they are helpful when distributing instruments to others. Those with a basic course in computer programming should be able to master the system within several hours. Others may require a day or two of training to learn all the Talker features.

For the most part, converting instruments into Talker scripts was quick and easy. Most of the programming was done by research assistants who were computer literate but who had no formal experience in computer programming. They were able to start programming basic instruments after an hour of training. A few instruments required some complex conditional logic, which took more time to implement and required limited assistance from researchers with programming experience.

Use of Talker in research

The audio prompting and touch screen input capacities of Talker were used in a study of screens for co-occurring disorders in settings including jails and shelters and in a study in New York City job centers to examine the impact of mental health problems and community violence on women's ability to comply with welfare reform's work requirements. Many questions addressed sensitive topics: mental health symptoms, substance use, exposure to community violence and intimate-partner violence, and work experience. Two versions of each screen were created, one in English and another in Spanish.

The co-occurring disorders screen contained about 300 questions, and the job center study had roughly 200 questions. It required about 20 hours to code the instruments. Another 40 hours was spent creating high-quality audio recordings of the questions and answers. An additional ten hours was used to test the instruments and to make any corrections.

The instruments were presented to the respondents by using laptop personal computers equipped with touch screen overlays. Interviews were conducted in various settings, including job centers, treatment venues, and jails. To maintain privacy, the computers were located in quiet areas away from others and headphones were used to provide privacy for the audio presentations. No identifying information was collected on the computer during these sessions.

In both studies, acceptance of the computerized interview was strong. In the job center study, which included 568 women, 43 respondents (8%) initially asked for a research assistant to administer all or part of the interview, although many of those who initially asked for help dismissed the research assistant and used the computer themselves after a few minutes. Thirty-seven of the women who required assistance (86%) completed the interview in Spanish. A total of 511 respondents (90%) reported that the computer was not hard to use; 392 (69%) said that they preferred the computer, 114 (20%) had no preference, and 62 (11%) preferred being interviewed by a person. Among those who preferred the computer, the most common reasons cited were privacy (82 respondents, or 21%) and ease of use (63 respondents, or 16%); 51 (13%) felt that using the computer would take them less time.

For the study of the co-occurring disorders screen, which included 142 respondents (60 men and 82 women), 26 of the men (57%) and 63 of the women (77%) reported being more comfortable with the computer interview. Women were significantly more comfortable than men ( χ2 =6.51, df=1, p<.01). Thirty-two of those with a mental health diagnosis (84%) and 38 of those without a diagnosis (58%) reported being more comfortable with the computer interview ( χ2 =7.77, df=1, p<.005).

For both studies the interviewers reported that respondents appeared engaged in the interview and that they did not break off early.

Limitations

Talker is able to "read" the questions and answers and present them to the respondent using a computer synthesized "voice." Although this voice was adequate for prototyping, we felt it was not of sufficient clarity for use with low-literacy respondents. Therefore, we elected to make high-quality human-voice recordings of the questions and answers, which required a large amount of time. Hopefully, future voice-synthesizer programs will improve to the point where this step is not required.

Finding private areas for the computers, along with the electrical outlets to power them, was not always easy. Current laptops can run for only several hours on batteries, which restricted their use in some settings.

For the most part, the respondents answered most questions, although complex or unclear questions can be more evident when an ACASI system is used. For example, in the job center study more missing values were recorded for questions that had complex answers. Even though the respondent can replay the question and answers as many times a desired, the missing responses may have more to do with the wording of the question and answers than with the modality of presentation. Regardless, simple response scales may be important in minimizing "I don't know" and "I don't want to answer" responses, particularly among respondents with low literacy.

Acknowledgments and disclosures

Development and implementation of this application was supported in part by grant P50-MH-51359 from the National Institute of Mental Health, the New York State Office of Mental Health, and the New York State Office of Alcoholism and Substance Abuse Services.

The authors report no competing interests.

1. O'Reilly JM, Hubbard ML, Lessler JT, et al: Audio and video computer assisted self-interviewing: preliminary tests of new technologies for data collection. Journal of Official Statistics 10:197–214, 1994Google Scholar

2. Forster E, McCleery A: Computer assisted personal interviewing: a method of capturing sensitive information. IASSIST Quarterly 23(2):26–38, 1999Google Scholar

3. Weber B, Schneider B, Fritze J, et al: Acceptance of computerized compared to pencil-and-paper assessment in psychiatric in-patients. Computers in Human Behavior 19:81–93, 2002Google Scholar

4. Butler SF, Chiauzzi E, Bromberg JI, et al: Computer-assisted screening and intervention for alcohol problems in primary care. Journal of Technology in Human Services 21:1–19, 2003Google Scholar

5. Sarrazin M, Vaughan S, Hall JA, et al: A comparison of computer-based versus pencil-and-paper assessment of drug use. Research on Social Work Practice 12:669–683, 2002Google Scholar

6. Cooley PC, Rogers SM, Turner CF, et al: Using touch screen audio-CASI to obtain data on sensitive topics. Computers in Human Behavior 17:285–293, 2001Google Scholar

7. Dignon AM: The development of a language for automating medical interviews. Computers in Human Behavior 12:515–526, 1996Google Scholar

8. Cherpitel CJ: Screening for alcohol problems in the emergency room: a rapid alcohol problems screen. Drug and Alcohol Dependence 40:133–137, 1995Google Scholar